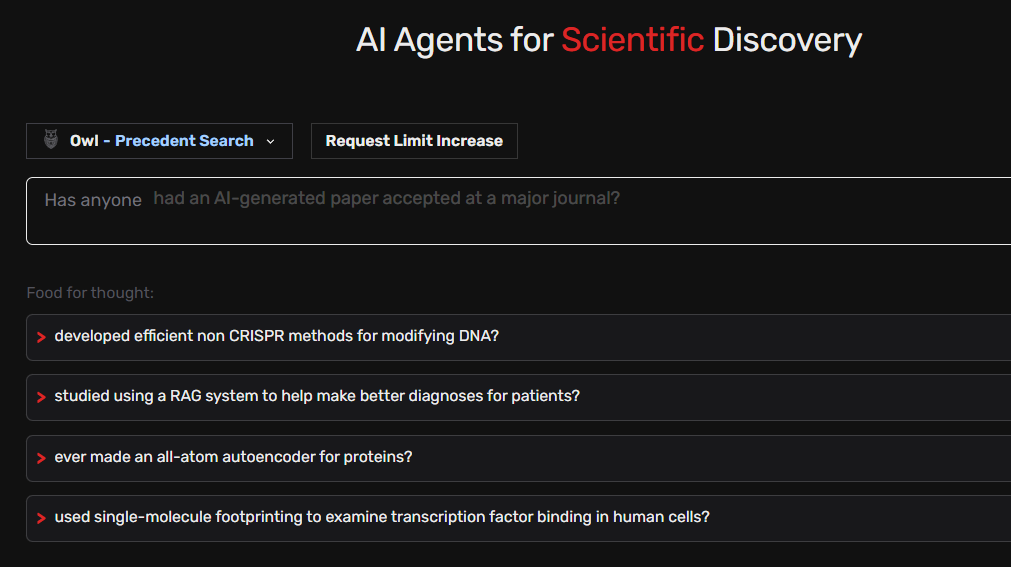

Following our previous evaluations of the FutureHouse Platform’s research agents, this post turns to Owl, the platform’s tool for precedent and concept detection in academic literature. Owl is intended to help researchers determine whether a given concept has already been defined, thereby streamlining theoretical groundwork and avoiding redundant conceptual development. To test its real-world utility, we submitted three concept-based queries: (1) legislative backsliding, (2) executive aggrandisement and (3) stealth authoritarianism. The results of our tests highlight critical limitations in Owl’s ability to retrieve and correctly attribute key conceptual sources, particularly when the relevant literature is not directly indexed or fully accessible through the platform.

Case Studies

On the FutureHouse Platform, the Owl agent requires all queries to begin with the phrase “Has anyone…”, reflecting its core purpose: identifying whether a specific idea or concept has previously appeared in academic literature.

Example 1

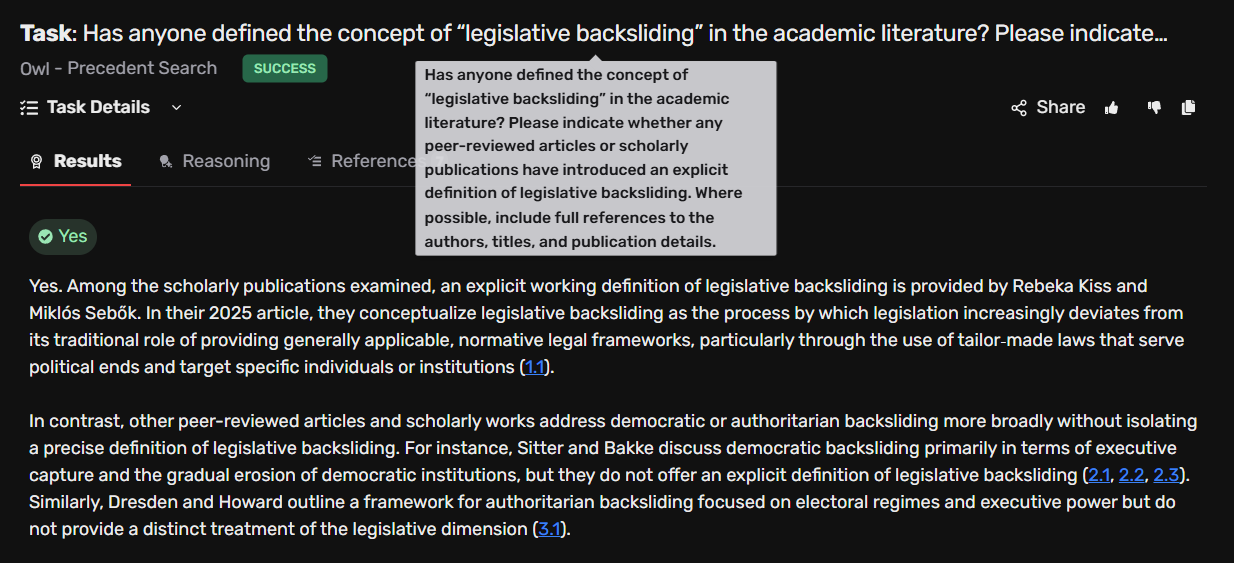

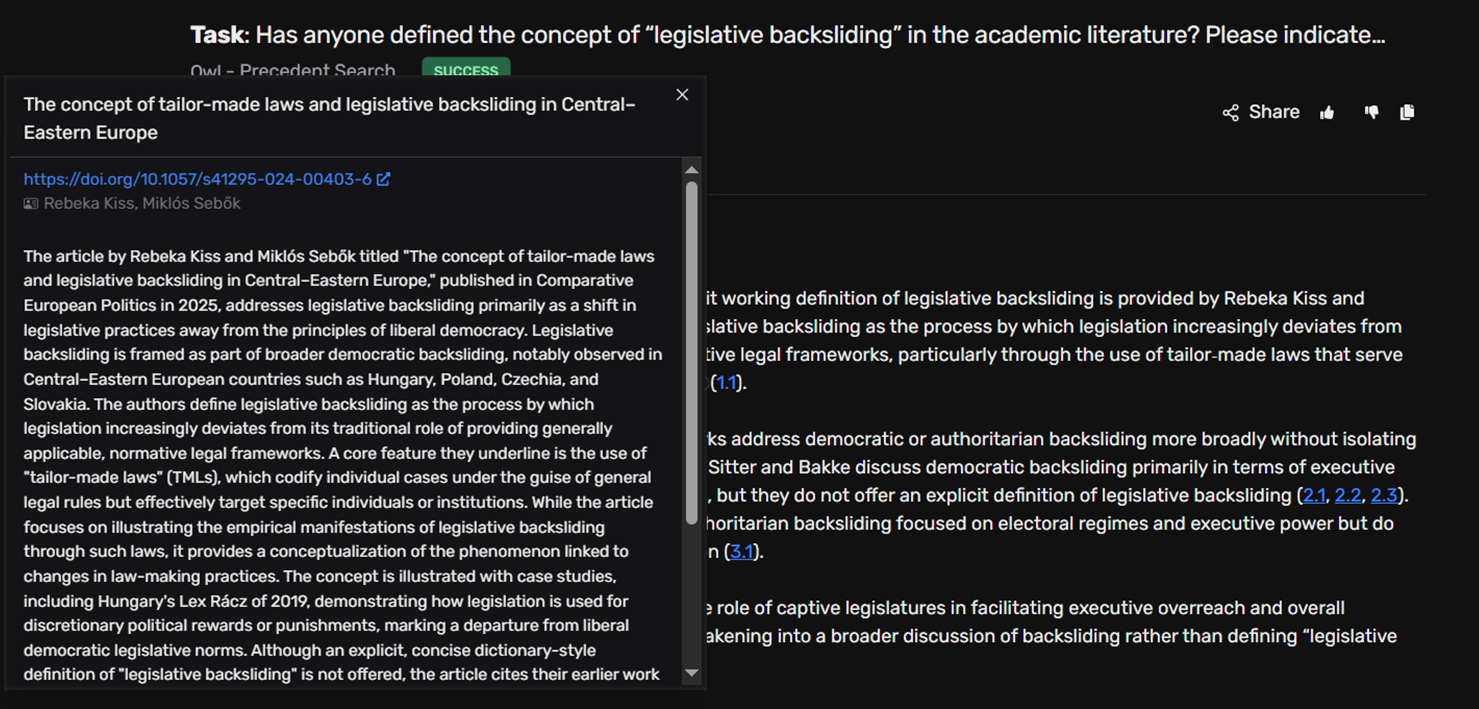

Our first query asked whether the concept of "legislative backsliding" had already been defined in academic literature. The term was in fact formally introduced and operationalised in a 2023 article published in Parliamentary Affairs (Sebők, Kiss & Kovács 2023). This open-access article offers a clear definition, a theoretical foundation and an operationalisation of the concept; however, Owl failed to identify this foundational publication. Instead, the agent retrieved a related but secondary source: a 2025 article published in Comparative European Politics (Kiss & Sebők 2025). While this paper uses the term and explores its relevance in a broader context, it does not introduce the concept. Notably, this 2025 article on tailor-made laws cites the original 2023 article in its introduction. Owl seemingly processed that reference, since the agent incorporated content from sections beyond the abstract, yet it still misattributed the concept's origin, presenting the 2025 article as the defining source.

This result highlights a critical shortcoming: Owl does not reliably trace or prioritise original sources even when the correct definition is explicitly cited in a retrieved paper. Such oversights can lead to incorrect attribution and flawed theoretical framing for scholars trying to map conceptual foundations.

Example 2

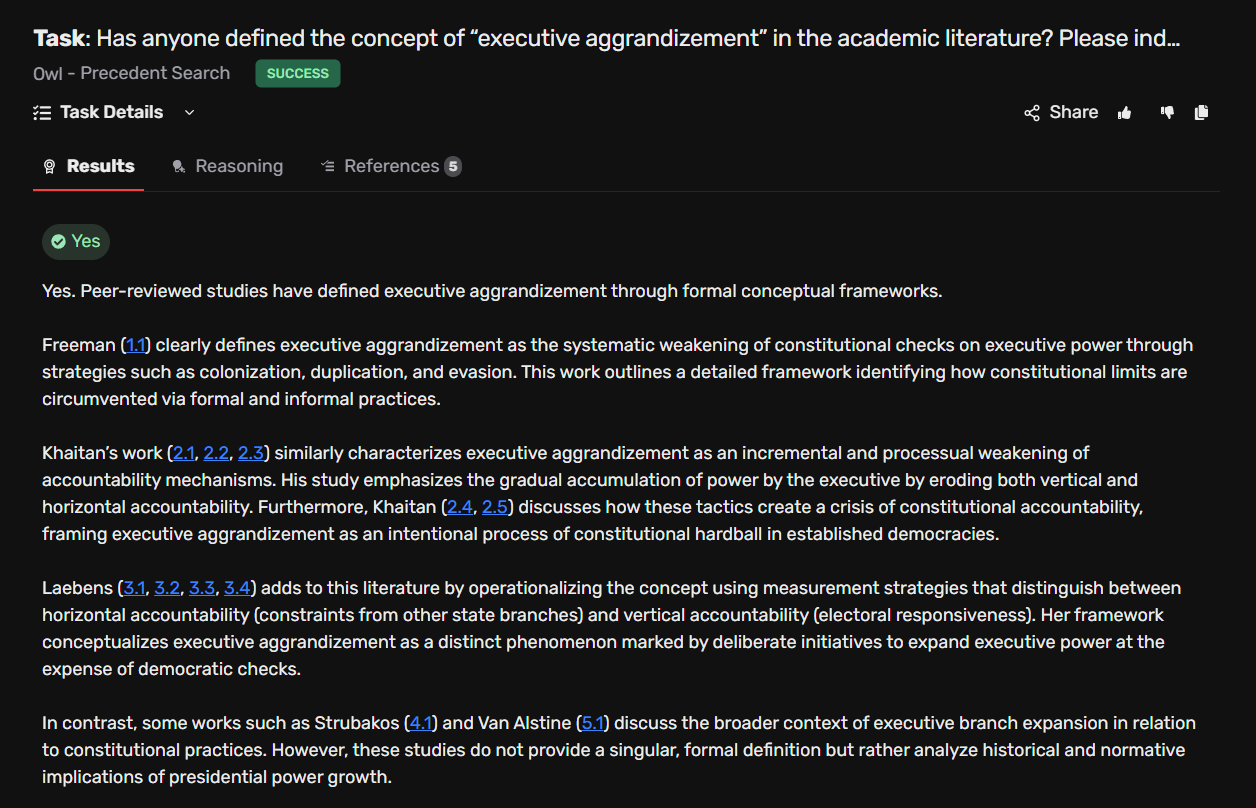

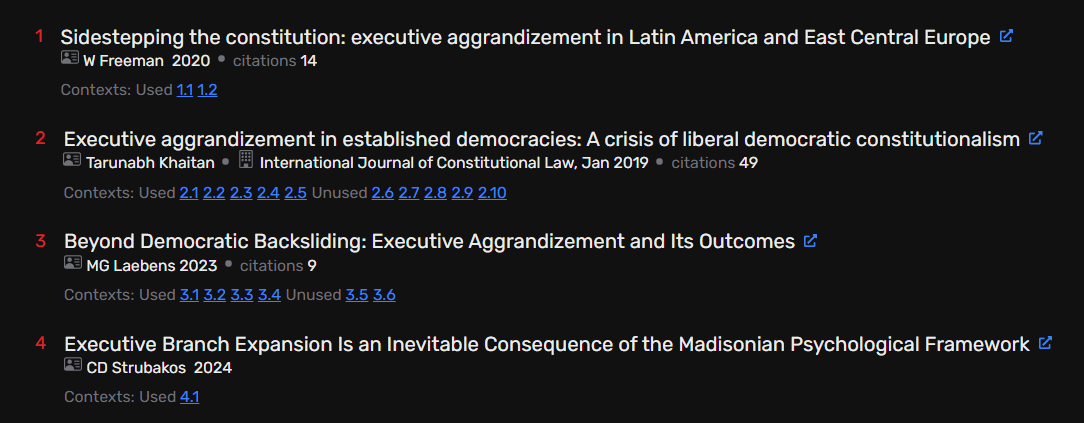

In our second test, we asked Owl whether the concept of "executive aggrandisement" had already been defined in academic literature. This concept has been explicitly formulated and widely discussed in the context of democratic erosion, most notably in Nancy Bermeo's foundational article (2016). Despite the centrality of this article to the relevant literature, Owl failed to retrieve it. Instead, the system returned more recent papers, from 2020 onwards, that mention executive aggrandisement but do not introduce or define the concept. Some of these papers do cite Bermeo's work, yet Owl did not identify her article as the source of the original definition.

This result illustrates a recurring problem: Owl’s concept detection does not reliably surface foundational definitions, even for well-established and frequently cited terms. Moreover, its inability to access or prioritise older but still highly relevant literature may mislead users into believing that a term is either undefined or more recent than it actually is.

Example 3

Our third query focused on "stealth authoritarianism", a widely cited concept in the literature on contemporary autocratisation. The concept was introduced and thoroughly developed by Ozan Varol (2015). Although the article appears among the top results when searching for the term on Google Scholar, Owl failed to retrieve it and asserted that the concept had not yet been formally defined in the academic literature.

This result is particularly concerning. Unlike the previous cases, where the correct article was overlooked but still cited indirectly in the retrieved papers, here Owl failed to retrieve any relevant material at all, despite the concept’s visibility and the accessibility of its defining publication. For researchers relying on such agents to confirm or rule out the existence of a concept, this kind of false-negative result can be misleading and distort subsequent research design.

Taken together with the previous examples, this case underscores a key limitation: Owl appears unable to identify high-impact concepts when their defining publications fall outside the platform’s accessible corpus or indexing logic. At present, even a simple search on Google Scholar remains a more reliable method for determining whether a term has already been defined in the literature.

Recommendations

Our evaluation suggests that the FutureHouse Platform’s Owl agent, while conceptually useful, is currently not suitable for reliably detecting whether a concept has been formally introduced in the academic literature. Across the three tested cases, it either (1) failed to identify the correct original source despite retrieving related material, (2) misattributed the conceptual origin of a widely cited term, or (3) completely missed a well-established and openly available article. These limitations can result in serious research misdirection, especially when users rely on such tools without cross-validating the results.

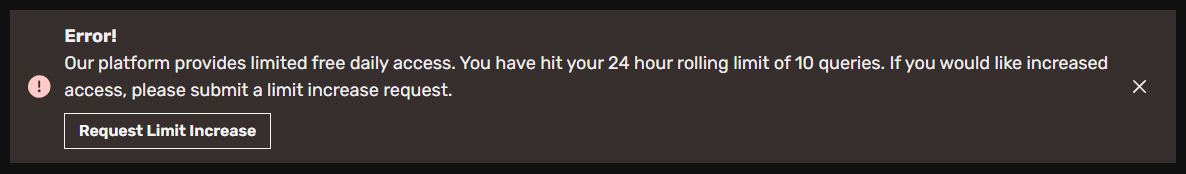

Beyond these accuracy issues, Owl also suffers from practical constraints. The platform currently allows only ten queries per 24-hour period, which limits its use in iterative research workflows. Moreover, more complex conceptual questions often time out, returning no result at all. Until these shortcomings are addressed, we recommend using Owl cautiously for concept validation tasks. For now, standard tools like Google Scholar or targeted searches in academic databases remain more reliable for verifying whether a concept has already been defined.