Image-based classification is increasingly used across biology, ecology, and agriculture—from identifying animal species to detecting plant diseases. One common use case is the analysis of leaf images to distinguish between healthy and diseased plants. In this post, we compare two approaches to classifying strawberry leaves as either fresh (healthy) or scorch (diseased): a prompt-based method using GPT-4o’s vision capabilities, and a scalable API-powered workflow. While the prompt-based solution works well for up to 10 images, it is limited by interface constraints. The API approach, on the other hand, delivered 100% accuracy on a 100-image test set and can be easily extended to much larger datasets.

Prompt-Based Classification: A Simple but Limited Approach

To explore how well a prompt-based approach could handle plant disease classification, we used GPT-4o’s multimodal capabilities directly via the ChatGPT interface. The goal was to determine whether the model could reliably distinguish between fresh (healthy) and scorch (diseased) strawberry leaves based on image input and minimal prompting.

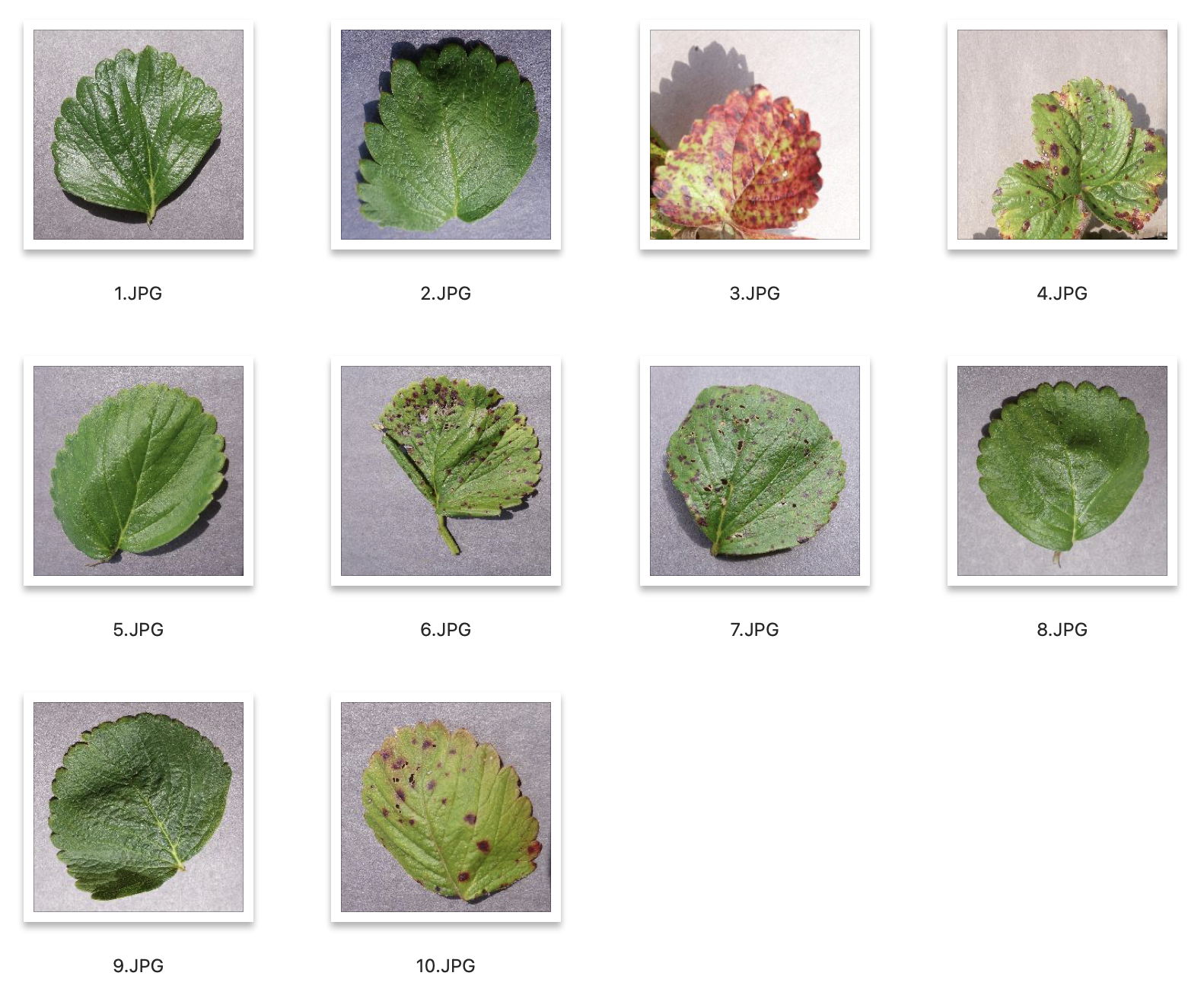

Input files

The input consisted of ten high-resolution images of strawberry leaves, each saved under a simple numeric filename (1.jpg, 2.jpg, ..., 10.jpg). The set included a balanced mix of healthy and visibly scorched leaves to reflect real-world variation. Importantly, the model was not shown any labelled examples or reference classifications beforehand; it received only the classification prompt and the ten unlabelled images. All images were uploaded in a single batch together with the prompt, which instructed the model to return a concise two-column table containing each filename and its label.

Prompt

You are a plant pathology expert. I have uploaded 10 images of strawberry leaves.

For each image, classify the leaf as either fresh (healthy) or scorch (damaged by disease).

Your response must be a two-column table:

Column A: Filename

Column B: Classification (fresh or scorch)

Please respond with only the table, and no additional explanation.

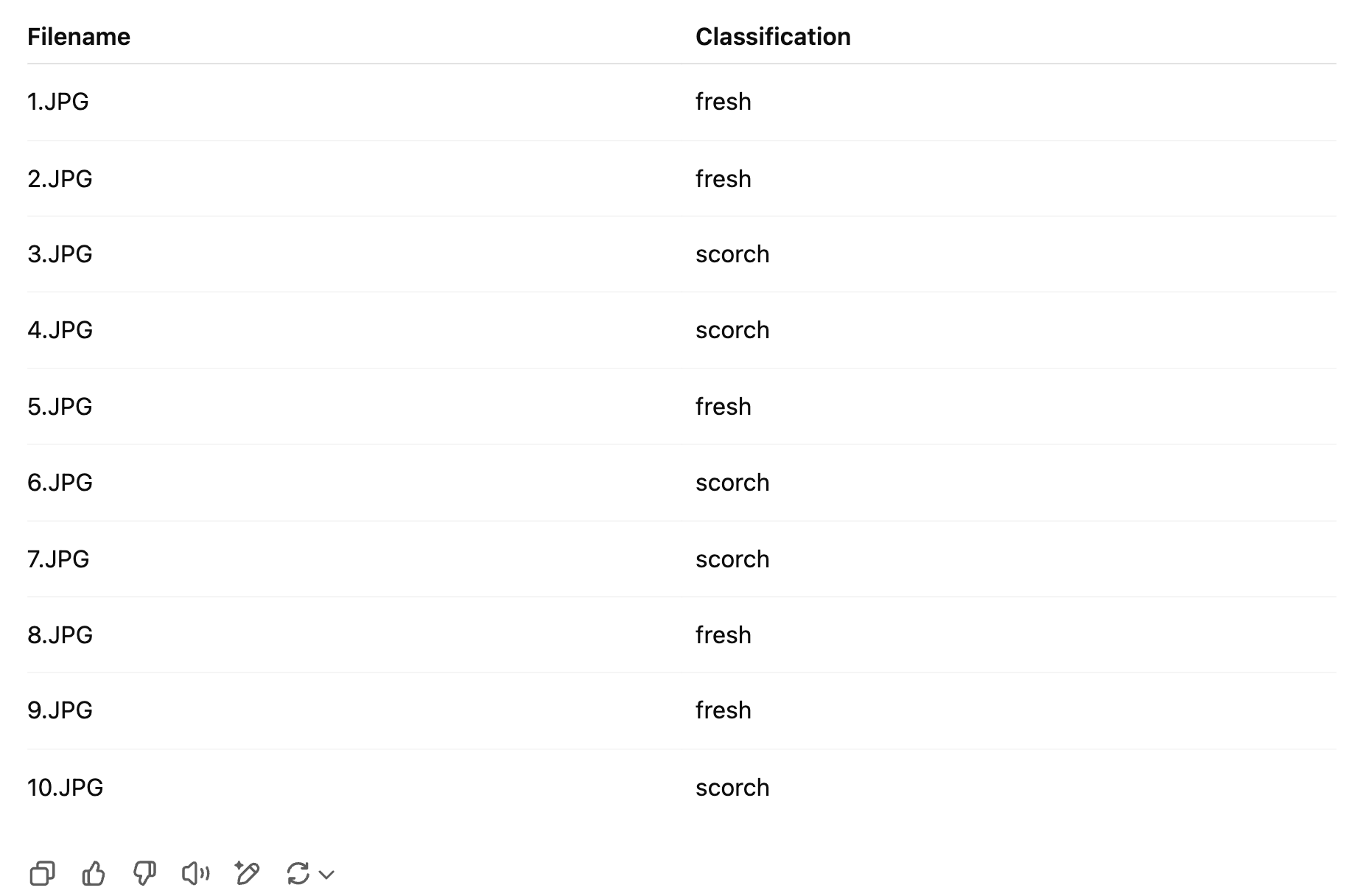

Output

Despite the simplicity of the setup, the prompt-based approach performed remarkably well: the model correctly classified all ten images, including cases where the distinction between fresh and scorch was not immediately obvious. For instance, image 7.jpg showed subtle signs of leaf damage that a non-expert could easily overlook, yet the model correctly identified it as scorch. Similarly, images 2.jpg and 8.jpg showed healthy leaves photographed under uneven lighting, with darker areas that might have been mistaken for damage; the model did not misclassify them and correctly labelled both as fresh.
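Checking the chat reply against known labels is easier if the returned table is turned into data rather than read by eye. The sketch below is a hypothetical helper, not something used in the original experiment; it assumes the model returns a Markdown table with rows such as `| 1.jpg | fresh |`, and the function name `parse_label_table` is illustrative.

```python
import re


# Hypothetical helper (not part of the original workflow): converts the
# two-column Markdown table returned in the chat window into a
# {filename: label} dict. Header and separator rows are skipped because they
# do not contain a numeric .jpg filename together with a valid label.
def parse_label_table(table_text: str) -> dict[str, str]:
    labels: dict[str, str] = {}
    for line in table_text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != 2:
            continue  # not a two-column row
        filename, label = cells[0], cells[1].lower()
        if re.fullmatch(r"\d+\.jpg", filename) and label in {"fresh", "scorch"}:
            labels[filename] = label
    return labels
```

The resulting dictionary can then be compared entry by entry with the known labels for the ten files.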

Limitations

This outcome suggests that GPT-4o’s visual reasoning is robust on this task even without example-based guidance, although ten images is a small sample. The main drawback is the interface itself: at most ten images can be uploaded per session, and the process cannot be automated or repeated at scale without manual intervention. For larger datasets or production workflows, an API-based solution is essential.

Scalable Classification with the OpenAI API

To overcome the limitations of prompt-based classification, we built an automated pipeline using the OpenAI API and the gpt-4o model, which allowed us to scale the task well beyond the 10-image limit of the web interface. A short Python script in Google Colab reads the images from a ZIP archive, sends them to the model one by one, and records the results in an Excel file.

Input file

The ZIP archive contained 100 images of strawberry leaves, a balanced sample of fresh and scorch examples that we curated from a Kaggle dataset. We kept the filename format consistent (1.jpg, 2.jpg, ..., 100.jpg) to simplify processing and comparison with earlier results. Each image was sent to the gpt-4o model with an instruction adapted from the prompt-based experiment: classify the leaf as either fresh or scorch, and return only the label.
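The extraction step can be sketched with Python's standard library. This is an illustrative helper, assuming the archive holds JPEG files named by number (possibly inside a subfolder); the function name `extract_images` is hypothetical. Sorting by the numeric part of the filename keeps 1.jpg to 100.jpg in order, which a plain string sort would not.

```python
import zipfile
from pathlib import Path


# Hypothetical helper: unpack the archive and return image paths in numeric
# order (1.jpg, 2.jpg, ..., 100.jpg). A plain sorted() on names would give
# 1.jpg, 10.jpg, 100.jpg, 11.jpg, ... because it compares strings.
def extract_images(zip_path: str, out_dir: str) -> list[Path]:
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(out_dir)
    # Keep only files whose stem is a number; this also skips metadata files
    # such as "__MACOSX/._1.jpg" that some archivers add.
    images = [p for p in Path(out_dir).rglob("*.jpg") if p.stem.isdigit()]
    return sorted(images, key=lambda p: int(p.stem))
```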

Script

The API-based solution automates the entire classification process. After extracting the ZIP archive, the script loops through each file, encodes the image in base64, and sends it to the gpt-4o model via the OpenAI API. Each request includes a fixed prompt asking the model to return only one word: fresh or scorch. The model’s response is parsed to extract the label, which is stored alongside the filename. Once all images are processed, the results are saved in a two-column Excel file.
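The per-image request described above might look roughly like the sketch below. It is not the authors' script, which is not reproduced here. It assumes the official `openai` Python package (v1 or later) and its `chat.completions.create` method. The one-word prompt wording, `temperature=0`, and the helper names `encode_image`, `parse_label`, and `classify_all` are illustrative choices. The client is passed in as an argument so the helpers can be exercised without an API key.

```python
import base64
from pathlib import Path

# Assumed per-image prompt, adapted from the batch prompt; the exact wording
# used in the original script is not given in the text.
PROMPT = (
    "You are a plant pathology expert. Classify this strawberry leaf as either "
    "fresh (healthy) or scorch (damaged by disease). "
    "Reply with one word only: fresh or scorch."
)


def encode_image(path: Path) -> str:
    """Return the JPEG file as a base64 data URL for the image_url content part."""
    b64 = base64.b64encode(path.read_bytes()).decode("ascii")
    return f"data:image/jpeg;base64,{b64}"


def parse_label(reply: str) -> str:
    """Map the model's reply to 'fresh' or 'scorch'; ambiguous replies become 'unknown'."""
    text = reply.lower()
    has_fresh, has_scorch = "fresh" in text, "scorch" in text
    if has_fresh != has_scorch:  # exactly one of the two labels appears
        return "fresh" if has_fresh else "scorch"
    return "unknown"


def classify_image(client, path: Path, model: str = "gpt-4o") -> str:
    """Send one image with the fixed prompt and return the parsed label."""
    response = client.chat.completions.create(
        model=model,
        temperature=0,  # assumption: reduces run-to-run variation
        messages=[{
            "role": "user",
            "content": [
                {"type": "text", "text": PROMPT},
                {"type": "image_url", "image_url": {"url": encode_image(path)}},
            ],
        }],
    )
    return parse_label(response.choices[0].message.content or "")


def classify_all(client, paths: list[Path]) -> list[dict[str, str]]:
    """Classify each image in turn and return two-column result rows."""
    return [{"Filename": p.name, "Classification": classify_image(client, p)} for p in paths]
```

With an API key set in the `OPENAI_API_KEY` environment variable, `client = OpenAI()` (after `from openai import OpenAI`) creates a real client. Writing the rows with pandas, for example `pd.DataFrame(rows).to_excel("results.xlsx", index=False)`, would produce a two-column Excel file; `to_excel` needs the `openpyxl` package, and the filename here is hypothetical.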

Output

The results were striking: the model classified all 100 images correctly (100% accuracy), identifying even subtle cases of leaf damage while avoiding false positives on healthy leaves affected by shadow or uneven lighting.
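An accuracy figure like this requires ground-truth labels for every image. The scoring sketch below assumes the true and predicted labels are held in two dictionaries keyed by filename; the `evaluate` name and the dictionary layout are hypothetical. It also counts false positives, meaning healthy leaves predicted as scorch, and false negatives, meaning scorched leaves predicted as fresh.

```python
from collections import Counter


# Hypothetical scoring helper: counts each (true, predicted) label pair and
# derives accuracy plus false positives (fresh predicted as scorch) and
# false negatives (scorch predicted as fresh).
def evaluate(truth: dict[str, str], predicted: dict[str, str]) -> dict:
    if not truth:
        raise ValueError("no ground-truth labels to evaluate against")
    # Files missing from the predictions are counted as wrong.
    pairs = Counter((truth[name], predicted.get(name, "missing")) for name in truth)
    correct = pairs[("fresh", "fresh")] + pairs[("scorch", "scorch")]
    return {
        "accuracy": correct / len(truth),
        "false_positives": pairs[("fresh", "scorch")],
        "false_negatives": pairs[("scorch", "fresh")],
        "confusion": dict(pairs),
    }
```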

Beyond accuracy, the API solution offers a major advantage: scalability. The same script can process hundreds or thousands of images in a single run, subject to API rate limits and cost, and could be integrated into larger diagnostic systems or digital farming tools. It also supports reproducibility, since the input, model, prompt, and output handling are all controlled programmatically, which makes it well suited to research, monitoring, and high-volume labelling tasks.
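When a run covers thousands of images, a single network error or rate-limit response should not force a restart from scratch. One way to handle this is sketched below as a hypothetical extension rather than part of the authors' script. Each label is appended to a CSV checkpoint as soon as it is produced, and files that are already labelled are skipped on the next run. The `classify` argument stands for any function that takes an image path and returns a label. The names `run_batch` and `checkpoint` are illustrative.

```python
import csv
from pathlib import Path
from typing import Callable, Iterable


# Hypothetical resumable batch runner: progress is written to a CSV after every
# image, so an interrupted run can be restarted without re-sending images that
# were already classified.
def run_batch(
    paths: Iterable[Path],
    classify: Callable[[Path], str],
    checkpoint: Path,
) -> dict[str, str]:
    done: dict[str, str] = {}
    if checkpoint.exists():
        with checkpoint.open(newline="") as f:
            done = {row["Filename"]: row["Classification"] for row in csv.DictReader(f)}
    # Write the header only when starting a fresh (missing or empty) file.
    needs_header = not checkpoint.exists() or checkpoint.stat().st_size == 0
    with checkpoint.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["Filename", "Classification"])
        if needs_header:
            writer.writeheader()
        for path in paths:
            if path.name in done:
                continue  # already labelled in an earlier run
            label = classify(path)
            writer.writerow({"Filename": path.name, "Classification": label})
            f.flush()  # persist progress after every image
            done[path.name] = label
    return done
```

The finished checkpoint holds the same two columns as the Excel output, so it can be converted once the run completes.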

Recommendations

For small-scale tasks or quick experimentation, the prompt-based approach offers a fast and user-friendly way to classify plant images—no coding required, and surprisingly accurate within the 10-image limit. However, for larger datasets or production use, we strongly recommend switching to the API-based workflow. It not only removes interface constraints but also enables full automation, reproducibility, and scalability. When integrated into a simple script, GPT-4o delivers reliable, high-quality classifications that can support advanced plant monitoring systems or accelerate dataset annotation workflows.

The authors used GPT-4o [OpenAI (2025), GPT-4o (accessed on 28 May 2025), Large language model (LLM), available at: https://openai.com] to generate the output.