As scientific research has become increasingly data-intensive and fragmented across disciplines, the limitations of traditional research workflows have become more apparent. In response to these structural challenges, FutureHouse, a nonprofit backed by Eric Schmidt, launched a platform in May 2025 featuring four specialised AI agents. Designed to support literature analysis, hypothesis development, experimental design, and the identification of prior work, the platform aims to augment core elements of the scientific process. This blog post focuses on one of the agents available on the platform: Crow, a general-purpose agent designed to retrieve and synthesise information from scientific literature.

The FutureHouse Platform: Domain-Specific Agents for Scientific Research

The FutureHouse Platform was launched with the explicit goal of supporting researchers through a suite of AI agents purpose-built for scientific applications. Unlike general-purpose language models, these agents were developed to operate over full-text scholarly papers, assess methodological quality, and interact with domain-specific databases. Each agent was designed to address a distinct task in the research pipeline:

- Crow serves as a general-purpose agent that answers scientific questions by retrieving and synthesising content from full-text literature. Optimised for integration via API, it prioritises high-quality sources and concise scholarly output.

- Falcon is designed for in-depth literature reviews. With access to specialised databases such as OpenTargets, Falcon can retrieve and synthesise scientific information at scale, offering structured insight into large, complex research areas.

- Owl (formerly known as HasAnyone) detects whether a specific experiment or study has previously been conducted. This helps researchers avoid duplication and locate precedents, even when the terminology used in prior work differs.

- Phoenix supports experimental chemistry by suggesting synthesis pathways, evaluating compound properties, and interfacing with tools for planning laboratory workflows. While still considered experimental, Phoenix showcases the potential for AI in computational chemistry and drug discovery.

The platform is accessible via both web interface and API, enabling integration into automated research workflows, from literature monitoring to hypothesis screening and experiment planning.

Testing the Crow - Concise Search agent

Example 1

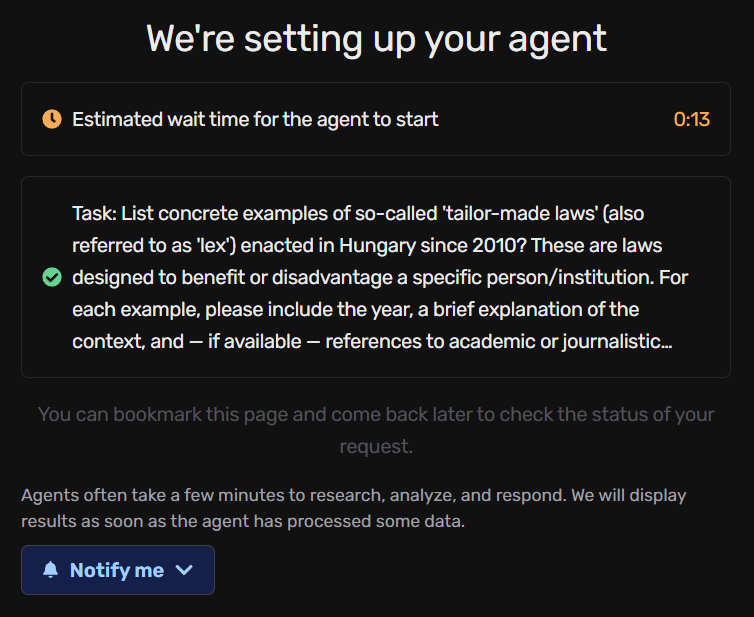

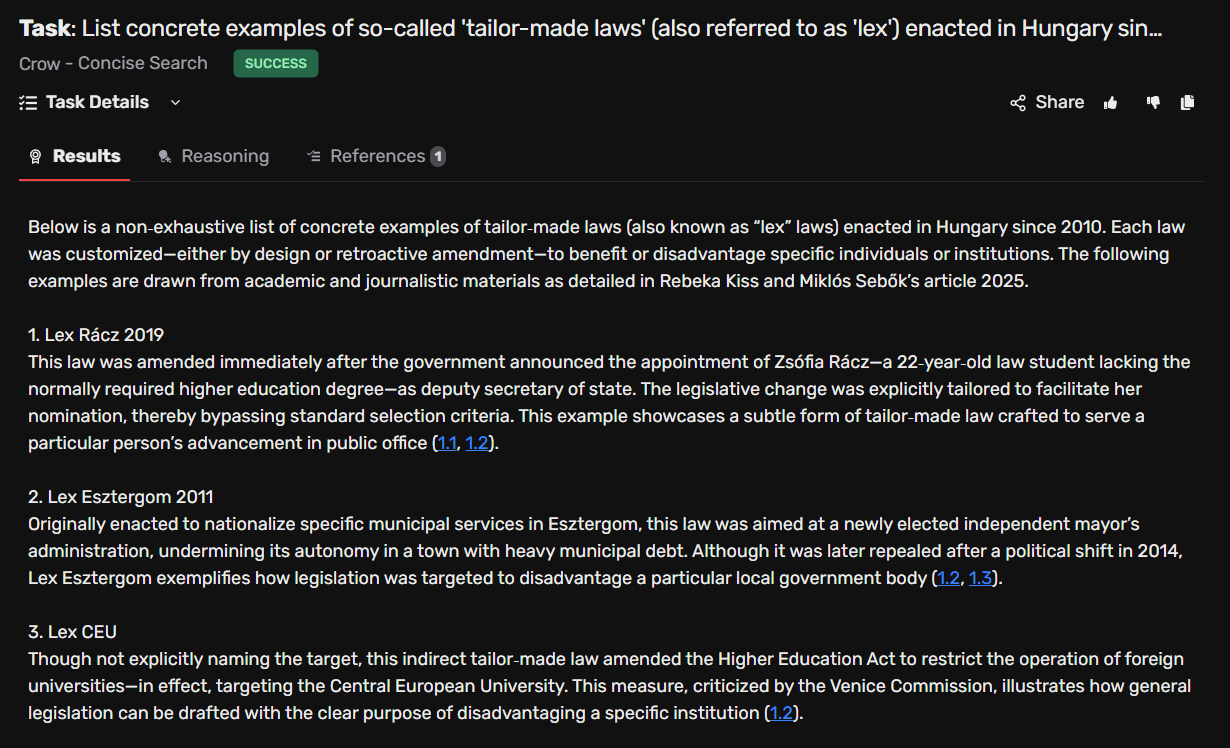

To test the practical utility of the Crow agent for empirical legal-political research, we submitted a focused question on a well-defined topic: "List concrete examples of so-called 'tailor-made laws' (also referred to as 'lex' laws) enacted in Hungary since 2010. These are laws designed to benefit or disadvantage a specific person, institution, company, or case. For each example, please include the year, a brief explanation of the context, and — if available — references to academic or journalistic sources." This task required the agent to identify legislative cases that fit a specific typology (tailor-made laws) in a particular jurisdiction (Hungary) over a delimited time period (2010 onwards), and to ground its answer in verifiable sources.

After we submitted our research question on tailor-made laws in Hungary, the FutureHouse platform began preparing the Crow agent for deployment. The interface informed us that the agent would need several minutes to research, analyse, and produce a response, a delay consistent with the platform’s promise of depth over speed.

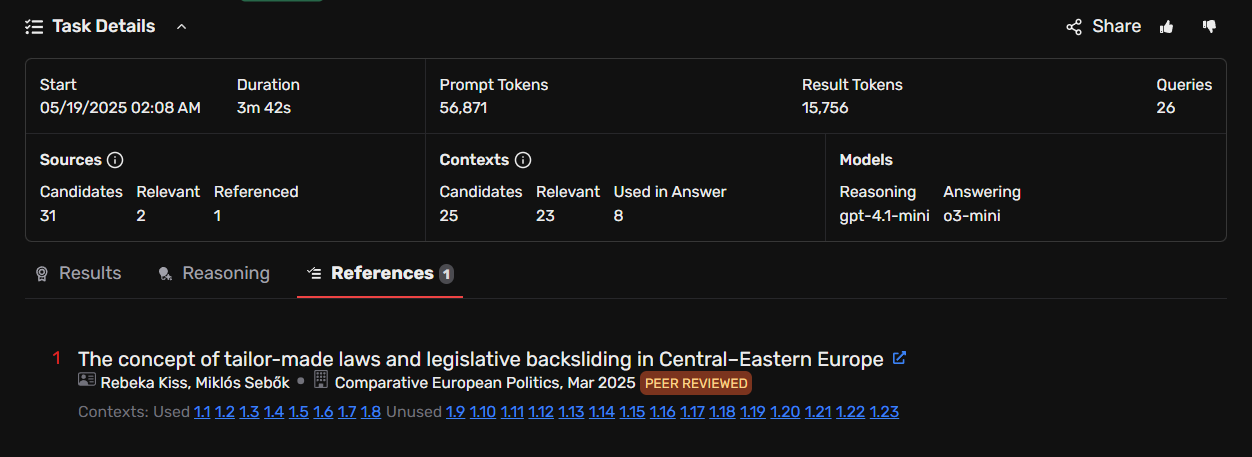

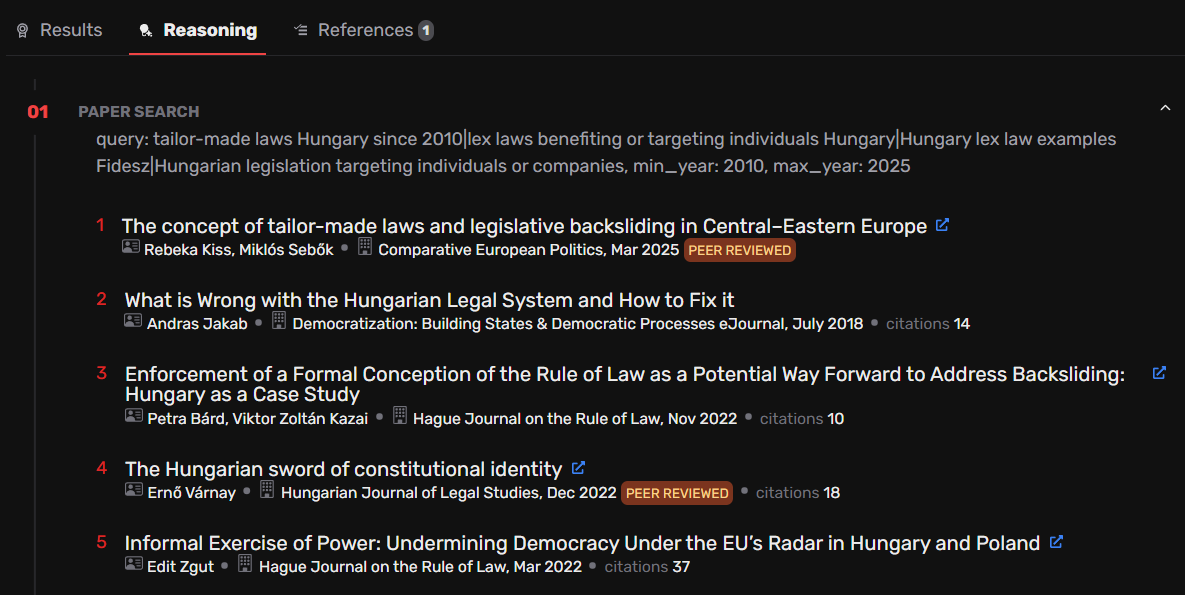

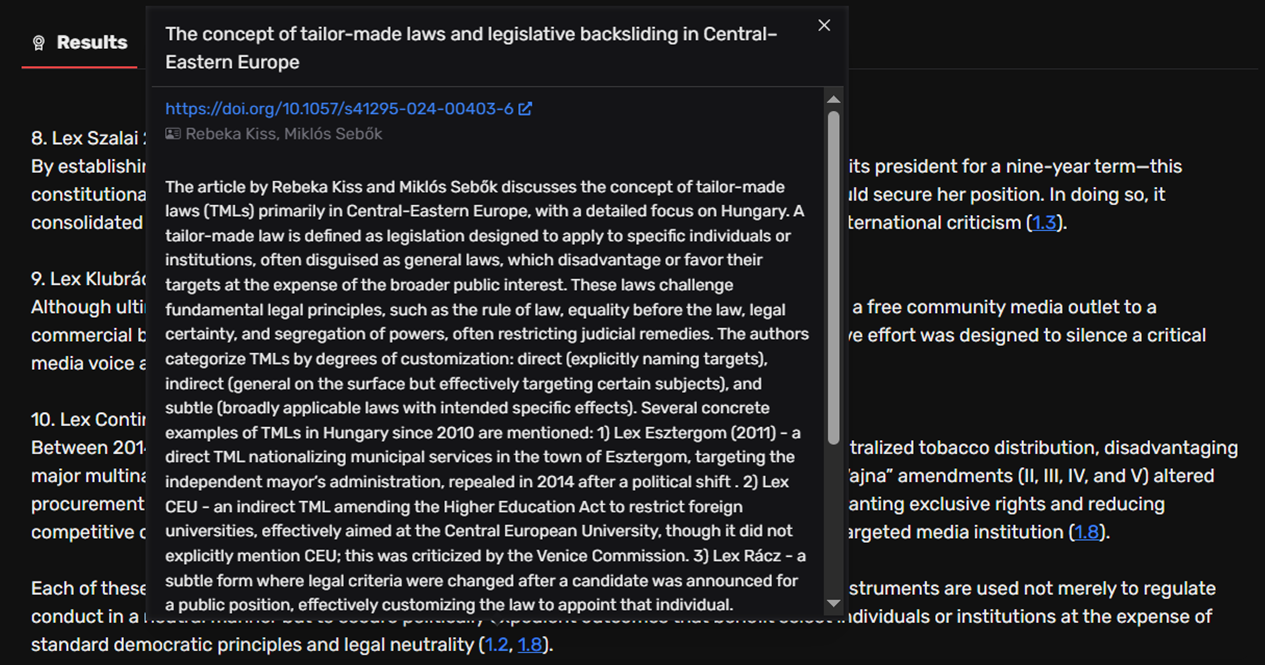

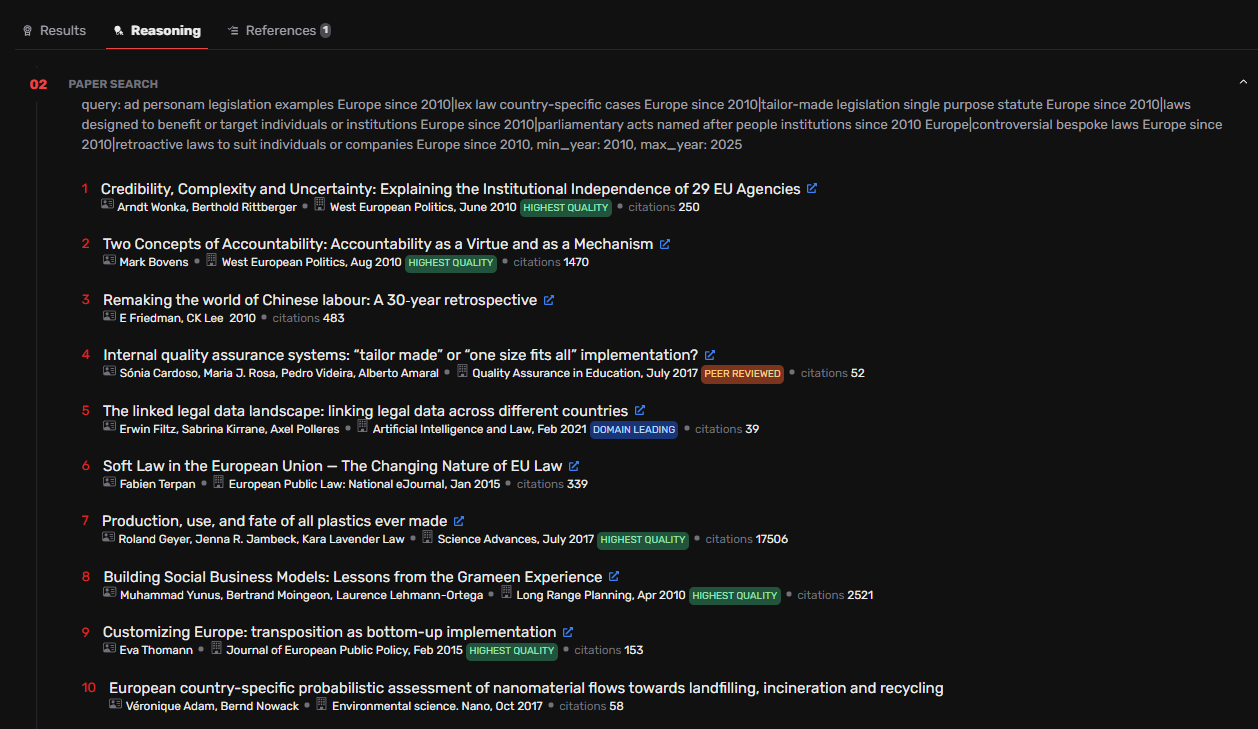

Within approximately four minutes, the agent returned a complete answer. Behind the scenes, the system had executed a detailed literature search, as visualised in the Reasoning tab. The search surfaced multiple peer-reviewed articles and working papers on Hungarian constitutional developments, the rule of law, and democratic and legislative backsliding. However, as shown in the References panel, Crow ultimately relied on a single source to build its final answer: our own recent peer-reviewed article published in Comparative European Politics.

The examples returned by Crow were not only clearly structured and relevant but also factually accurate. Each listed case (e.g. Lex Rácz, Lex Esztergom, Lex CEU) matched the definition of a tailor-made law specified in the original query. The legislative intent and affected actor(s) were briefly outlined, and each entry showed how a legal change was used to benefit or disadvantage a specific target.

Example 2

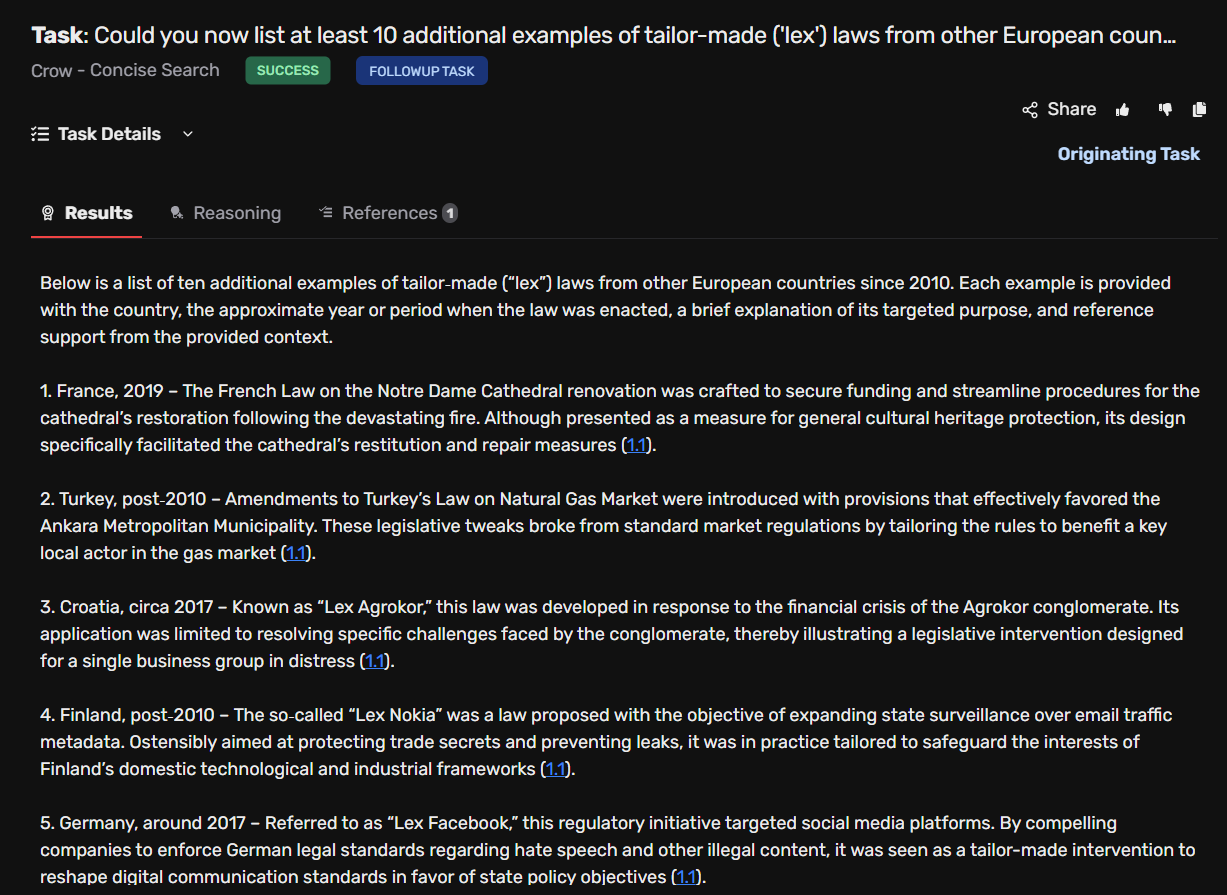

In a follow-up task, we asked the agent to list at least 10 examples of tailor-made (‘lex’) laws from other European countries since 2010. (Only one follow-up is allowed per chain: the platform does not support chained follow-ups beyond two steps, so the search could not be extended further without returning to the original query.) As before, we requested basic contextualisation and source references. Although the agent returned a list of ten diverse examples (e.g. Lex Facebook, Lex Agrokor, Lex Dragnea), the response revealed notable limitations.

Crow’s internal paper search surfaced a range of unrelated publications, including papers on plastic waste, nanomaterials, and education quality systems, while failing to retrieve our previously used article. This suggests an inconsistency in source prioritisation across sessions.

Nevertheless, the examples listed were drawn entirely from our own article, even though the publication includes a literature review that cites multiple other studies discussing similar laws. Crow did not incorporate any of these cited works, nor did it identify new examples beyond those explicitly listed in our paper.

Example 3

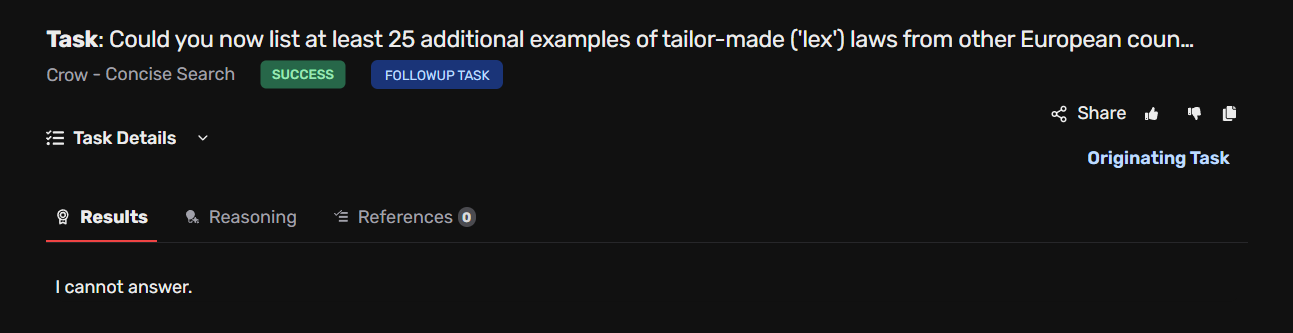

After reaching the limit of two chained steps in our previous session, we returned to the original task to initiate a new follow-up. This time, we expanded the request, asking Crow to list at least 25 additional examples of tailor-made (‘lex’) laws from other European countries since 2010.

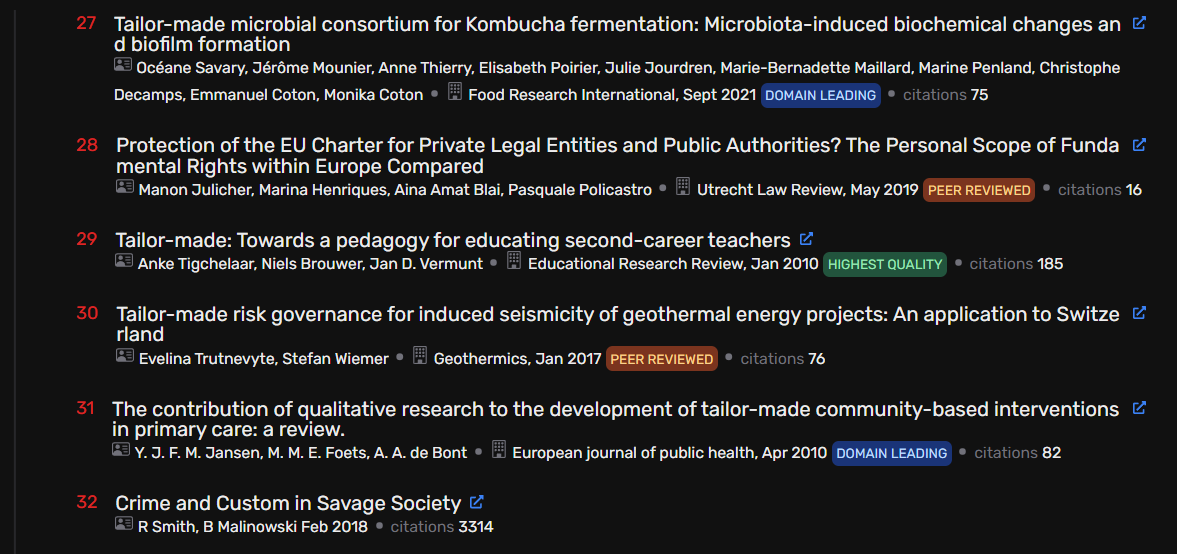

Although the prompt was accepted and recognised as a follow-up to the original Hungarian query, the agent returned no substantive answer, responding only with: “I cannot answer.” Even more telling is the list of references generated in this session. Instead of drawing on legal or political science literature, Crow returned an entirely irrelevant set of papers, seemingly selected only because they contained the phrase “tailor-made”, ranging from microbiome studies to geothermal risk governance.

Recommendations

Our initial tests suggest that Crow performs well in narrowly scoped, citation-based tasks, especially when suitable high-quality sources are available. In Example 1, the agent accurately identified Hungarian "tailor-made laws" and linked each to specific sections of a relevant peer-reviewed article. However, Crow struggled with broader or exploratory queries. In Example 2, it repeated examples from our own publication without incorporating related studies cited in that same article. In Example 3, it returned no substantive answer and listed irrelevant sources based only on keyword matches.

Overall, Crow may be useful for precise, source-grounded retrieval, but its value for discovery-driven or cross-source synthesis is currently limited. Future posts will explore the platform’s other agents — Falcon, Owl, and Phoenix — to assess their capabilities across different stages of the research pipeline.

The authors used the Crow agent via FutureHouse Platform [FutureHouse AI (2025), Crow (accessed on 17 May 2025), General-purpose agent, available at: https://www.futurehouse.org/] to generate the output.