We tested the new OpenAI Agent to assess its usefulness for academic research tasks, comparing it directly with Manus.ai and Perplexity’s research mode. Our aim was to evaluate how effectively each tool finds relevant scholarly and policy sources, navigates restricted websites (including captchas and Cloudflare protections), and allows researchers to intervene when access issues arise. While the OpenAI Agent offers a sleek, browser-based interface, it largely replicates the functionality of Manus.ai without notable improvements. Crucially, it does not alert users when content is blocked and often skips entire sources without notice, whereas Manus.ai flags the block and allows manual override. In our view, the OpenAI Agent is neither worse nor better than existing alternatives and offers no clear advantage for research-driven use cases.

Prompt

We used the same structured prompt across all three platforms, designed to simulate a typical academic query: locating relevant studies and policy papers on a well-defined research topic. The prompt required the agents to identify scholarly sources, summarise their content, and provide links for verification. This allowed us to assess not only the quality and relevance of the results, but also how each tool handles source attribution, depth of understanding, and access to academic material.

Please provide a detailed summary of how the European Union’s Better Regulation framework addresses the role and necessity of explanatory memoranda in its official documents.

Your response should be based on both official EU sources (such as the Better Regulation Guidelines, Toolbox, and relevant communications from the European Commission) and academic literature, especially when discussing any limitations, criticisms, or conceptual debates about the function and quality of explanatory memoranda.

Structure your response as follows:

- General Principles – What overarching goals or principles (e.g. transparency, accountability, evidence-based policymaking) justify the need for explanatory memoranda according to the Better Regulation agenda?

- Formal Requirements – What specific procedural or legal requirements mandate the inclusion of explanatory memoranda in the legislative process? Please refer to relevant Better Regulation tools and interinstitutional agreements.

- Institutional Responsibility – Which institutions are responsible for ensuring the presence and quality of explanatory memoranda (e.g. European Commission, European Parliament, Council)?

- Quality and Clarity Standards – What guidance or criteria are provided regarding the clarity, content, and accessibility of explanatory memoranda?

- Critiques or Limitations – What recognised limitations, shortcomings, or academic criticisms exist regarding how explanatory memoranda are currently used or implemented under the Better Regulation framework?

OpenAI Agent's Output

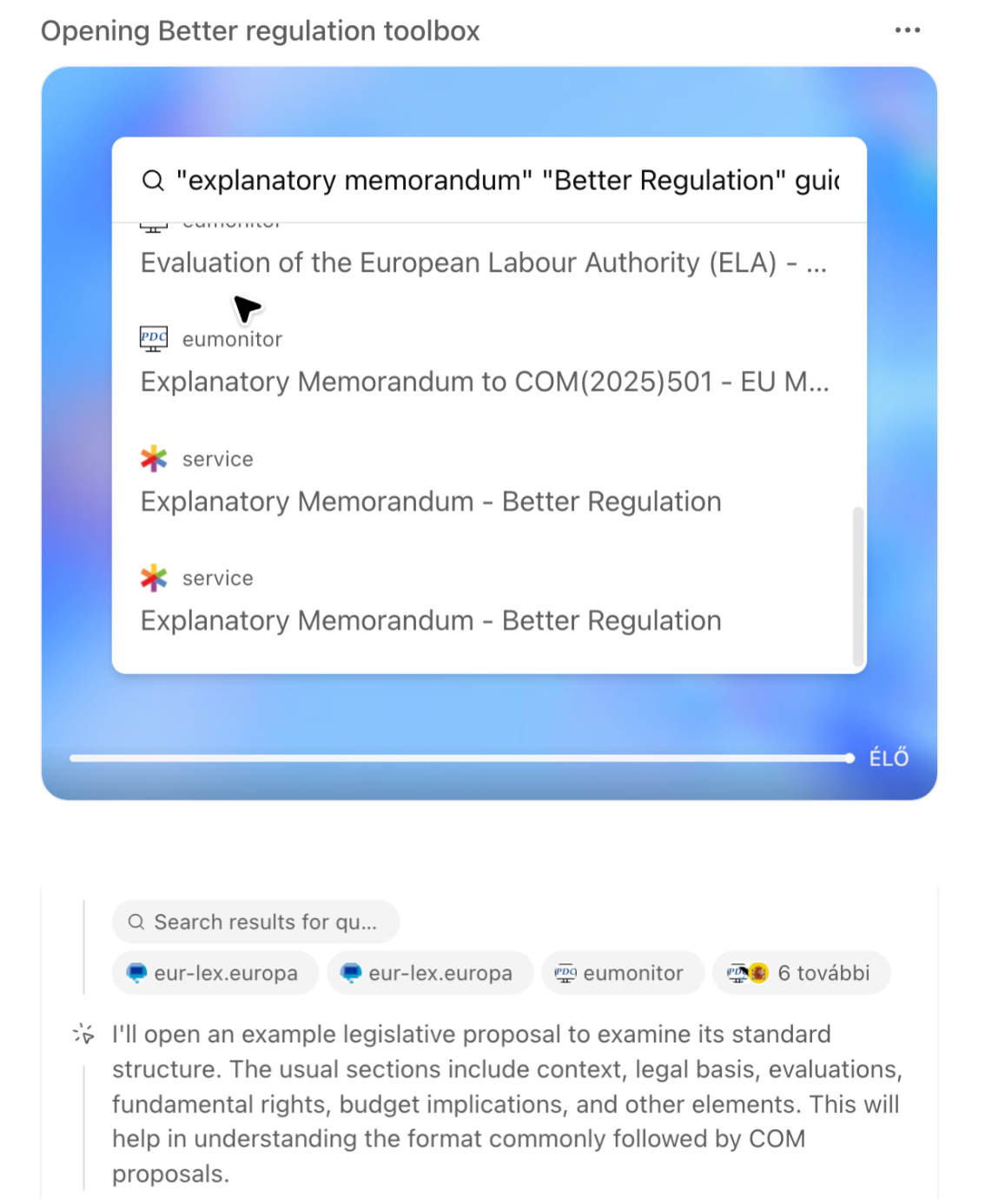

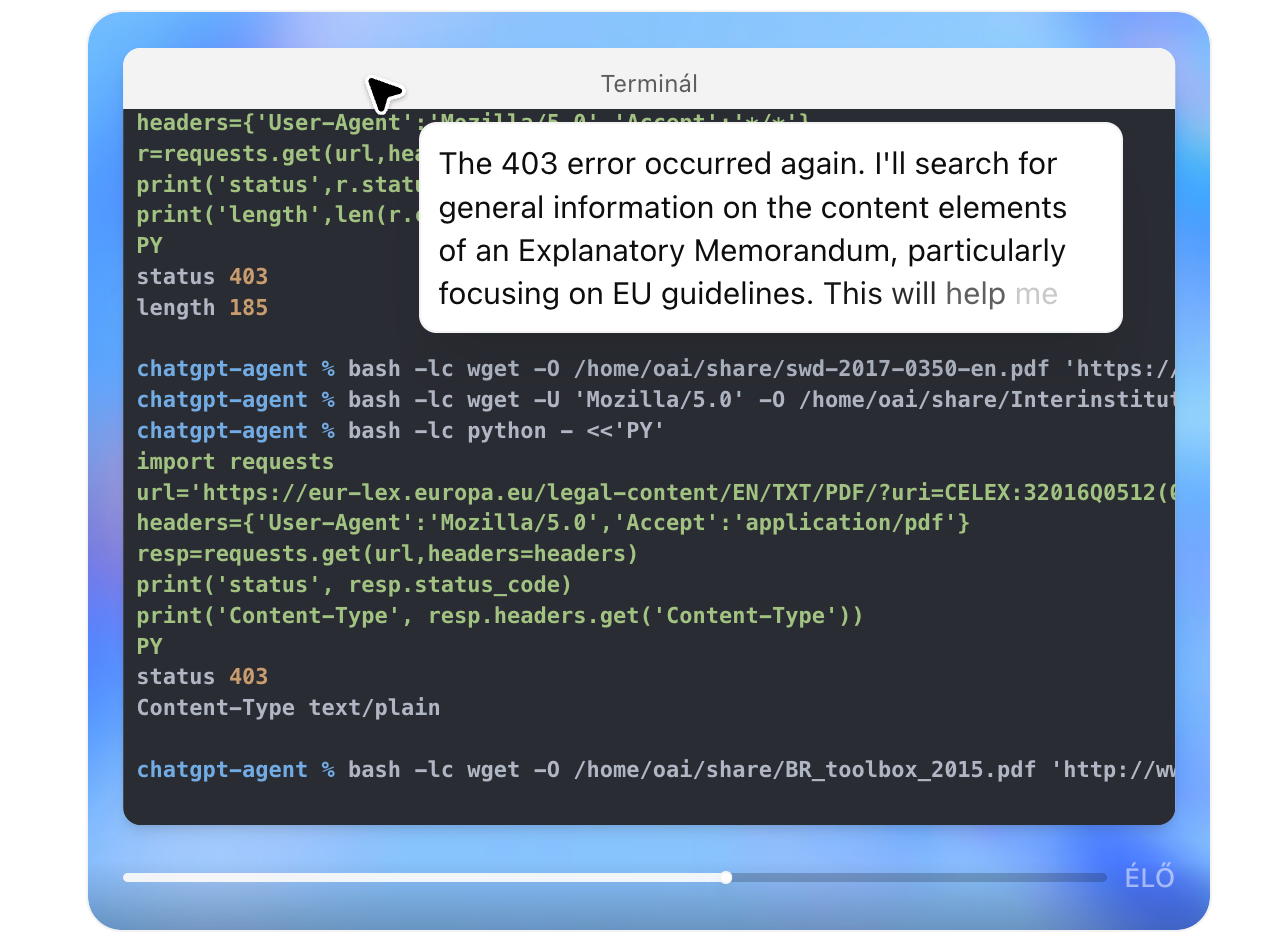

The OpenAI Agent interface mirrors the structure and logic of tools like Manus.ai: it opens a small embedded browser, performs web searches, navigates pages, downloads documents, and displays a real-time terminal log of its actions. From the user’s perspective, it creates the impression of watching an autonomous assistant work through the steps of a research query, just as in Manus.ai. What sets it apart within the ChatGPT environment is the ability to switch between two distinct views: a browser-like visual interface, where users can follow the agent’s navigation and page rendering, and a background task view, which logs every command and HTTP request in terminal format. This resembles the Deep Research mode in ChatGPT and gives greater transparency into what the agent is doing behind the scenes, including which links it tries to open, what type of content it retrieves, and how it structures its search strategy. For researchers, this dual-view system is particularly valuable for assessing the credibility, traceability, and scope of retrieved sources.

During testing, the OpenAI Agent frequently encountered 403 errors and captcha blocks, particularly on official EU websites. Unlike Manus.ai, it neither alerts the user nor prompts for manual intervention; it simply skips over blocked content without notice. While the terminal log reveals these failed attempts, the lack of fallback options limits its reliability for research tasks that require access to protected or dynamically loaded sources.

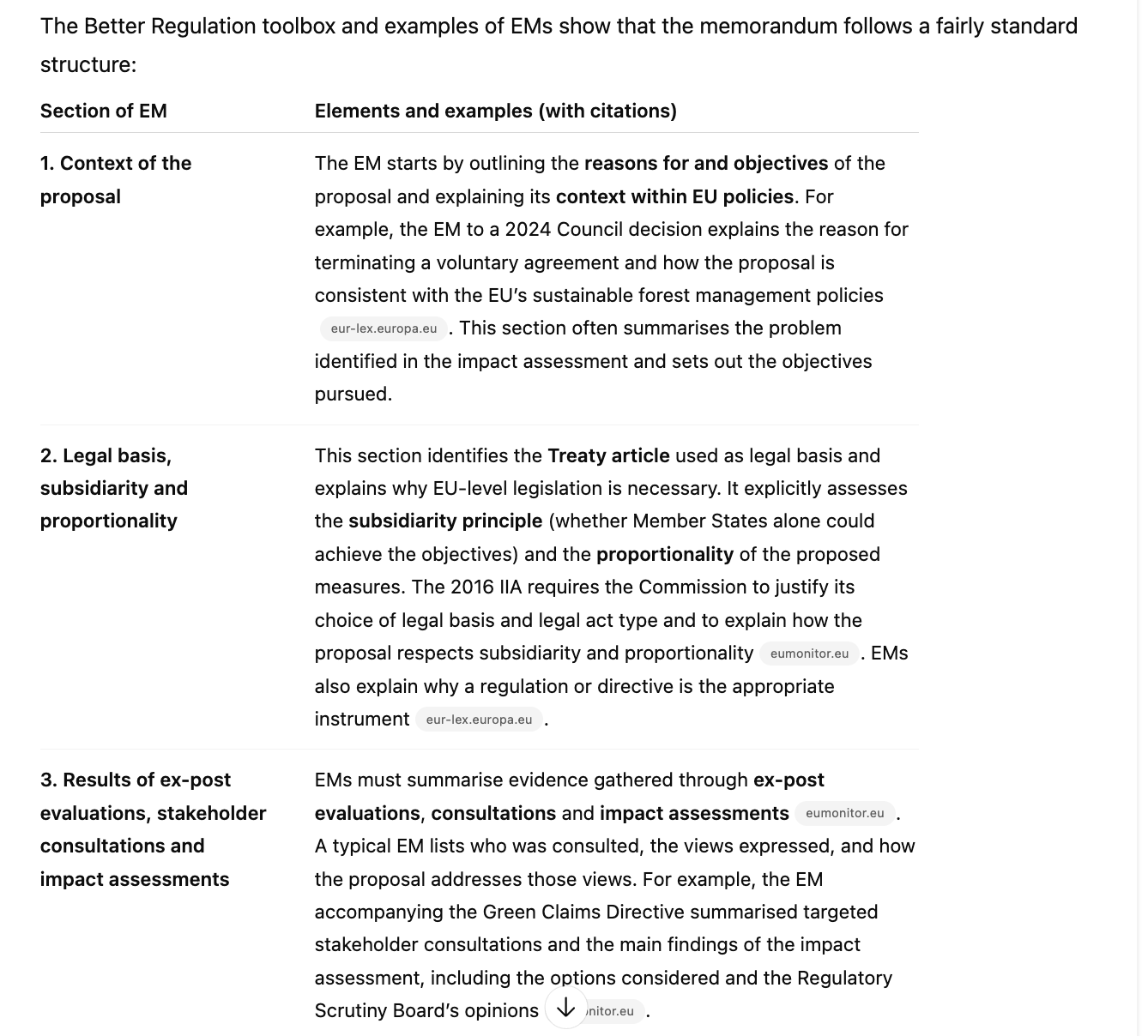

The final output generated by the OpenAI Agent was well-structured and aligned with the prompt, clearly outlining the key elements of explanatory memoranda within the EU’s Better Regulation framework. The Agent successfully identified and summarised relevant EU-level documents, but beyond those it relied on a single academic source that was only marginally relevant to our specific focus. No further scholarly literature was included, even though several suitable studies are available.

How Manus.ai Performed

Manus.ai uses a browser-based interface that closely resembles the one now adopted by the OpenAI Agent. However, it offers a more robust experience when dealing with restricted or protected content. When it encounters captchas or access blocks, Manus.ai actively notifies the user and allows immediate manual intervention, for example solving a captcha directly. The OpenAI Agent interface technically allows users to take control in similar situations, but it issues no notification or prompt for action and often skips blocked content silently. This distinction proved crucial in our test: Manus.ai successfully retrieved sources that were inaccessible to the OpenAI Agent. It also identified multiple relevant academic studies, including critical perspectives on the Better Regulation agenda, giving it broader and more relevant coverage for our research task.

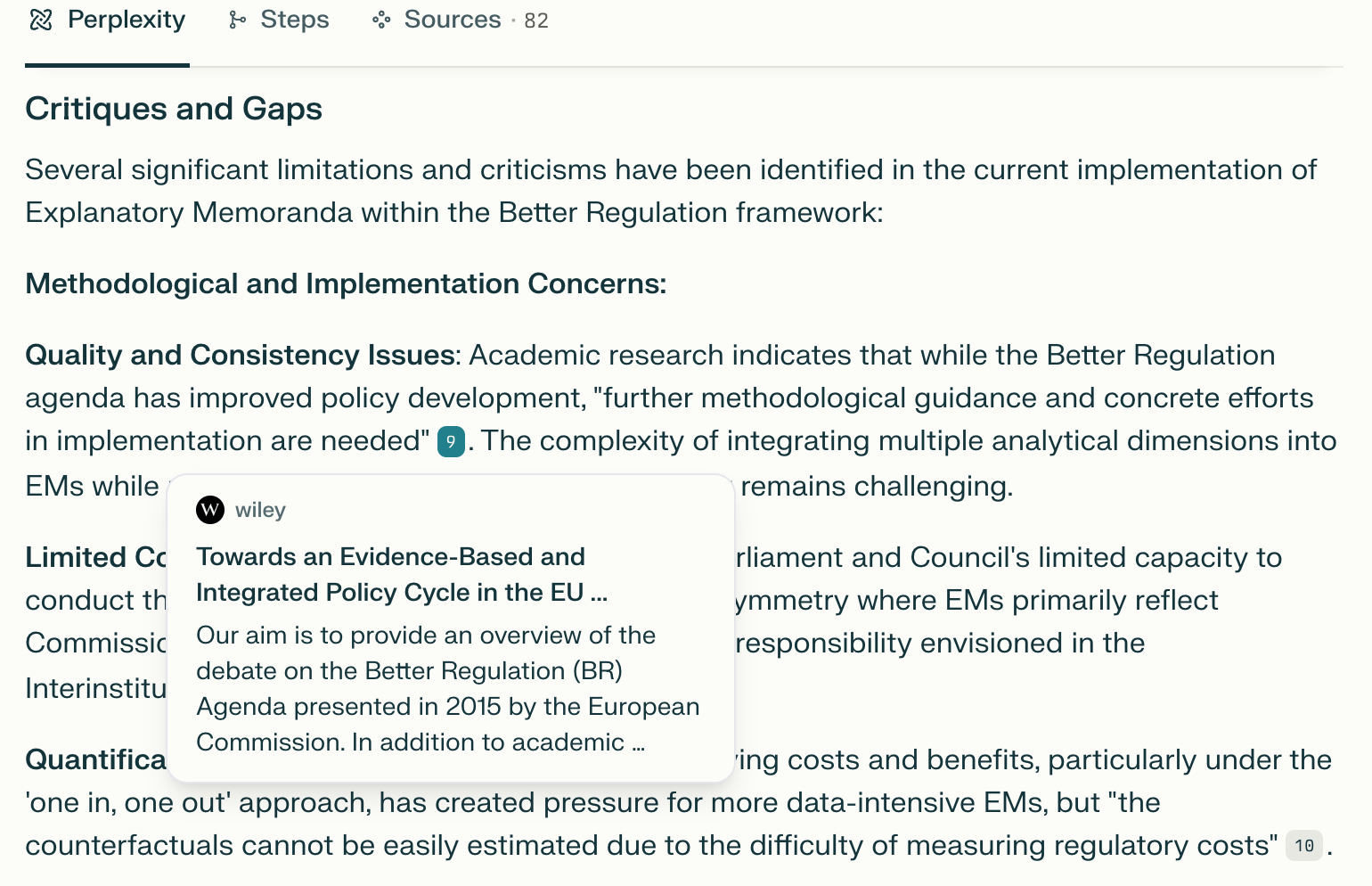

Perplexity’s Research Mode

Perplexity’s research mode delivered fast and highly relevant results. Unlike the OpenAI Agent, which relied on a single marginally relevant academic source, and Manus.ai, which required user intervention, Perplexity quickly surfaced the same academic studies and policy sources we had previously identified manually through Google Search and Google Scholar. Its output included not only official EU documents but also peer-reviewed literature on methodological gaps and implementation critiques within the Better Regulation framework, matching the research focus of our query exactly. Most impressively, the entire search and summary process was completed in under three minutes, compared with the 10–12 minutes that both the OpenAI Agent and Manus.ai needed for the same task.

Recommendations

For research tasks requiring quick access to relevant academic and policy sources, Perplexity currently offers the most efficient and accurate results, closely matching the sources we had retrieved manually. Manus.ai provides greater control and transparency, especially when navigating restricted content, but it is considerably slower. The OpenAI Agent, though promising in design and autonomy, did not impress in this initial, rapid test because of its limited source coverage and its silent handling of blocked content. That said, this was only a preliminary evaluation focused on a single type of academic query. We plan to test the OpenAI Agent further in other research scenarios, where its agentic capabilities and tool integration may prove more effective.

The authors used GPT-4o [OpenAI (2025), GPT-4o (accessed on 4 August 2025), Large language model (LLM), available at: https://openai.com] to generate the output.