As GenAI tools become increasingly embedded in academic workflows, researchers seek ways to tailor these systems to their specific needs. OpenAI’s ChatGPT interface offers a number of personalisation options and privacy-focused features that can enhance research efficiency, consistency, and ethical compliance. This blog post introduces the key settings available to ChatGPT users, including custom instructions, memory, and model selection, and discusses how these can be configured to support scientific work.

1. Custom Instructions: Tailoring Response Style, Tone, and Background Knowledge

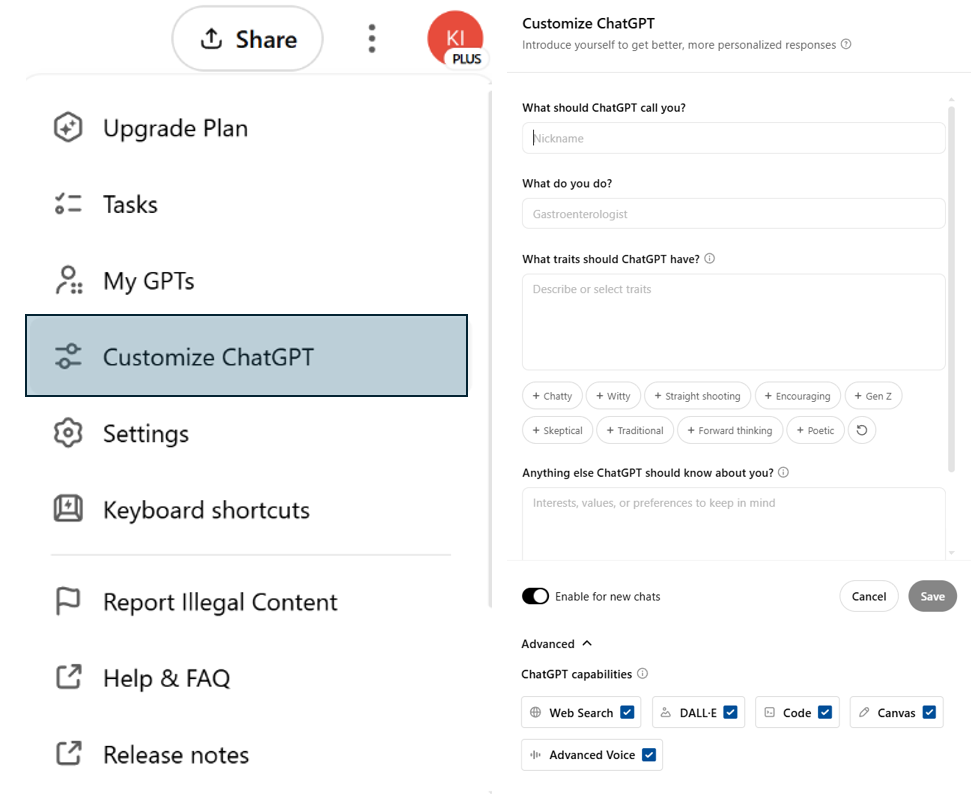

The "Customize ChatGPT" menu, located in the main sidebar, allows users to configure basic interaction preferences. Researchers can specify how the assistant should address them, indicate their professional role, and define preferred response traits such as concise, straightforward, or encouraging.

An optional free-text field enables further contextual information, for example:

- “I prefer UK spelling”,

- “Use APA7 citations”, or

- “I conduct academic research and value concise, evidence-based responses.”

These settings can help standardise tone and domain relevance across sessions, making interactions more consistent for academic and professional use.

2. Memory: Context Persistence Across Sessions

The Memory functionality in ChatGPT introduces persistent context across sessions, enabling the model to recall user-provided information such as research interests, preferred tone, or disciplinary norms. This is particularly useful in workflows requiring iterative engagement—such as progressive drafting, longitudinal analysis, or tool-assisted literature synthesis.

When enabled, memory supports:

- Greater semantic coherence across sessions.

- Reduced need for prompt repetition.

- Adaptive interaction tuned to researcher-specific expectations.

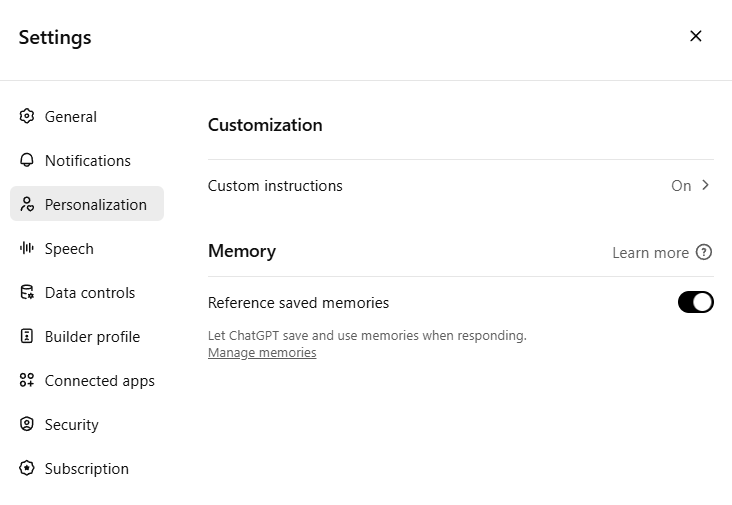

Users retain full control over what is stored. Via Settings > Personalisation > Memory, the “Manage memories” interface provides a transparent overview of saved content, with options to review, edit, or delete individual entries—or to clear memory entirely.

Disabling memory may be appropriate for sensitive or time-limited work, both to help protect confidentiality and to reduce the risk of context carrying over between unrelated projects. When the memory feature is deactivated, ChatGPT treats each session independently, without retaining contextual information from previous interactions.

3. Model Selection

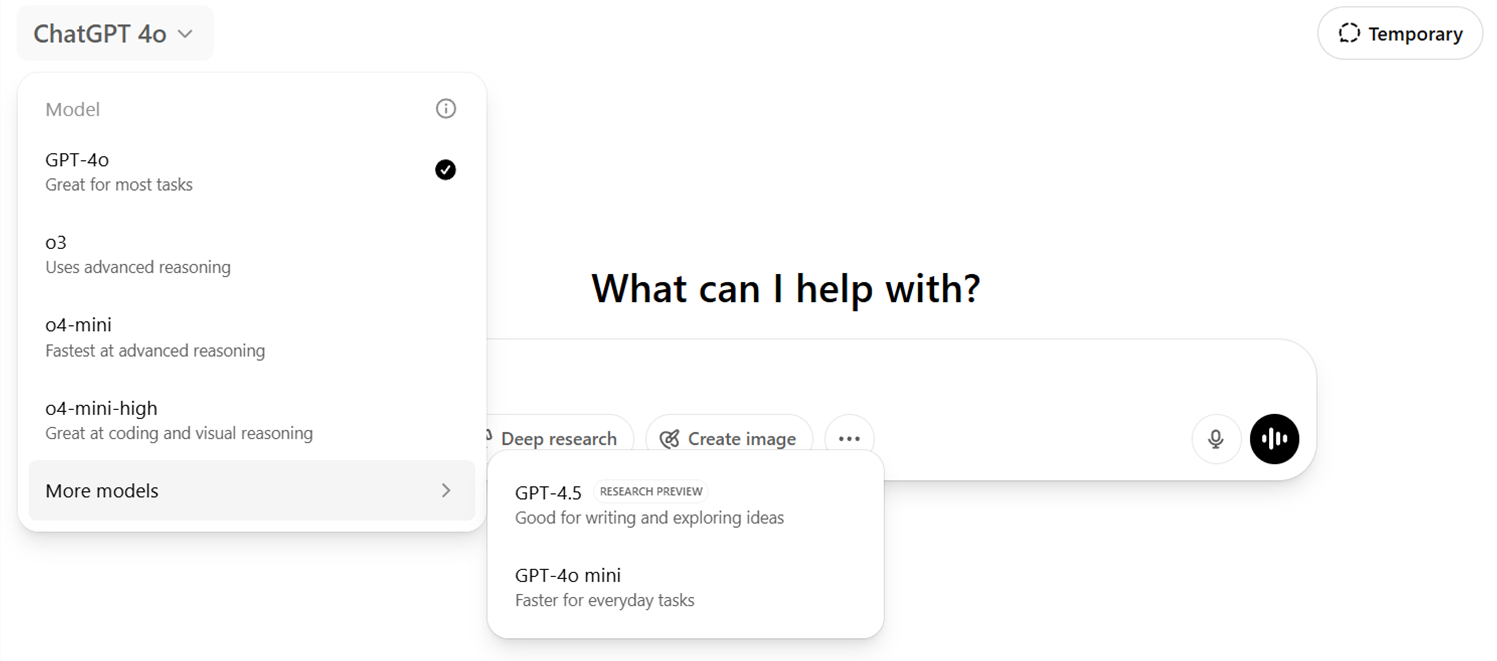

Users with a ChatGPT Plus subscription can select from several models, each optimised for distinct types of tasks. These options are available from the model selector at the top of the chat window:

- GPT-4o – The default model, suitable for most tasks including research assistance, reasoning, and multimodal input.

- o3 – Optimised for advanced reasoning; recommended for logic-heavy tasks or in-depth conceptual exploration.

- o4-mini – Designed for speed, offering rapid responses while maintaining solid reasoning performance.

- o4-mini-high – Tailored for coding and visual tasks, ideal for research involving structured data, code generation, or visual input.

- GPT-4.5 (Research Preview) – Good for writing and exploring ideas.

- GPT-4o mini – A lightweight alternative optimised for fast, general-purpose queries.
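For teams that want to document their model choices consistently, the list above can be written down as a simple lookup table. The following is a minimal sketch, not an OpenAI API: the task labels and the `suggest_model` helper are hypothetical names introduced for illustration, and the model names are copied from the list above and may change as the model selector is updated.

```python
# Illustrative lookup only. Task labels and the helper name are hypothetical;
# model names are copied from the list above and may change over time.
MODEL_BY_TASK = {
    "general": "GPT-4o",
    "advanced_reasoning": "o3",
    "fast_reasoning": "o4-mini",
    "coding_or_visual": "o4-mini-high",
    "writing_and_ideas": "GPT-4.5 (Research Preview)",
    "quick_query": "GPT-4o mini",
}


def suggest_model(task: str) -> str:
    """Return the model that the list above associates with a task label.

    Unknown labels fall back to the default model (GPT-4o).
    """
    return MODEL_BY_TASK.get(task, MODEL_BY_TASK["general"])


if __name__ == "__main__":
    print(suggest_model("advanced_reasoning"))
```

A table like this could sit in a lab handbook or project README so that collaborators pick the same model for the same kind of task; the choice itself is still made manually in the model selector.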

Recommendations

In sum, ChatGPT offers a range of configurable features—such as customisation, memory, and model selection—that can enhance research workflows by improving consistency, efficiency, and relevance. By aligning these settings with specific academic needs, researchers can make better use of the tool while maintaining control over tone, context, and data privacy.

The authors used GPT-4o [OpenAI (2025) GPT-4o (accessed on 22 April 2025), Large language model (LLM), available at: https://openai.com] to generate the output.