In a previous blog post, we focused on input file handling in leading GenAI interfaces, mapping out which formats could be uploaded and processed reliably and where the main limitations lay. This time, we turn to the other side of the equation, output generation, with a concrete test case: producing a fully functional, downloadable .txt file. Using the same prompt as in an earlier post on synthetic survey data generation, we re-tested the interfaces to see what has changed.

Several AI platforms have improved their ability to generate downloadable content, yet even basic functionality, such as producing a simple .txt file behind a stable, clickable link, remains inconsistent. Some models handle this task reliably; others still fail, getting stuck in endless output loops or producing unusable links. More complex formats such as .pptx, .xlsx, or .json present even greater challenges, often resulting in incomplete files or compatibility issues.

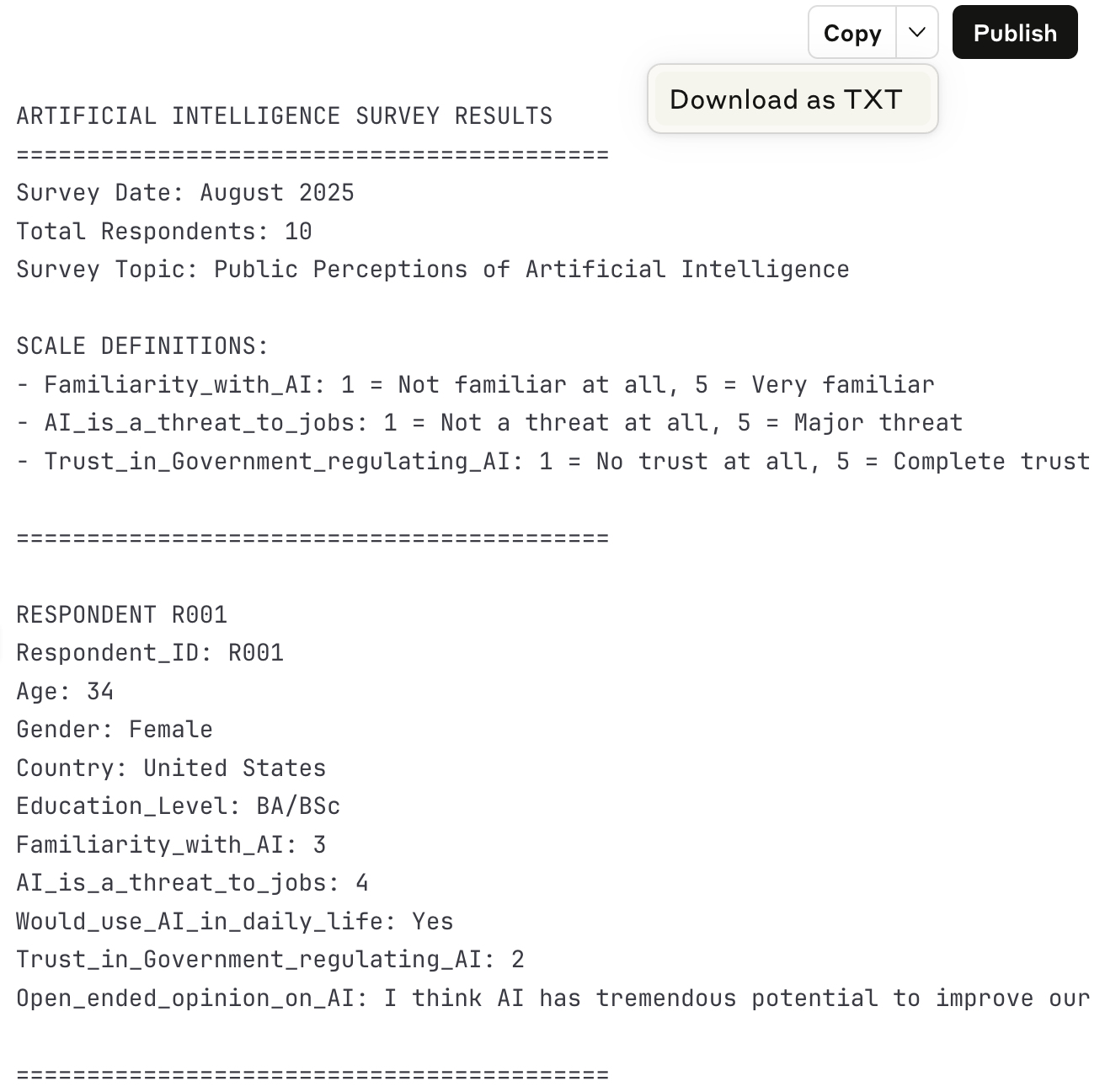

Gemini

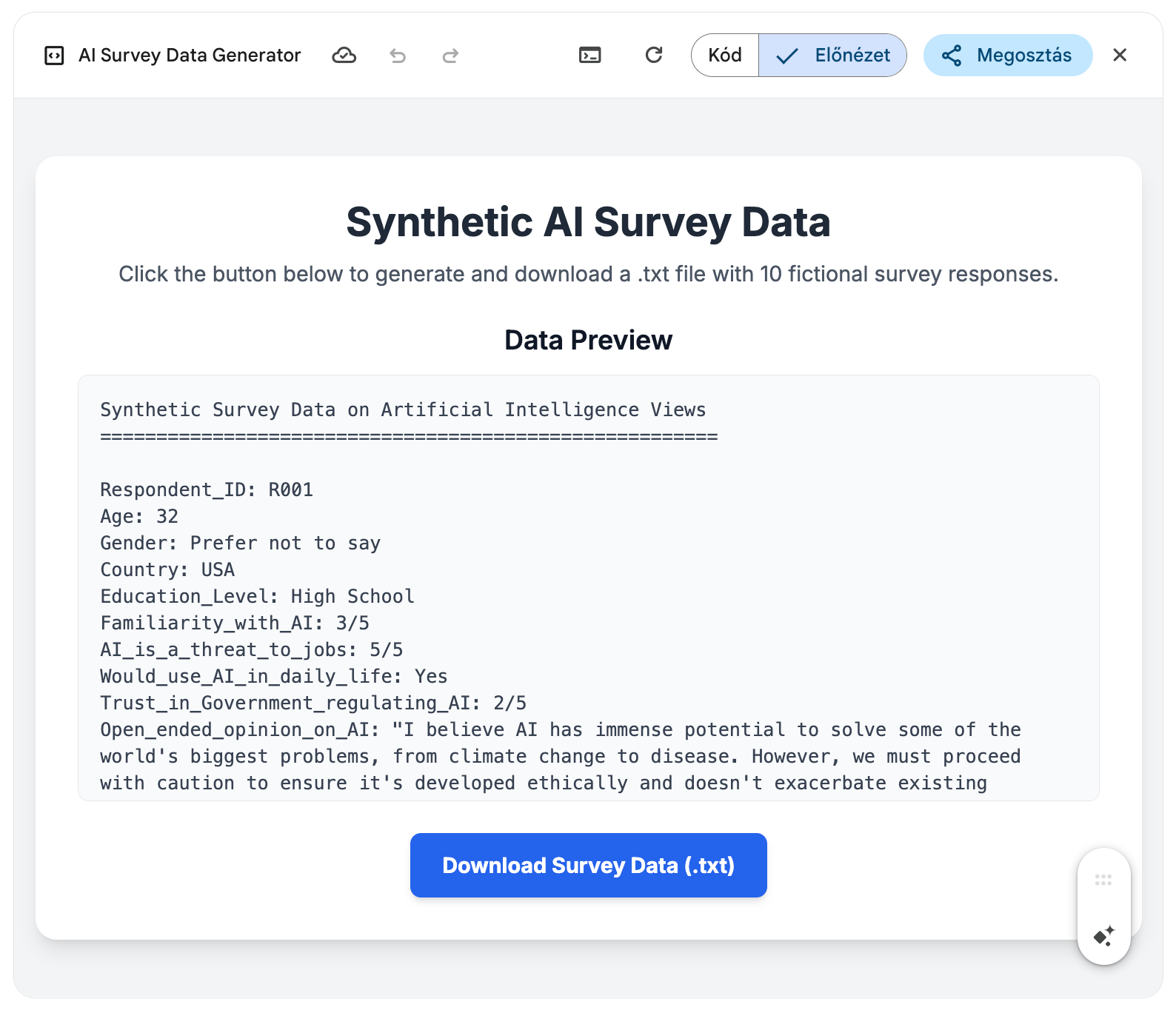

Gemini has shown notable improvement since our last test. In this round, it generated the requested structured .txt file without errors, providing a direct download link. Before downloading, the interface displayed the full content in a preview window, allowing users to check the data immediately. This combination of in-app preview and seamless export makes Gemini’s approach both user-friendly and efficient, particularly for workflows that benefit from quick content verification before saving.

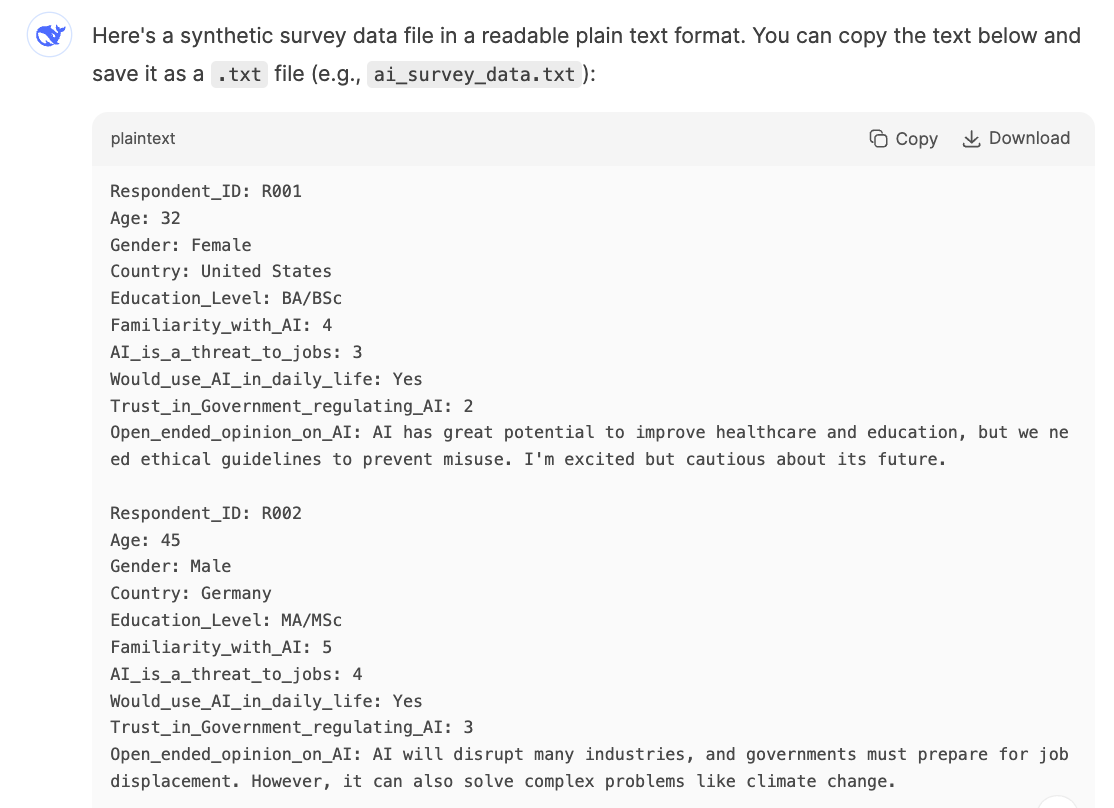

Mistral

Mistral was one of the interfaces that fully completed the task, generating structured data in the correct format and providing an actual downloadable .txt file, with no extra steps or post-processing required. The output was well structured, readable, and precisely aligned with the prompt. Because Mistral also publishes open-weight models, it stands out for its flexibility in research and deployment contexts, offering a rare blend of transparency and functionality. For workflows that require a straightforward plain-text export, it is a reliable choice.

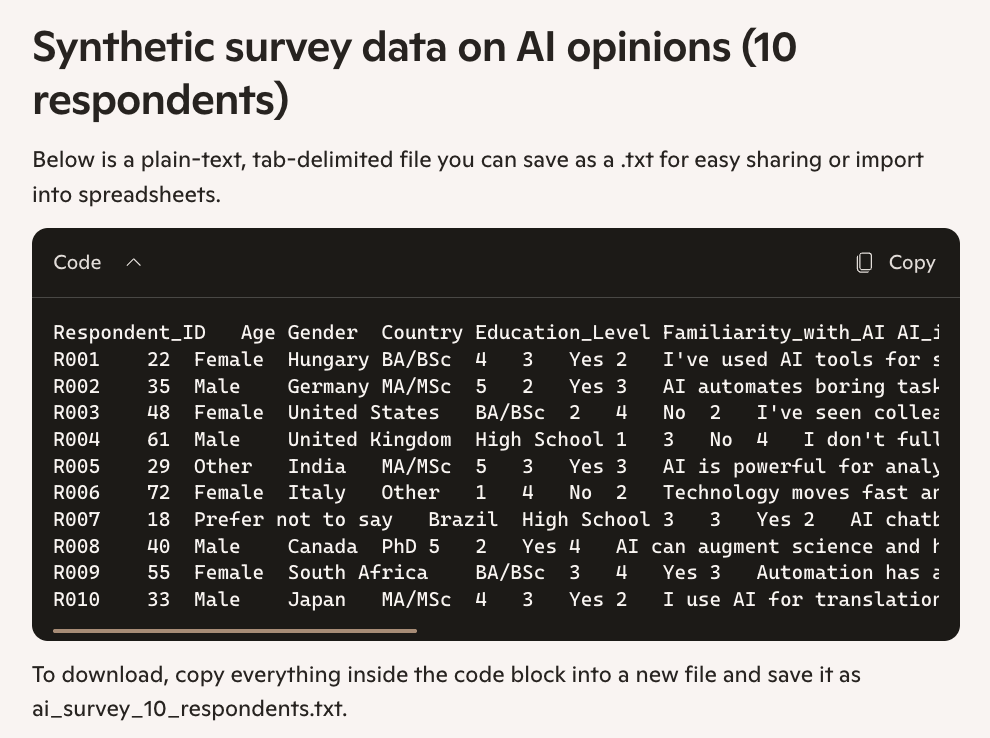

ChatGPT

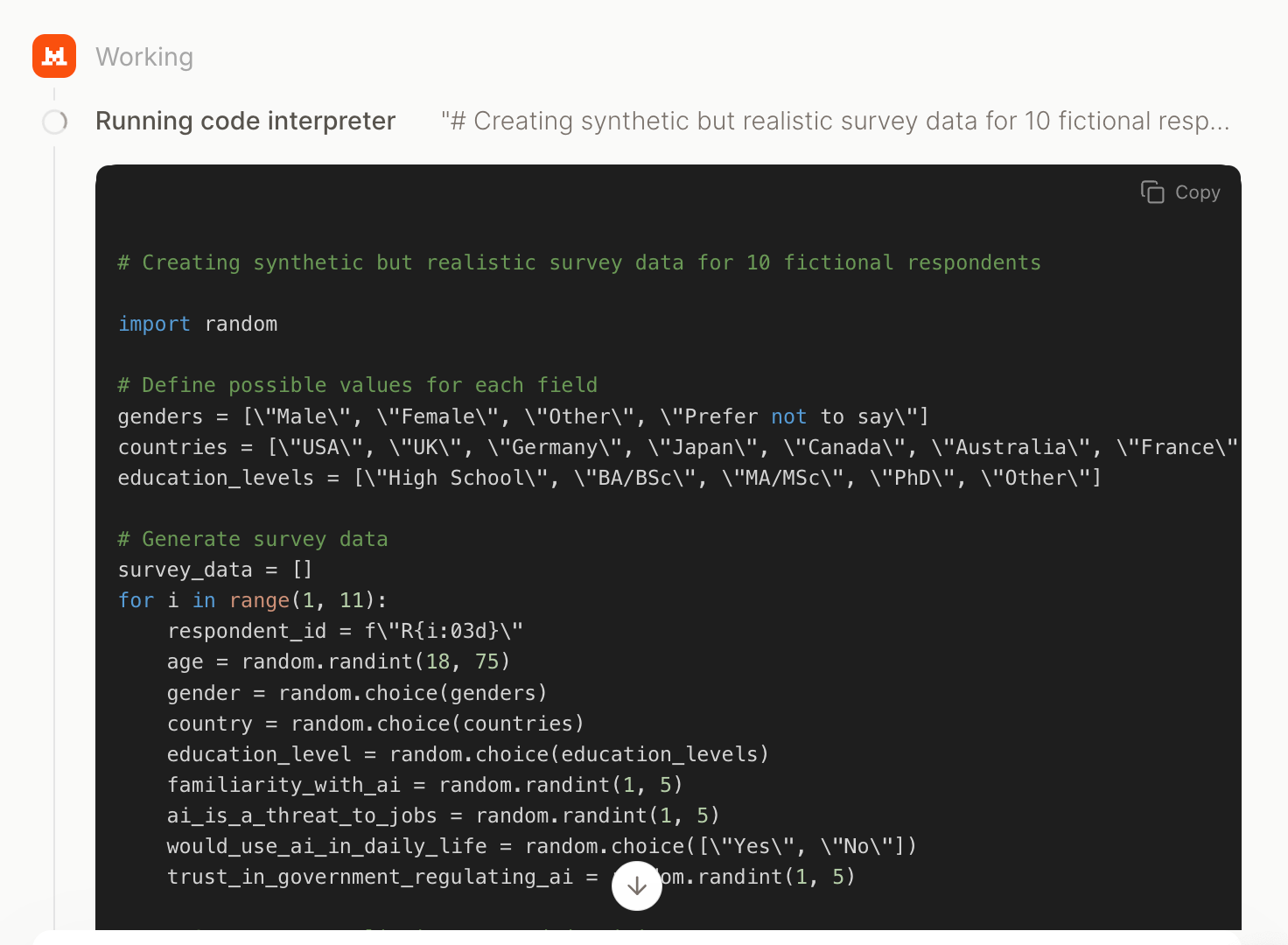

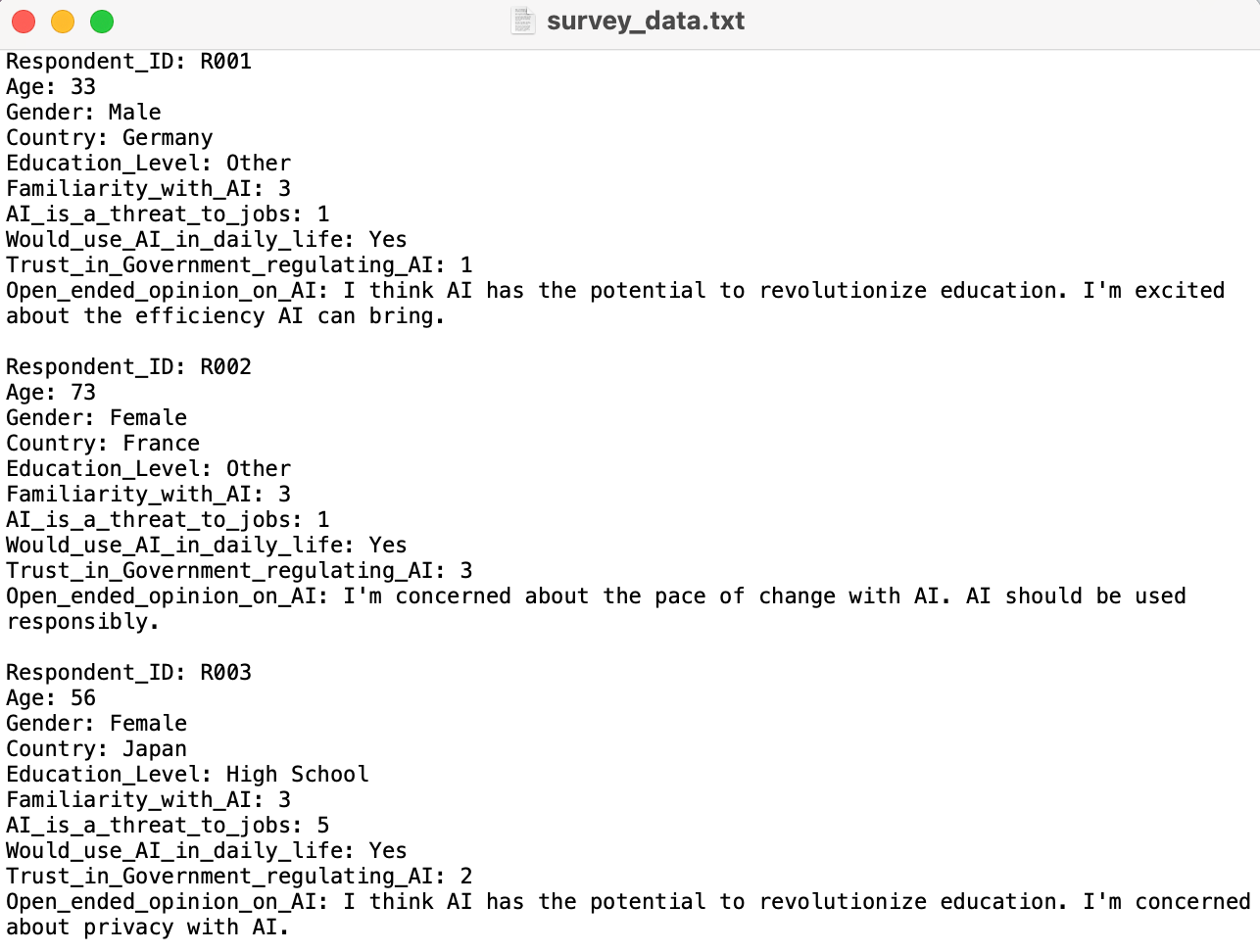

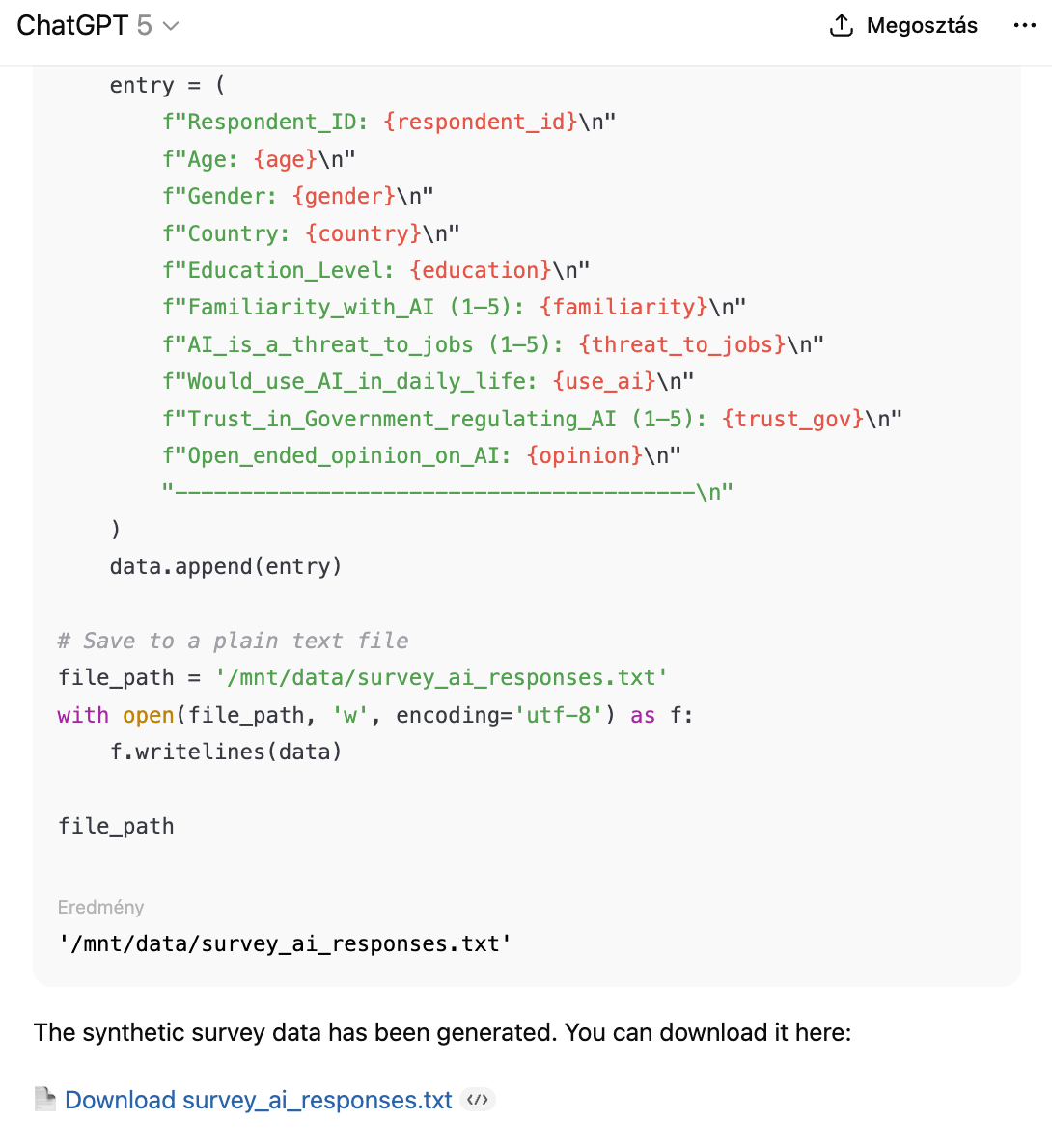

ChatGPT completed the task without fuss. The models we tested correctly generated the required data and saved it in a clean .txt file that was immediately available for download. The output matched the prompt's structure and was presented in a readable, table-like format that opened flawlessly in standard text editors. Unlike several other interfaces, ChatGPT required no manual copying or code-based instructions to produce the file. If you use OpenAI's higher-tier models and need .txt export as part of your workflow, they get the job done dependably.

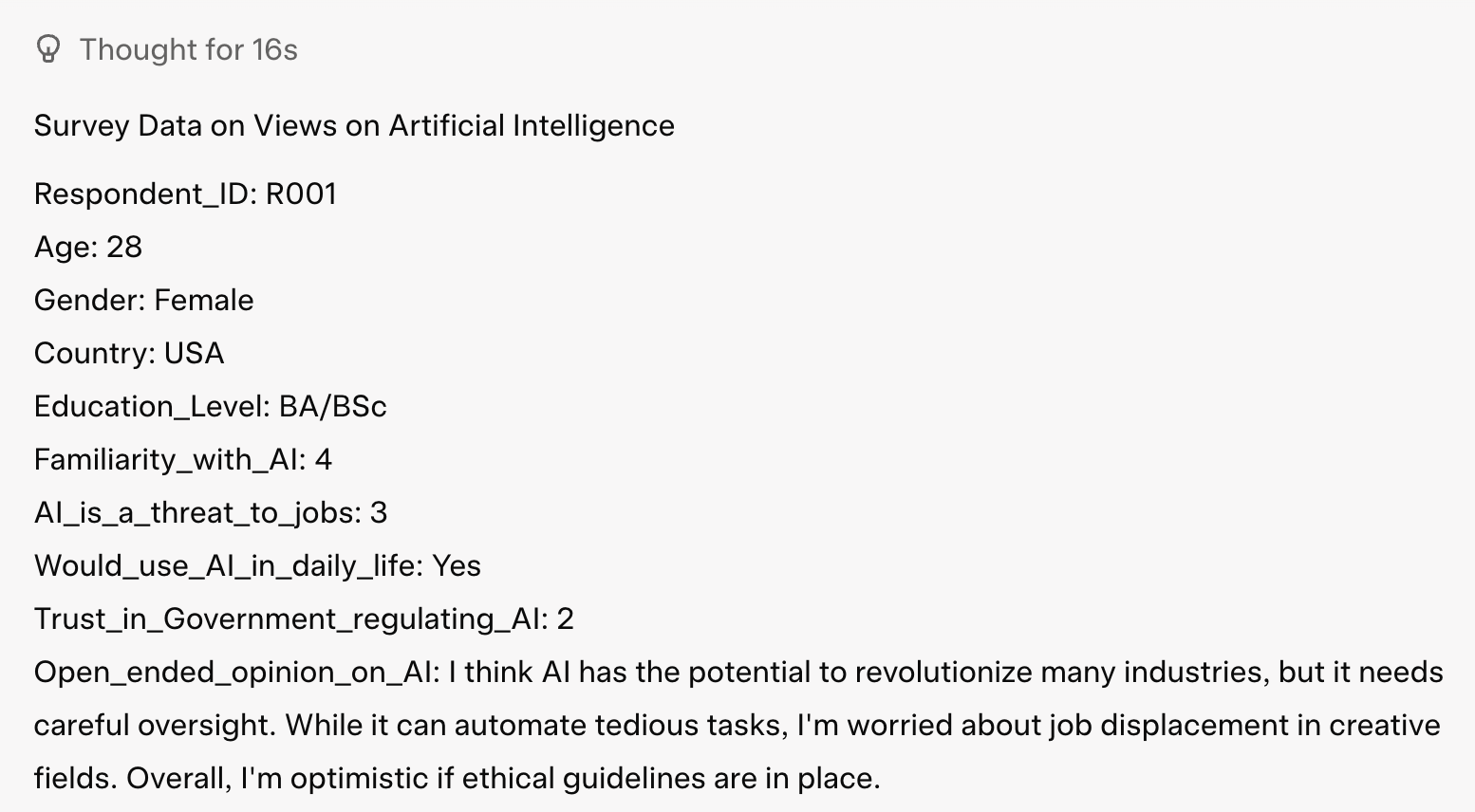

Claude

Claude also handled the task smoothly, producing exactly the structured survey data we requested. The content was presented in a clean, readable format that could be copied directly into a text editor or downloaded as a .txt file without any further reformatting.

DeepSeek

DeepSeek has also made progress since the last comparison. Previously, it only returned the file content as plain text, requiring users to copy and save it manually. In the latest test, however, it offered a direct .txt download alongside the inline content preview, significantly improving usability. This small but important change removes the extra step of manual file creation and brings DeepSeek closer to the more user-friendly solutions offered by its competitors.

Copilot

Copilot’s file generation method has not changed since the previous comparison. It still produces the file content only as copyable plain text, accompanied by instructions to open a text editor and save the content manually as a .txt file. While this approach ensures compatibility across platforms, it lacks the convenience of an instant download link or integrated file output, which competitors have increasingly adopted.

Grok

Grok also did not offer a downloadable file. Instead, it returned the dataset as plain text within the chat, requiring the user to manually copy and save the content. This limits convenience for spreadsheet workflows or automated processing, as no structured file output is generated.

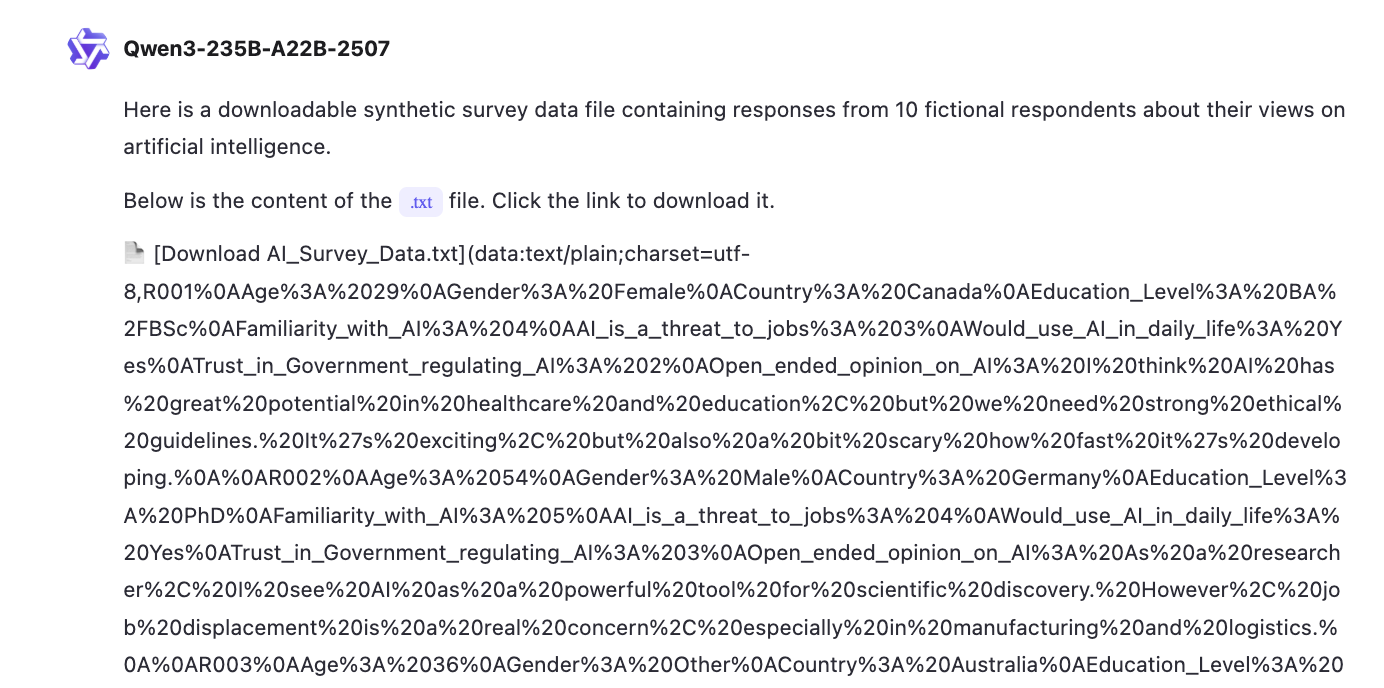

Qwen

Qwen attempted to provide a .txt file with synthetic survey responses from 10 respondents, but instead began generating an extremely long, URL-encoded text stream. The process became stuck in a continuous output loop, producing an effectively endless link until it had to be manually stopped. As a result, no usable file was produced.

Recommendations

For researchers relying on downloadable outputs, platform choice still matters. While several interfaces have improved since our last test, significant differences remain: some can now handle a range of formats seamlessly, while others still struggle with even the simplest file types. Complex formats such as .pptx, .xlsx, or .json are generally more error-prone, so it is essential to test the full workflow before committing to a single tool. In practice, choosing an interface that matches both the file types you need and your preferred export process will minimise interruptions and help ensure results are usable straight away.
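One way to act on that advice is to check each downloaded export automatically rather than by eye. The sketch below is a minimal, hypothetical example: it assumes the .txt file is tab-separated with a single header row, and the function name `check_txt_export`, its parameters, and the file names are illustrative only, not part of any platform's output format.

```python
from pathlib import Path


def check_txt_export(path, expected_rows, delimiter="\t"):
    """Return a list of problems found in a downloaded .txt export (empty if none).

    Hypothetical helper; assumes a delimited export with exactly one header row.
    """
    p = Path(path)
    if not p.is_file():
        return [f"{path}: file not found"]
    try:
        text = p.read_text(encoding="utf-8")
    except UnicodeDecodeError:
        return [f"{path}: not valid UTF-8"]

    # Ignore blank lines so trailing newlines do not count as rows.
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return [f"{path}: file is empty"]

    header, rows = lines[0], lines[1:]
    problems = []
    if len(rows) != expected_rows:
        problems.append(f"expected {expected_rows} data rows, found {len(rows)}")

    # Every data row should have the same number of fields as the header.
    n_cols = len(header.split(delimiter))
    for i, row in enumerate(rows, start=1):
        if len(row.split(delimiter)) != n_cols:
            problems.append(f"data row {i}: column count differs from header")
    return problems
```

For the survey prompt used in this comparison, `expected_rows` would be the number of respondents requested (10 in the Qwen test). Adjust `delimiter` if an interface exports comma-separated values or uses another separator.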