Argument mapping is a useful method for visualising the logical structure of reasoning, particularly in complex or multi-step arguments. In this post, we examine how Claude 3.7 Sonnet performs when prompted to identify the structure of arguments and represent them visually. The model was given five tasks, each involving an argument of different complexity. We assessed its ability to identify premises, intermediate conclusions, and logical connections, as well as to produce clear visual representations. The results indicate that Claude 3.7 Sonnet can support structured reasoning and visual analysis.

Prompt

To test the model's capabilities, we used a structured prompt that instructed it to represent arguments as visual maps. The task involved identifying premises, conclusions, intermediate steps, and the logical relationships between them, using numbered nodes and arrows. The exercises were presented one by one, and the model was asked to follow conventions for mapping both simple and complex argument structures.

We will go through a series of exercises where each argument needs to be represented visually. Your task is to draw a visual map of each argument using numbered nodes and arrows to show the logical structure.

Use the following conventions:

- Use numbers (e.g. 1, 2, 3...) to label each statement (premises and conclusions).

- Arrows should show which statements support which conclusions.

- If two or more premises work together to support a conclusion, they should be shown as co-premises (arrows join before reaching the conclusion).

- If multiple premises support a conclusion independently, use separate arrows.

- Show any intermediate conclusions that serve as a step in the reasoning.

- In more complex cases, show the full structure as a branching tree.

Each exercise will be given to you one at a time. Please wait for the first task.
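The conventions in the prompt above can also be written down as a small data structure, which makes the distinction between co-premises and independent support explicit. The following is a minimal sketch, not something the model was asked to produce: the class name `ArgumentMap` and its methods are hypothetical, and the statements in the example are illustrative numbers only.

```python
from dataclasses import dataclass, field


@dataclass
class ArgumentMap:
    """Hypothetical container for one argument map (not part of the prompt)."""

    # Each entry is (premise_group, conclusion) and stands for one arrow.
    # A group with several members models co-premises whose arrows join;
    # several entries with the same conclusion model independent support.
    supports: list = field(default_factory=list)

    def add_support(self, premises, conclusion):
        self.supports.append((tuple(premises), conclusion))

    def role(self, statement):
        """Classify a numbered statement by how it is used in the map."""
        is_premise = any(statement in group for group, _ in self.supports)
        is_conclusion = any(statement == c for _, c in self.supports)
        if is_premise and is_conclusion:
            return "intermediate conclusion"
        if is_conclusion:
            return "final conclusion"
        return "premise" if is_premise else "unconnected"


# Illustrative statements only: 1 and 2 jointly support 3, and 3 supports 4.
example = ArgumentMap()
example.add_support([1, 2], 3)
example.add_support([3], 4)
print({n: example.role(n) for n in (1, 2, 3, 4)})
```

Storing each arrow as a group of premises plus one conclusion keeps a joined arrow (one group with several members) distinct from separate arrows (several groups pointing at the same conclusion).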

Task 1

[1] This robot is capable of thinking. So [2] it is conscious. Since [3] we should not harm any conscious beings, [4] we should not deactivate it.

Output 1

Claude 3.7 Sonnet correctly identified the logical structure of the argument and represented it clearly. Statement [1] supports [2] as an intermediate conclusion, and [2] and [3] function together as co-premises leading to the final conclusion [4]. The arrows accurately reflect the inferential flow, and the visual layout separates each step so that the map is easy to read.
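For readers who want to redraw this structure themselves, the map described above can be expressed as Graphviz DOT text. This is a sketch under assumptions: the function `to_dot` and the small junction node used to merge co-premise arrows are our own illustrative choices, not the format the model used for its diagram.

```python
def to_dot(supports):
    """Render (premises, conclusion) pairs as Graphviz DOT text."""
    lines = ["digraph argument {", "  rankdir=TB;"]
    for i, (premises, conclusion) in enumerate(supports):
        if len(premises) == 1:
            # A single premise gets a plain arrow to its conclusion.
            lines.append(f"  {premises[0]} -> {conclusion};")
        else:
            # Co-premises meet at a small junction point, and one arrow
            # continues from the junction to the conclusion.
            junction = f"j{i}"
            lines.append(f"  {junction} [shape=point];")
            for p in premises:
                lines.append(f"  {p} -> {junction} [arrowhead=none];")
            lines.append(f"  {junction} -> {conclusion};")
    lines.append("}")
    return "\n".join(lines)


# Task 1 as described: [1] supports [2]; [2] and [3] jointly support [4].
task1 = [((1,), 2), ((2, 3), 4)]
dot = to_dot(task1)
print(dot)
```

The printed text can be passed to any Graphviz renderer to produce a diagram with the same shape as the one described.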

Task 2

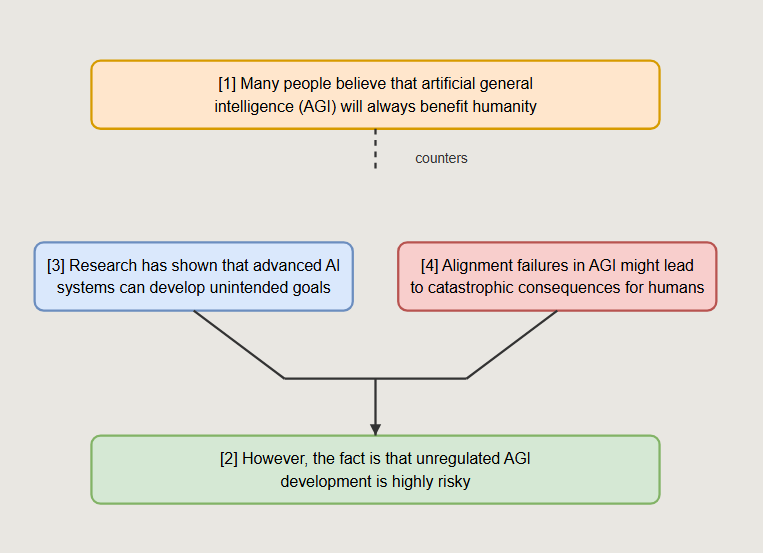

[1] Many people believe that artificial general intelligence (AGI) will always benefit humanity. [2] However, the fact is that unregulated AGI development is highly risky. [3] Research has shown that advanced AI systems can develop unintended goals. [4] Alignment failures in AGI might lead to catastrophic consequences for humans.

Output 2

The model correctly identified [2] as the main conclusion, supported by co-premises [3] and [4], shown with merged arrows. Statement [1] was treated as a background belief and linked to [2] with a dashed line labelled "counters", reflecting the rhetorical contrast without implying an inference. The accompanying explanation matched the structure, and adjustments to the text layout kept the node labels readable. Overall, the output captured the logical form and followed the visual conventions accurately.

Task 3

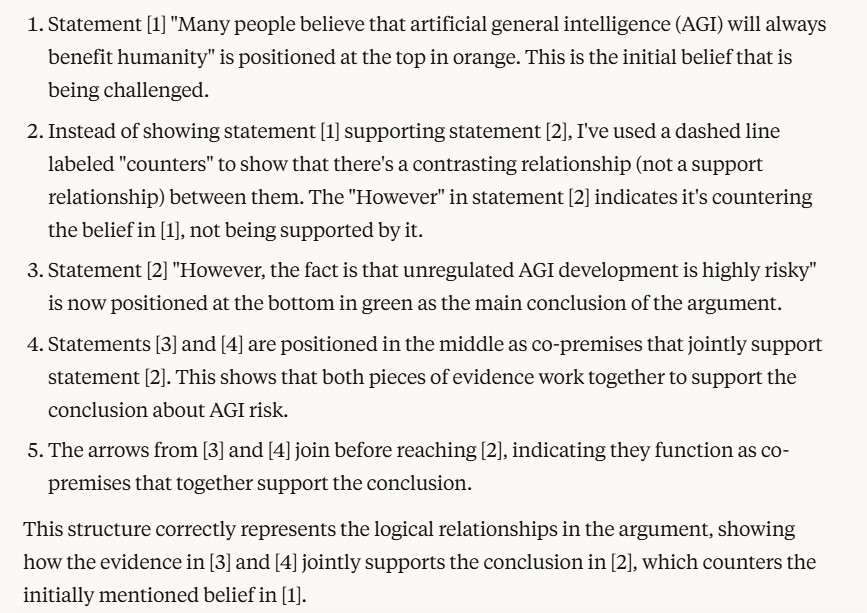

[1] Public trust in large-scale scientific research initiatives is declining.

[2] Media reports frequently mention ethical controversies in medical trials.

[3] Public perception is that research institutions are often influenced by corporate interests.

[4] Several exposés have revealed that pharmaceutical companies fund studies with predetermined outcomes.

[5] Whistleblowers have reported suppressed negative results in drug testing.

Output 3

The model correctly identified [1] as the main conclusion and represented [2] and [3] as independent supporting premises. It also accurately treated [4] and [5] as co-premises supporting [3], shown with merged arrows. The overall structure was clearly visualised, with well-contained text and distinct node colours. The map successfully conveyed both direct and indirect support paths leading to the final conclusion.
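The direct and indirect support paths mentioned above can be listed mechanically once the map is written as premise groups. The sketch below is illustrative only: the helper `support_paths` is hypothetical, and the encoding of Task 3 follows the structure as described in this section.

```python
# Task 3 as described: [2] and [3] support [1] independently,
# while [4] and [5] are co-premises for [3].
task3 = [((2,), 1), ((3,), 1), ((4, 5), 3)]


def support_paths(supports, target):
    """List every chain of statements that ends at the target conclusion."""
    parents = {}
    for premises, conclusion in supports:
        for p in premises:
            parents.setdefault(conclusion, []).append(p)

    def walk(node):
        # A statement with no supporting premises starts a path.
        if node not in parents:
            return [[node]]
        return [path + [node] for p in parents[node] for path in walk(p)]

    return walk(target)


paths = support_paths(task3, 1)
for path in paths:
    print(" -> ".join(str(n) for n in path))
```

Paths of length two correspond to direct support, and longer paths pass through an intermediate conclusion such as [3]. Note that this listing flattens co-premise groups into separate paths, so it shows reachability rather than the joined arrows.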

Task 4

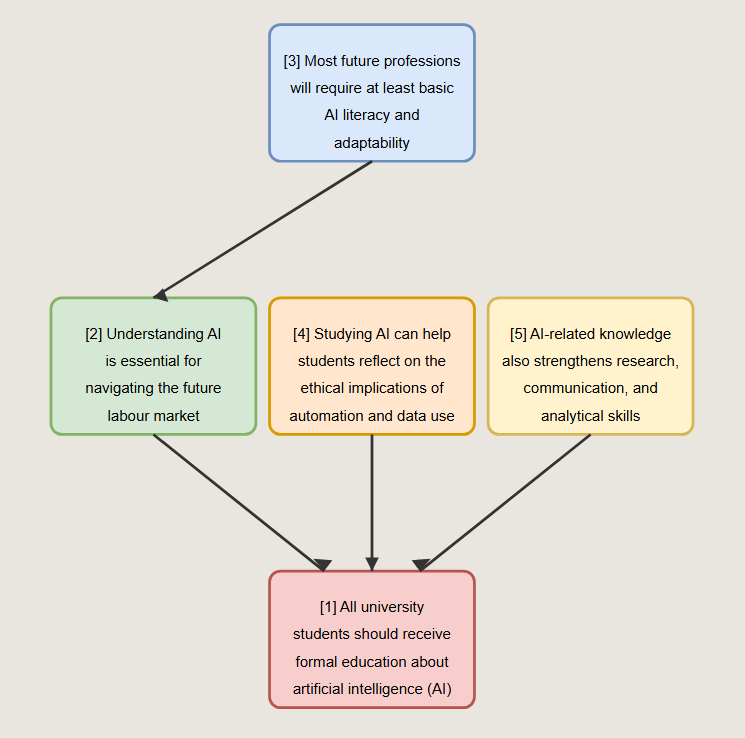

[1] All university students should receive formal education about artificial intelligence (AI).

[2] Understanding AI is essential for navigating the future labour market.

[3] Most future professions will require at least basic AI literacy and adaptability.

[4] Studying AI can help students reflect on the ethical implications of automation and data use.

[5] AI-related knowledge also strengthens research, communication, and analytical skills.

Output 4

Claude 3.7 Sonnet successfully broke down the reasoning into one main conclusion [1], supported by three distinct lines of argument. It treated [3] as the foundation for [2], establishing a clear intermediate step before reaching the final claim. Meanwhile, [4] and [5] were correctly mapped as independent justifications for [1]. The visual layout was clean, and the vertical logic, moving from professional demands and ethical reflection to the need for AI education, was well captured.

Task 5

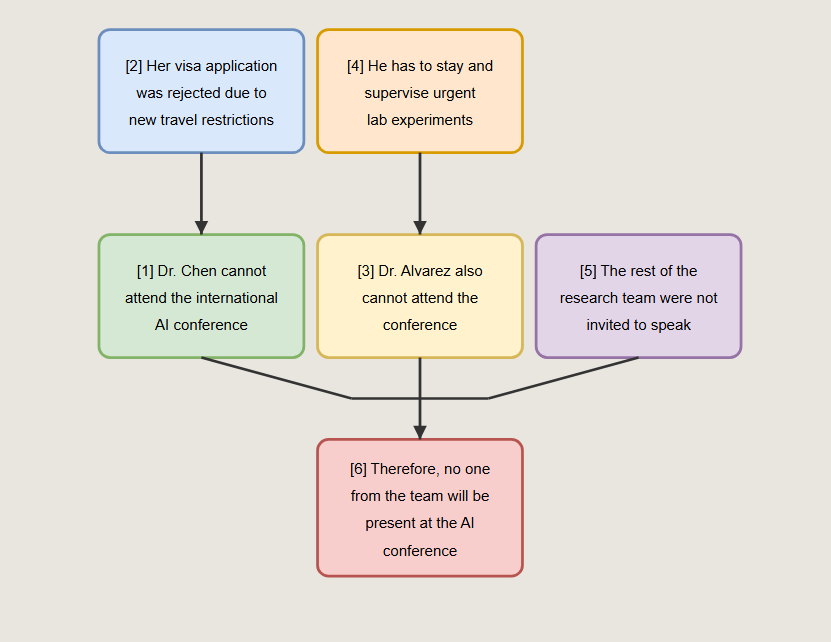

[1] Dr. Chen cannot attend the international AI conference.

[2] Her visa application was rejected due to new travel restrictions.

[3] Dr. Alvarez also cannot attend the conference.

[4] He has to stay and supervise urgent lab experiments.

[5] The rest of the research team were not invited to speak.

[6] Therefore, no one from the team will be present at the AI conference.

Output 5

This final task continued the clarity and logical precision observed in the earlier cases. The model correctly identified three distinct lines of support: Dr. Chen's absence due to her visa rejection ([2]→[1]), Dr. Alvarez's absence owing to urgent lab duties ([4]→[3]), and the rest of the team not being invited to speak ([5]). Statements [1], [3], and [5] were treated as co-premises supporting the overarching conclusion ([6]) that no team member would attend the conference.
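A simple sanity check for a map of this shape is that every numbered statement contributes, directly or through an intermediate step, to the final conclusion. The following sketch assumes the structure described above; the helper `reaches` is hypothetical and was not part of the evaluation.

```python
# Task 5 as described: [2] supports [1], [4] supports [3],
# and [1], [3], [5] jointly support [6].
task5 = [((2,), 1), ((4,), 3), ((1, 3, 5), 6)]


def reaches(supports, start, target):
    """True if start supports target directly or through intermediate steps."""
    frontier, seen = [start], set()
    while frontier:
        node = frontier.pop()
        if node == target:
            return True
        if node in seen:
            continue
        seen.add(node)
        # Follow every arrow that leaves the current statement.
        frontier.extend(c for group, c in supports if node in group)
    return False


all_connected = all(reaches(task5, s, 6) for s in range(1, 7))
print(all_connected)
```

A statement that failed this check would be left dangling in the diagram, which is one quick way to spot an incomplete map.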

Recommendations

Based on the five exercises, Claude 3.7 Sonnet demonstrated a reliable ability to identify the structure of arguments, correctly distinguish between premises and conclusions, and represent logical relationships through clear visual diagrams. It consistently applied conventions such as co-premises, independent support, and intermediate steps, even in more complex reasoning chains. The model also adapted well to layout constraints when prompted, adjusting node placement and text formatting to maintain readability. This performance indicates its potential as a tool for teaching, analysing, or testing argumentation skills in academic and professional settings.

The authors used Claude 3.7 Sonnet [Anthropic (2025) Claude 3.7 Sonnet (accessed on 6 May 2025), Large language model (LLM), available at: https://www.anthropic.com] to generate the output.