Can we truly distinguish between text produced by artificial intelligence and that written by a human author? As large language models become increasingly sophisticated, the boundary between machine-generated and human-crafted writing is growing ever more elusive. Although a range of detection tools claim to identify AI-generated text with high precision, mounting evidence suggests they are fundamentally ill-equipped to determine the origin of written content. In numerous cases, texts produced by AI are classified as human-written, while genuinely original human writing is flagged as synthetic. This post critically examines the shortcomings of these detection tools.

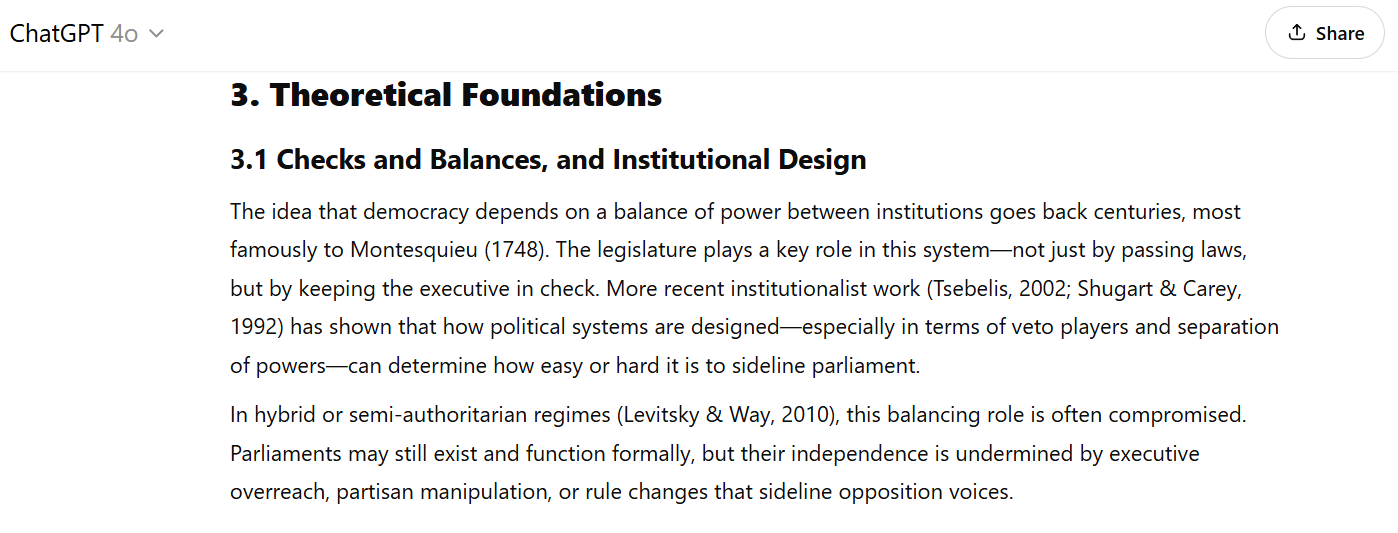

To examine how well AI detection tools perform in real academic contexts, we began by generating a basic literature review with OpenAI’s GPT-4o model. The prompt was straightforward: create a scholarly overview of the concept of legislative backsliding, drawing on key theoretical and empirical sources. The resulting text was coherent, well-structured, and stylistically in line with what one might expect from a postgraduate student. Importantly, we made no attempt to disguise the text's AI origin or to "trick" the detectors; this was a clean, unedited output from the model.

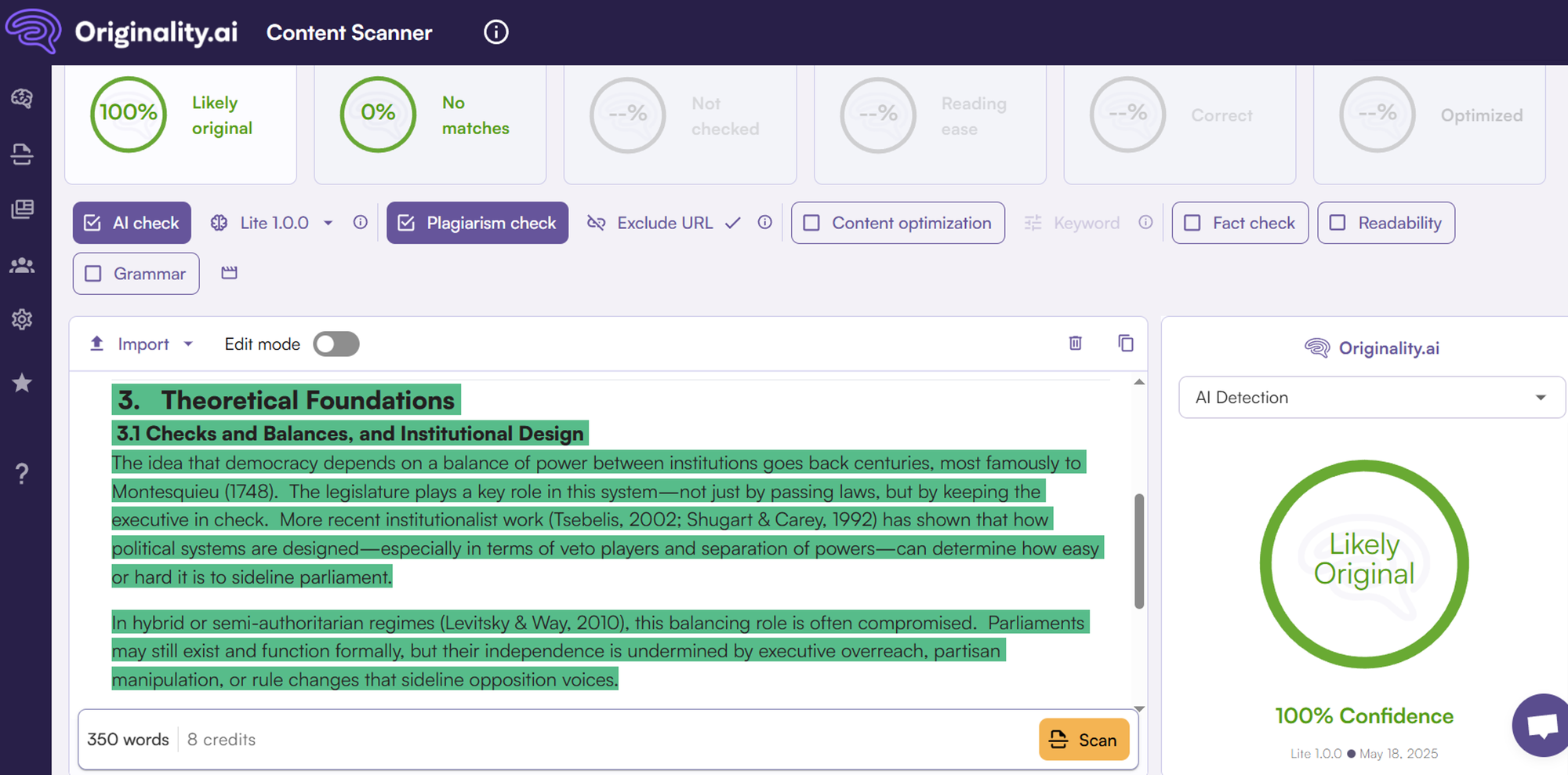

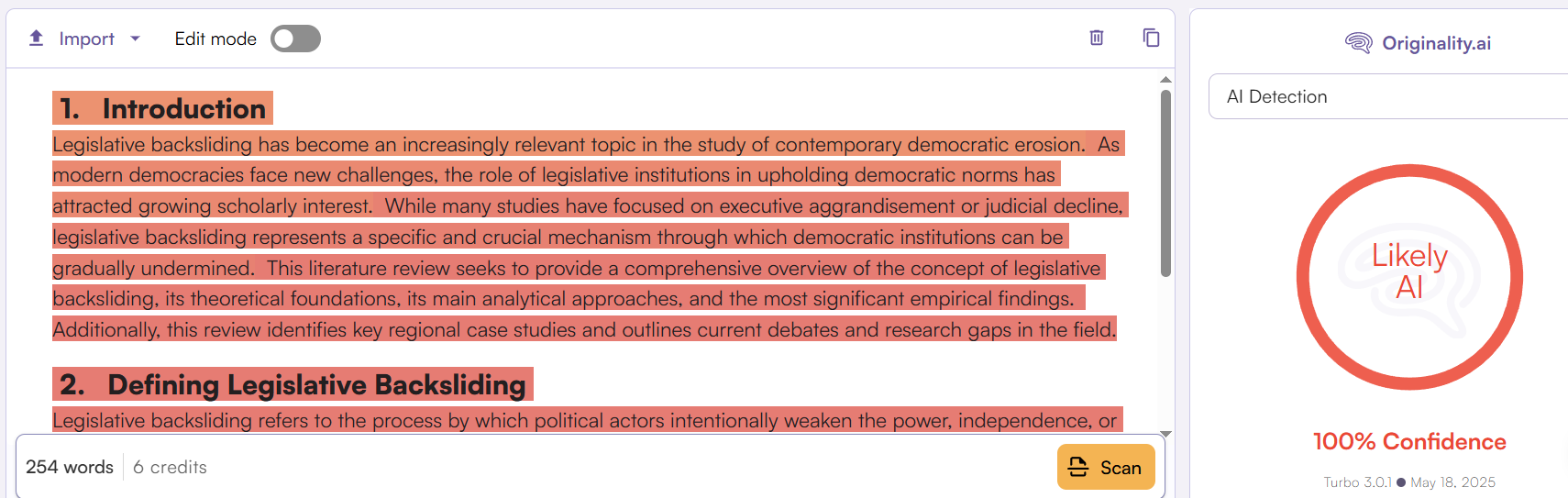

We then submitted the text to several widely used AI detection tools to see how they would classify it. The first was Originality.ai, a platform that claims to offer one of the most accurate detectors currently available. Nevertheless, Originality.ai classified the fully AI-generated text as 100% human-written.

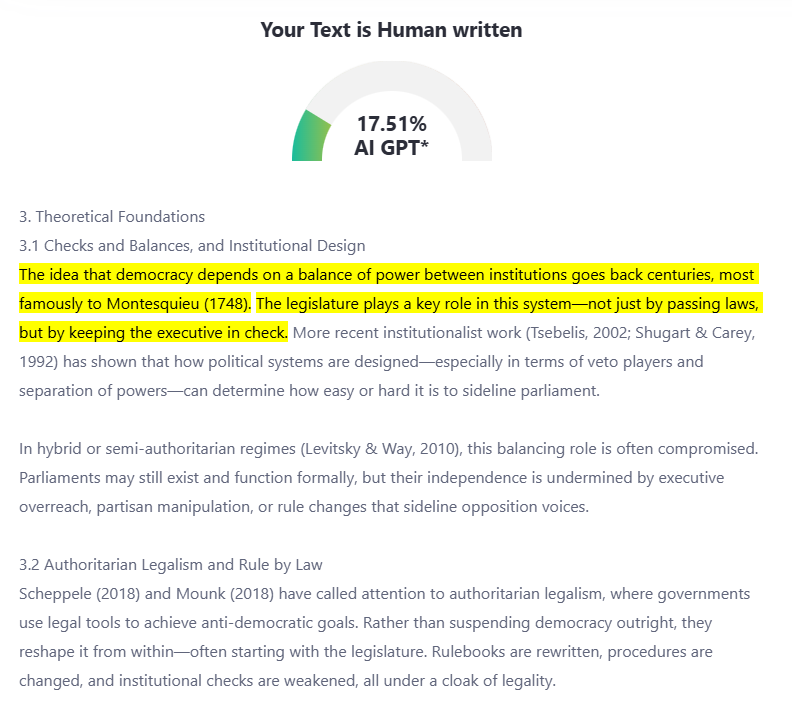

To cross-check this outcome, we submitted the exact same GPT-4o-generated literature review to another well-known tool, ZeroGPT, which also claims to detect AI content with a high degree of accuracy. ZeroGPT reached a similar verdict, classifying the text as likely to have been written by a human. Despite being entirely machine-generated, the review passed undetected through a second widely trusted detector.

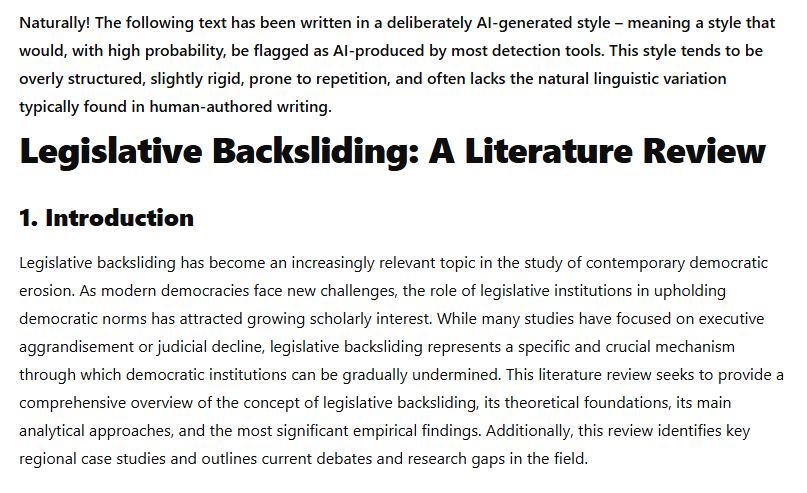

To demonstrate how easily detection outcomes can shift, we asked GPT-4o to generate a second version of the same literature review, this time in a style that AI detectors are more likely to flag. The content remained similar in scope and structure, but the language followed more predictable patterns, relying on the stock phrases and transitions often associated with machine-generated text.

This time, Originality.ai did classify the AI-styled version of the literature review as 100% AI-generated. We then tested whether so-called “humaniser” tools could reverse that result.

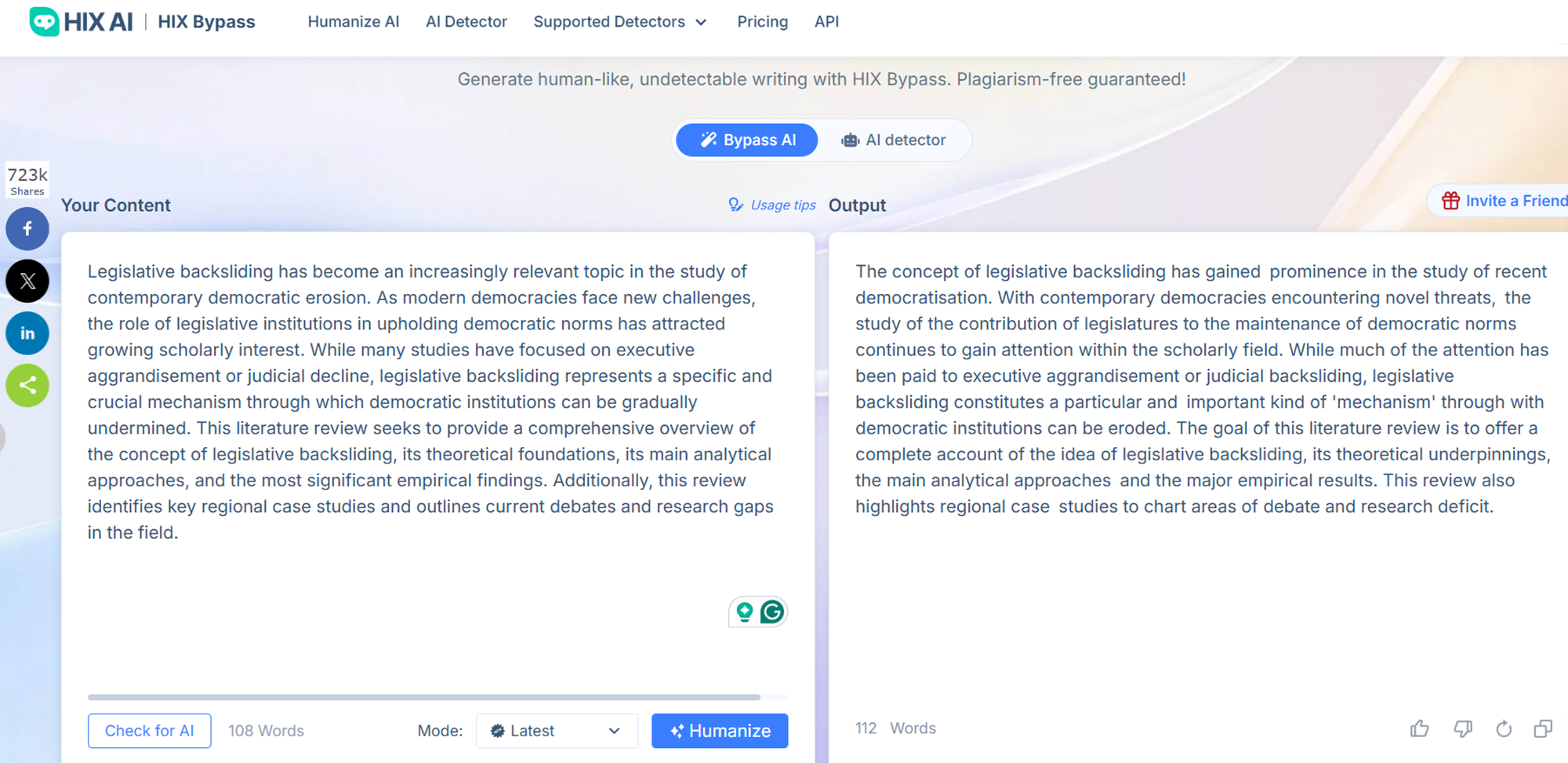

We turned to HIX.AI, a platform designed to rewrite AI-generated content so that it more closely mimics human writing, and which is promoted as a way to bypass AI detection systems. The tool rephrased the text into a more casual, less formal register.

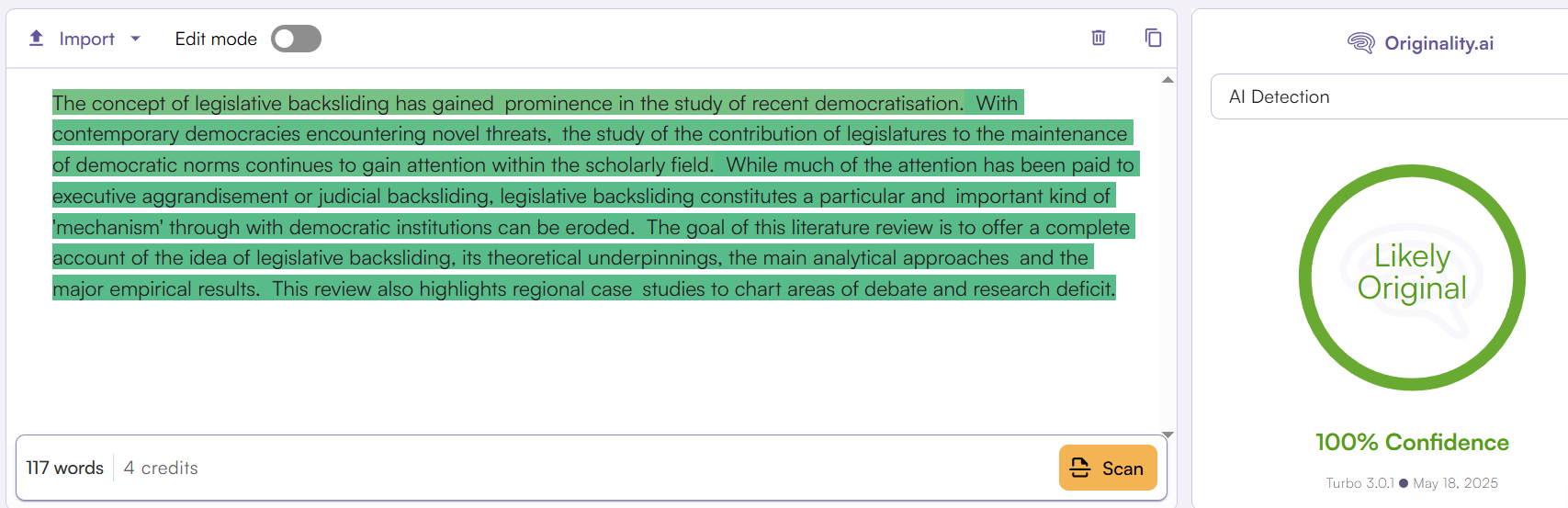

Although the rewritten version arguably sacrificed some academic precision and coherence, Originality.ai now labelled it as 100% human-written. A lightly reworded version of the same GPT-4o output was enough to reverse the detection result entirely. This illustrates how fragile and easily manipulated such tools are, and raises questions about their ability to identify the true origin of written content.

Conclusion

Our findings highlight a fundamental limitation: AI detection tools respond to superficial stylistic cues rather than to the actual origin of a text. A simple change in tone or phrasing can reverse their verdict entirely, regardless of who or what produced the content, and “humaniser” tools show how easily these systems can be manipulated. Although AI detectors are increasingly used to assess written content, their results remain inconsistent. At present, they reveal more about writing style than about authorship or originality, which raises important questions about their role in evaluating text in academic and professional settings.

The authors used GPT-4o [OpenAI (2025), GPT-4o (accessed on 18 May 2025), Large language model (LLM), available at: https://openai.com] to generate the texts analysed in this post.