This post presents a practical solution for integrating OpenAI’s GPT API into RStudio using a custom Shiny interface. The tool enables real-time code generation from natural language instructions, allowing users to interact with GPT-4 directly within their R workflow, without leaving the environment or blocking the console. We show how to store the API key securely and build the Shiny-based chat interface, and we explain why running the app in a separate R session matters. Finally, we test the system on the classic mtcars dataset to illustrate how well it generates and executes R code for data visualisation.

Setting up the API key in RStudio

Rather than hard-coding the OpenAI API key in a script—which poses clear security and reproducibility risks—we used a more robust and conventional approach by storing it in the .Renviron file. This method ensures the key is automatically loaded in every new R session and remains hidden from the codebase.

To do this, we used the following command directly in the R console:

file.edit("~/.Renviron")

This command opens the user-level environment file for editing. We then added the following line to the file (replacing the placeholder with our actual API key):

OPENAI_API_KEY=sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

After saving the file, we restarted R so that the updated environment would be loaded. This can be done manually via Session > Restart R in the RStudio menu, or by running the following command in the console:

.rs.restartR()
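After the restart, it is worth confirming that the key is visible to R without ever printing it. The sketch below uses only base R; `get_openai_key()` is a hypothetical helper name, not part of any package. If a restart is inconvenient, base R's `readRenviron("~/.Renviron")` reloads the file into the current session, and `rstudioapi::restartSession()` is the documented counterpart to the undocumented `.rs.restartR()`.

```r
# Hypothetical helper: return the key stored in ~/.Renviron, or fail loudly if absent.
get_openai_key <- function(var = "OPENAI_API_KEY") {
  key <- Sys.getenv(var, unset = "")
  if (!nzchar(key)) {
    stop(var, " is not set; add it to ~/.Renviron and restart R.", call. = FALSE)
  }
  key
}

# Report only whether the key is present, never its value
message("OPENAI_API_KEY set: ", nzchar(Sys.getenv("OPENAI_API_KEY")))
```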

Launching the Shiny-based Chat Interface in RStudio

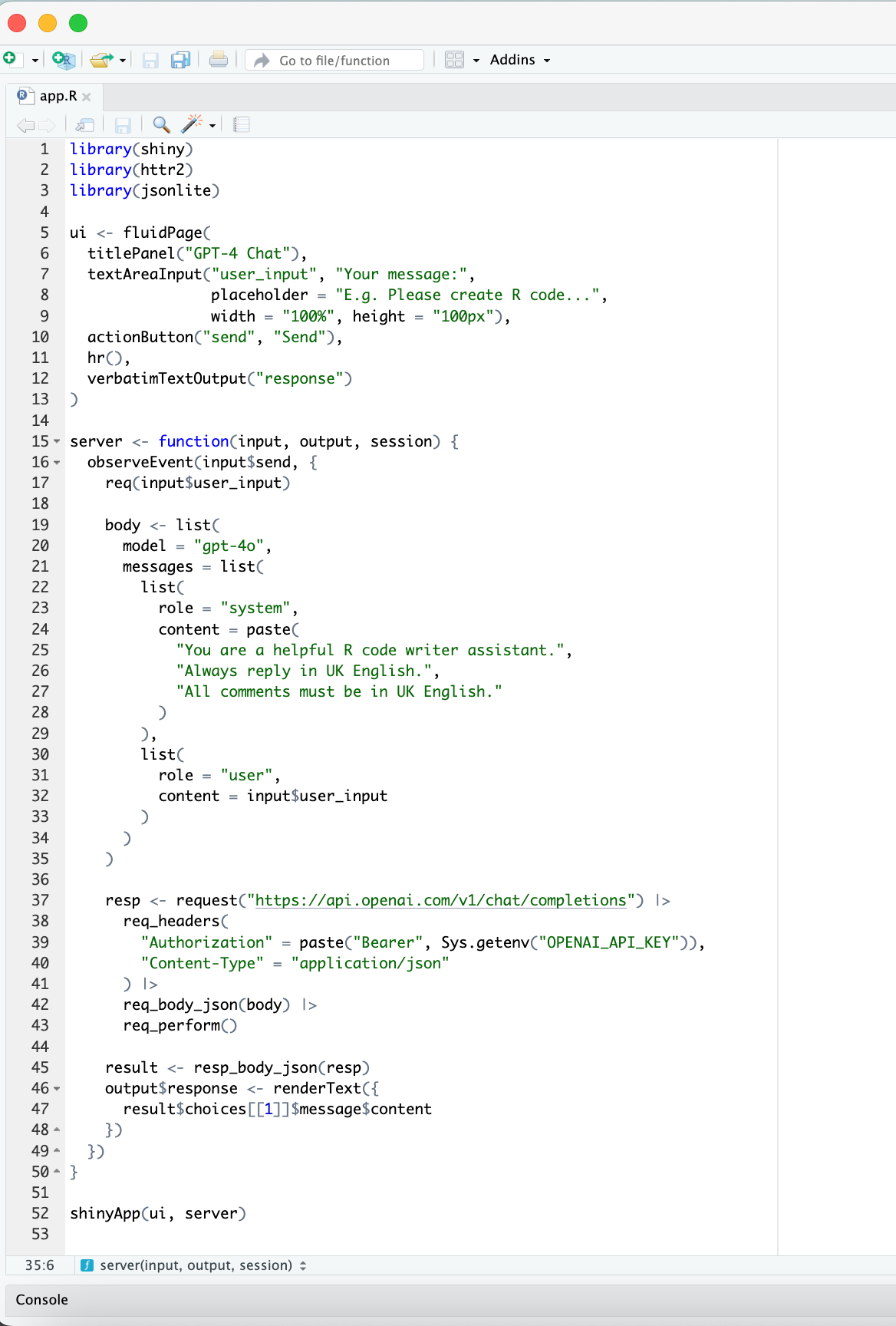

With the API key securely stored and accessible via the environment, we built a simple yet effective Shiny application to enable natural language interaction with the GPT-4 model directly from within RStudio. The application provides a user-friendly chat interface where researchers can enter free-text instructions—such as asking for code to generate a plot—and receive a model-generated R code snippet in response. This facilitates rapid prototyping, code drafting, and teaching use cases, all without leaving the RStudio environment.

The app is built using the {shiny} and {httr2} packages. The interface consists of a text area for user input, a "Send" button, and a response panel that displays the GPT-generated output. The API call is constructed within the server logic and executed when the user submits a prompt; since the app runs in its own R session, the console does not have to wait for the response.

The complete source code of the Shiny application presented above is also available for download as an .R file, ensuring full reproducibility and easy adaptation.
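The downloadable file remains the reference. For readers who want to see how the pieces described above fit together, the following is an independent minimal sketch, not the authors' code. It assumes the OpenAI Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`), the model name `"gpt-4o"`, and a short system prompt; all three are illustrative choices to adjust. `ask_gpt()` is a hypothetical helper name.

```r
# Minimal sketch of a GPT chat app (illustrative; not the authors' downloadable file).
# Uses the native pipe, so R >= 4.1 is assumed.
library(shiny)
library(httr2)

# Hypothetical helper: send one prompt and return the model's text reply.
ask_gpt <- function(prompt, model = "gpt-4o") {
  resp <- request("https://api.openai.com/v1/chat/completions") |>
    req_auth_bearer_token(Sys.getenv("OPENAI_API_KEY")) |>
    req_body_json(list(
      model = model,
      messages = list(
        # Assumed system prompt steering the model towards runnable R code
        list(role = "system",
             content = "You are an R assistant. Reply with runnable R code."),
        list(role = "user", content = prompt)
      )
    )) |>
    req_perform()  # synchronous; errors on HTTP 4xx/5xx
  body <- resp_body_json(resp)
  body$choices[[1]]$message$content
}

ui <- fluidPage(
  titlePanel("GPT assistant"),
  textAreaInput("prompt", "Instruction", rows = 5, width = "100%"),
  actionButton("send", "Send"),
  verbatimTextOutput("response")
)

server <- function(input, output, session) {
  # Recompute only when Send is clicked; req() silently skips empty prompts
  answer <- eventReactive(input$send, {
    req(nzchar(trimws(input$prompt)))
    tryCatch(ask_gpt(input$prompt),
             error = function(e) paste("Request failed:", conditionMessage(e)))
  })
  output$response <- renderText(answer())
}

# The last expression yields the app object, so shiny::runApp("file.R") can serve it
app <- shinyApp(ui, server)
```

Saved as a single `.R` file, this can be served with `shiny::runApp("path/to/file.R")`. Because `req_perform()` blocks the app's own R process while it waits, where that process runs determines whether the console stays free.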

By running the GPT-powered assistant as a local Shiny app, we get a clean, lightweight interface that opens in the browser and keeps interaction smooth and distraction-free. Crucially, because the app is launched in a separate R session, it does not block the R console (calling shiny::runApp() directly in the console would). Inline API calls in a script halt the session until a response is returned; with this Shiny-based approach, the console remains fully available for other tasks while the model generates code in parallel.
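The non-blocking behaviour depends on the app being served by an R process other than the console. One way to arrange this is a background process via {callr}; this is an assumption on our part, since the post does not specify how the app was launched. The sketch also assumes a hypothetical file name, `gpt_chat_app.R`, holding a single-file Shiny app, and a free port 8765. In RStudio, a Background Job running a script that calls `shiny::runApp()` would serve the same purpose.

```r
# Minimal sketch: serve the chat app from a separate R process so the console stays free.
# Assumptions: "gpt_chat_app.R" is a hypothetical file name, port 8765 is free,
# and {callr} and {shiny} are installed.
launch_gpt_app <- function(path = "gpt_chat_app.R", port = 8765L) {
  app_path <- normalizePath(path, mustWork = TRUE)
  proc <- callr::r_bg(
    function(app_path, port) {
      # Runs in the child process: runApp() blocks there, not in the console
      shiny::runApp(app_path, port = port, host = "127.0.0.1",
                    launch.browser = FALSE)
    },
    args = list(app_path = app_path, port = port),
    supervise = TRUE  # kill the child if the parent R session exits
  )
  Sys.sleep(2)  # crude fixed wait for the server to start listening
  # Open the app from the parent session, where RStudio's browser settings apply
  utils::browseURL(sprintf("http://127.0.0.1:%d", port))
  proc
}
```

Usage: `proc <- launch_gpt_app()` returns immediately; `proc$is_alive()` reports whether the app is still serving, and `proc$kill()` stops it when finished.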

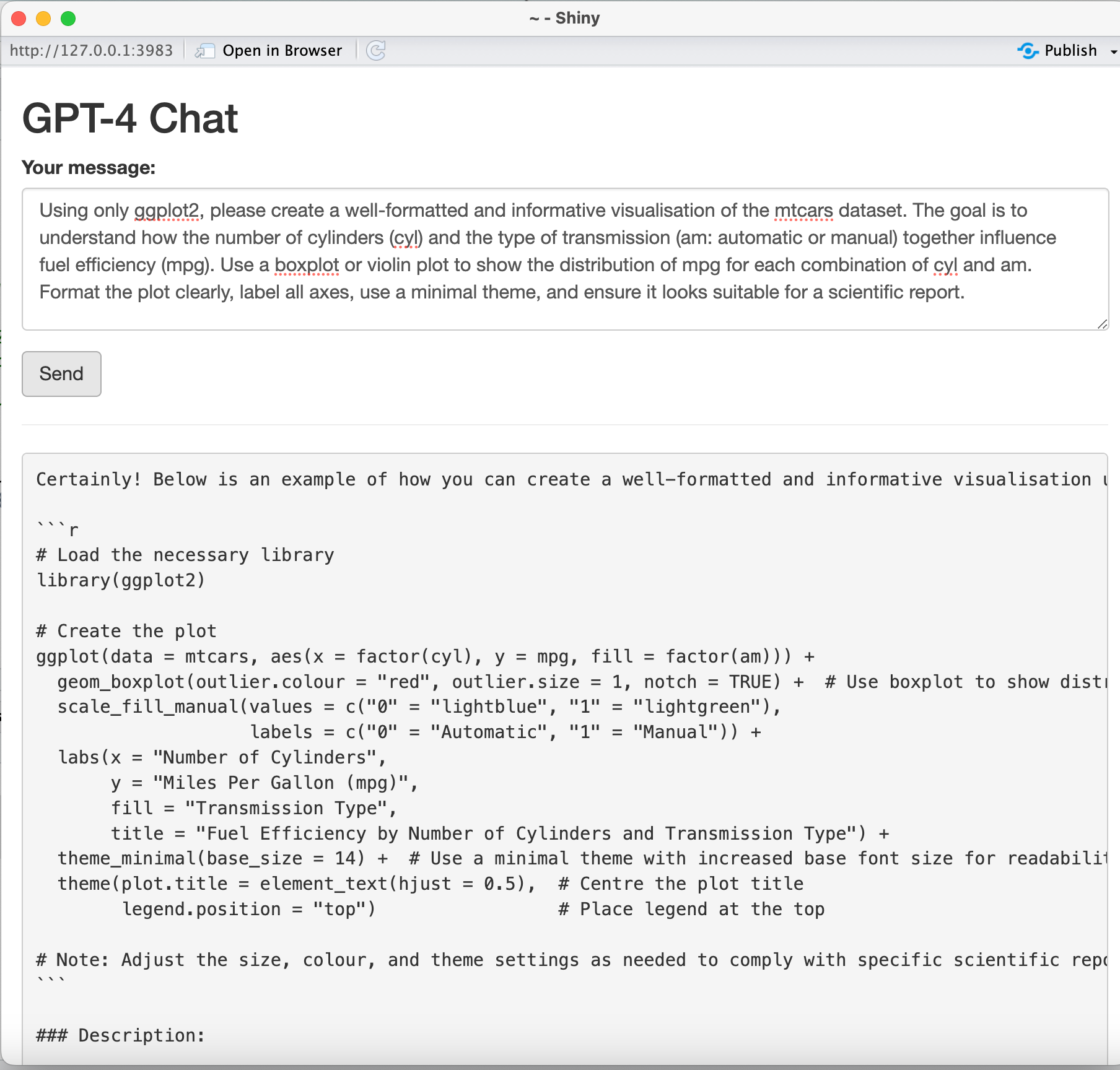

Testing the Interface with a Real Prompt

To evaluate the setup, we submitted a natural-language prompt asking the model to visualise the impact of cylinder count and transmission type on fuel efficiency using the mtcars dataset. As shown in the screenshot, the assistant returned well-structured and directly executable ggplot2 code. The output not only met the formatting criteria we specified but was also ready to run without modification—demonstrating the practical utility of this Shiny-based integration.
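The model's actual code appears only in the screenshot and is not reproduced here. For readers without access to the image, the following is an illustrative sketch of comparable {ggplot2} code for the same prompt; it is not the model's output, and the styling choices (dodged violins, jittered points, `theme_minimal()`) are assumptions.

```r
# Illustrative sketch only; NOT the code returned by the model.
library(ggplot2)

cars <- transform(
  mtcars,
  cyl = factor(cyl),
  # In mtcars, am = 0 is automatic and am = 1 is manual
  transmission = factor(am, levels = c(0, 1), labels = c("Automatic", "Manual"))
)

p <- ggplot(cars, aes(x = cyl, y = mpg, fill = transmission)) +
  geom_violin(position = position_dodge(width = 0.8), alpha = 0.6) +
  # Overlay individual cars, dodged to sit on their matching violin
  geom_point(position = position_jitterdodge(jitter.width = 0.1, dodge.width = 0.8),
             size = 1.5) +
  labs(x = "Number of cylinders", y = "Fuel efficiency (mpg)",
       fill = "Transmission") +
  theme_minimal()

print(p)
```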

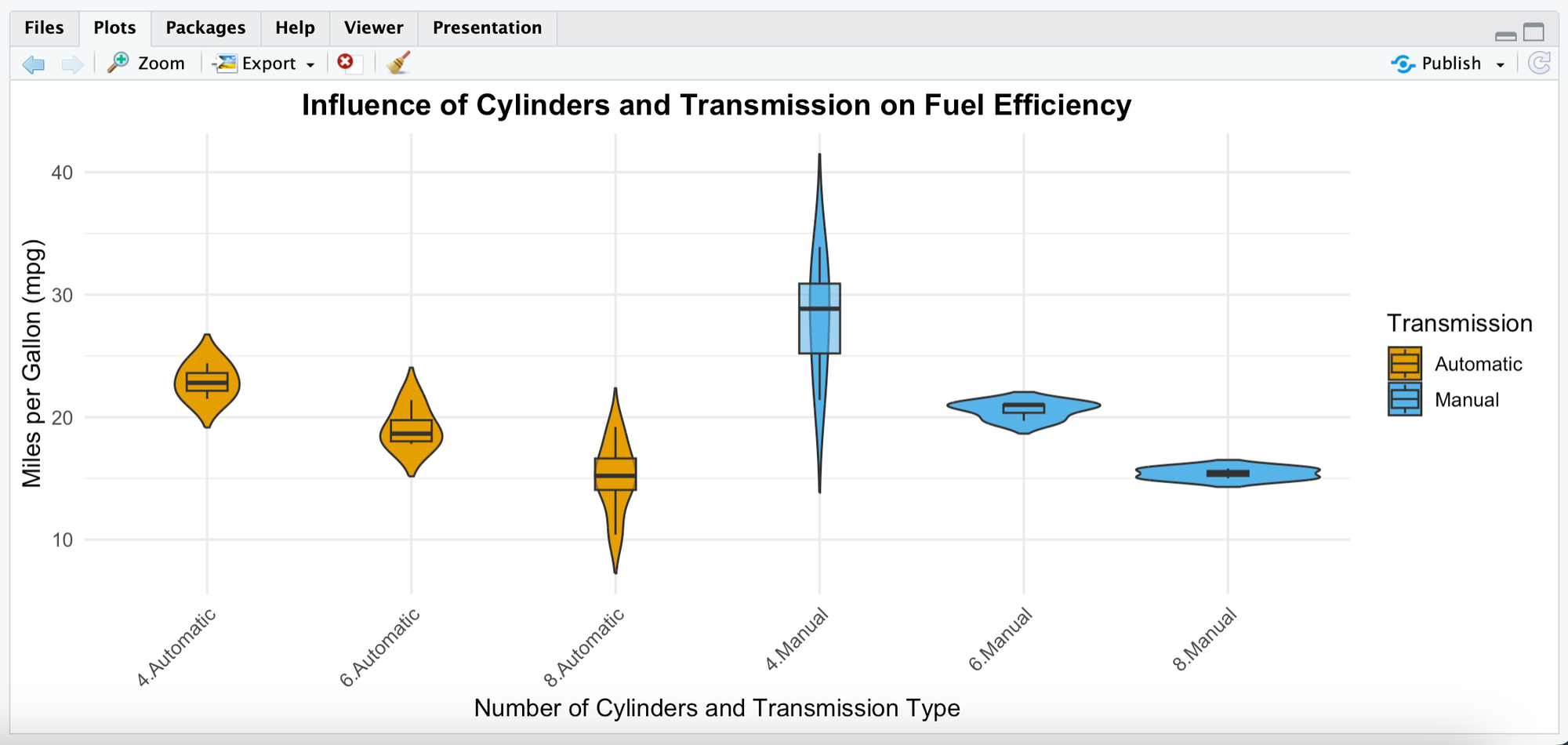

The generated violin plot illustrates how fuel efficiency (measured in miles per gallon) varies across combinations of cylinder number and transmission type in the mtcars dataset. Manual cars with 4 cylinders appear to be the most fuel-efficient, while 8-cylinder vehicles—particularly with automatic transmission—show the lowest mpg values. The visualisation effectively captures both central tendencies and distributional spread, providing a clear overview for comparative analysis.
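Violin shapes can hide small group sizes, so a quick numeric cross-check of this reading is useful. The base-R sketch below tabulates the median mpg and the number of cars for each combination of cylinder count and transmission (in mtcars, `am = 0` is automatic and `am = 1` is manual).

```r
# Median mpg and group size per cylinder/transmission combination (base R only)
med <- aggregate(mpg ~ cyl + am, data = mtcars, FUN = median)
cnt <- aggregate(mpg ~ cyl + am, data = mtcars, FUN = length)

# merge() renames the shared 'mpg' column to mpg_median and mpg_n
summary_tbl <- merge(med, cnt, by = c("cyl", "am"),
                     suffixes = c("_median", "_n"))
summary_tbl$transmission <- ifelse(summary_tbl$am == 1, "Manual", "Automatic")

# Most to least fuel-efficient by median
summary_tbl <- summary_tbl[order(-summary_tbl$mpg_median), ]
print(summary_tbl, row.names = FALSE)
```

On the built-in data, the highest median is 28.85 mpg for 4-cylinder manual cars (n = 8) and the lowest is 15.2 mpg for 8-cylinder automatics (n = 12). The 8-cylinder manual group contains only two cars (median 15.4 mpg), so the shape of its violin should be read with caution.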

Recommendations

This streamlined setup offers researchers a fast, distraction-free way to experiment with code generation, automate repetitive tasks, and prototype visualisations or analyses without leaving RStudio. Its real strength lies in enabling natural language interaction with GPT-4 in a controlled environment. Because it runs as a browser-based Shiny app in a separate R session, the tool stays lightweight and responsive while keeping the R console free for parallel work.

The authors used GPT-4o [OpenAI (2025), GPT-4o (accessed on 27 May 2025), Large language model (LLM), available at: https://openai.com] to generate the output.