As ChatGPT is increasingly used in academic research, understanding how to manage privacy, control data usage, and make informed choices about sharing outputs has become essential. Our previous blog post focused on configuring the ChatGPT interface through settings such as personalisation, memory, and model selection. This follow-up explores tools that help researchers control what is retained, used, or made public. In this post, we introduce three key features within ChatGPT's interface: Temporary Chats for confidential sessions, the ability to opt out of having your data used for model training, and the option to share selected conversations via public links. These features support both privacy-conscious use and transparent collaboration in academic environments.

1. Using Temporary Chats for One-Off, Unsaved Sessions

When testing prompts, preparing examples, or working with material that doesn’t need to be stored or linked to your account, Temporary Chats offer a simple solution.

This feature creates a one-off session that:

- does not save to your chat history,

- does not use or update memory,

- and does not contribute to model training.

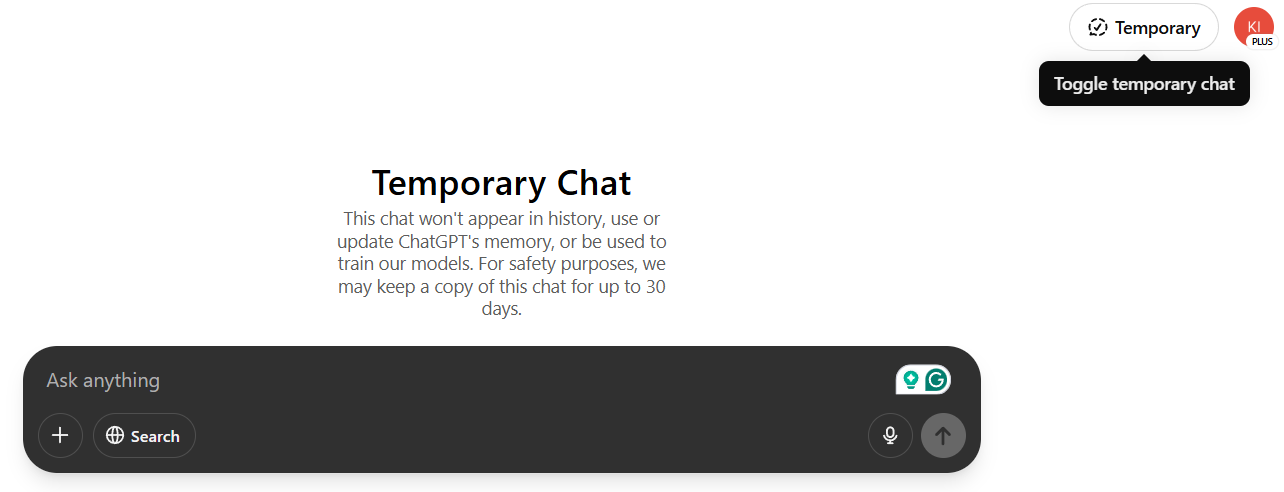

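To start a Temporary Chat, click the “Temporary” button in the top-right corner of the screen. A banner confirms that the chat will not appear in your history, will not use or update memory, and will not be used to train models. Once you close the chat, it is no longer accessible from your account. Note, however, that OpenAI states it may keep a copy of temporary chats for up to 30 days for safety purposes, so a Temporary Chat is not the same as no data being stored at all.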

2. Opting Out of Model Training: Keep Your Conversations Out of Training Data

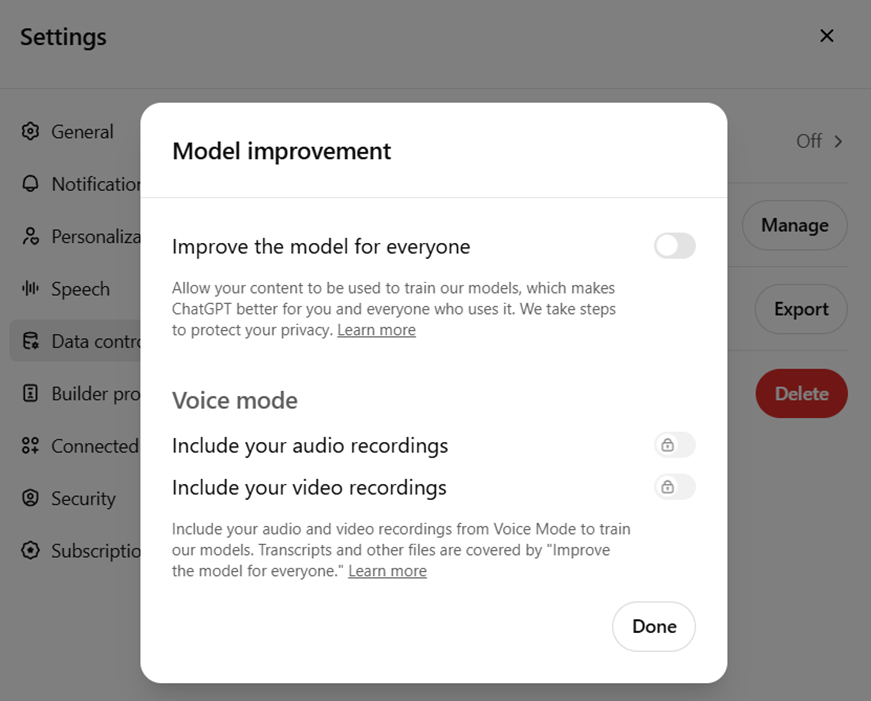

By default, ChatGPT may use your conversations to help improve its models. OpenAI states that it takes steps to reduce the amount of personal information in its training data, but researchers working with sensitive topics or early-stage ideas may still prefer to opt out entirely. Turning this option off excludes your new prompts and responses from future model training and refinement, without affecting ChatGPT's ability to assist you.

You can disable this under: Settings > Data Controls > Improve the model for everyone

This setting applies to your standard (non-temporary) chats; Temporary Chats are already excluded from training. Opting out does not, however, guarantee complete confidentiality, because your conversations are still processed and stored by OpenAI. For short-term or highly sensitive work, combine this setting with Temporary Chat mode and avoid entering personally identifiable information or other sensitive data.

3. Using Shareable Links in ChatGPT

ChatGPT enables users to turn any individual conversation into a public, read-only link. This feature is especially useful for researchers who want to:

- share annotated responses with collaborators,

- include prompt–response examples in teaching materials or publications,

- or reference a documented interaction during peer review or team discussion.

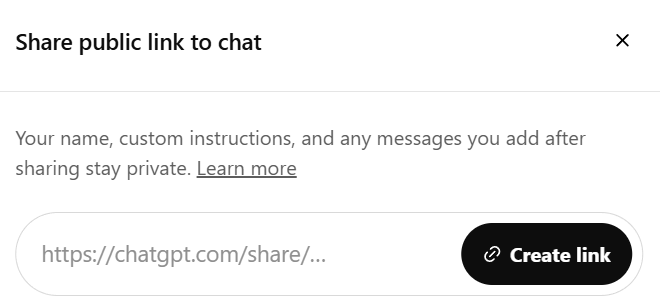

To generate a shareable link, open the relevant conversation and click the “Share” button in the top-right corner of the interface. A preview will appear, allowing you to check and copy the link before distributing it.

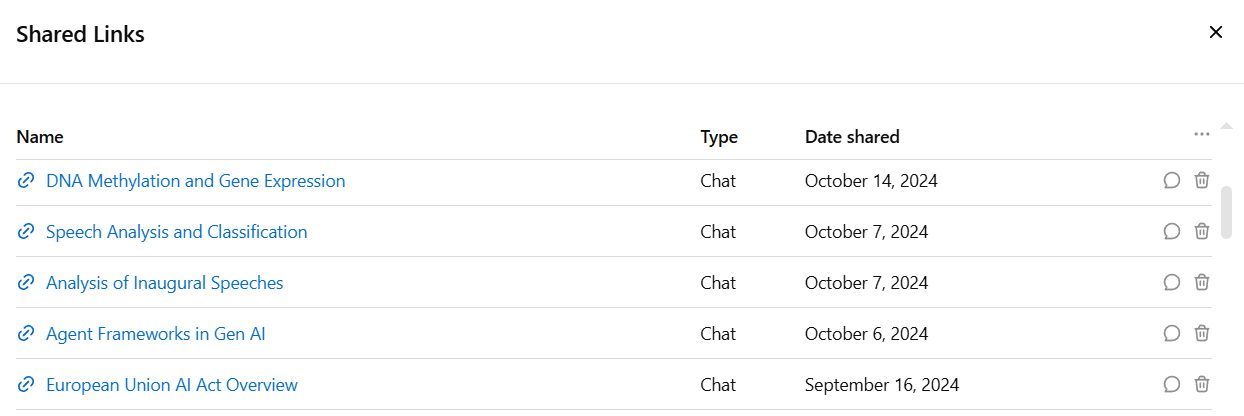

To view or revoke access to your shared chats, navigate to: Settings > Data Controls > Shared links > Manage

The link includes only the selected conversation, as it stood when the link was created; your other chats and account history are not exposed. Anything written in the conversation itself, however, becomes visible to anyone who has the link, so it remains your responsibility to remove confidential or sensitive material before making a conversation public.

Recommendations

ChatGPT offers powerful support for academic work, but using it responsibly requires awareness of how data is handled. Temporary Chats, the option to opt out of model training, and shareable links with access controls give users more control over privacy and visibility.

We recommend:

- Using Temporary Chat for tasks that don’t need to be saved.

- Turning off model training when handling sensitive or unpublished content.

- Carefully reviewing chats before sharing links publicly.

However, even with these precautions, uploading personal or sensitive data, including special categories of data, to generative AI models like ChatGPT is not recommended.

The authors used GPT-4o [OpenAI (2025) GPT-4o (accessed on 25 April 2025), Large language model (LLM), available at: https://openai.com] to generate the output.