Prompt-based methods are becoming increasingly relevant in biomedical text mining, offering flexible ways to perform tasks such as named entity recognition without explicit model training. In this case study, we assess the performance of DeepSeek-V3 on a structured disease mention extraction task using a curated subset of the NCBI Disease Corpus. Building on our previous work—where we transformed the original dataset into a structured CSV format—we created a test set of 30 abstracts from which expert-annotated disease mentions were deliberately removed. To guide the model, we constructed a detailed prompt that included 70 manually annotated training examples, demonstrating the expected output format. DeepSeek-V3 achieved highly accurate results, correctly identifying disease names from both titles and abstracts. This example-driven prompting setup highlights the model’s strong potential for biomedical named entity recognition without any fine-tuning.

Input files

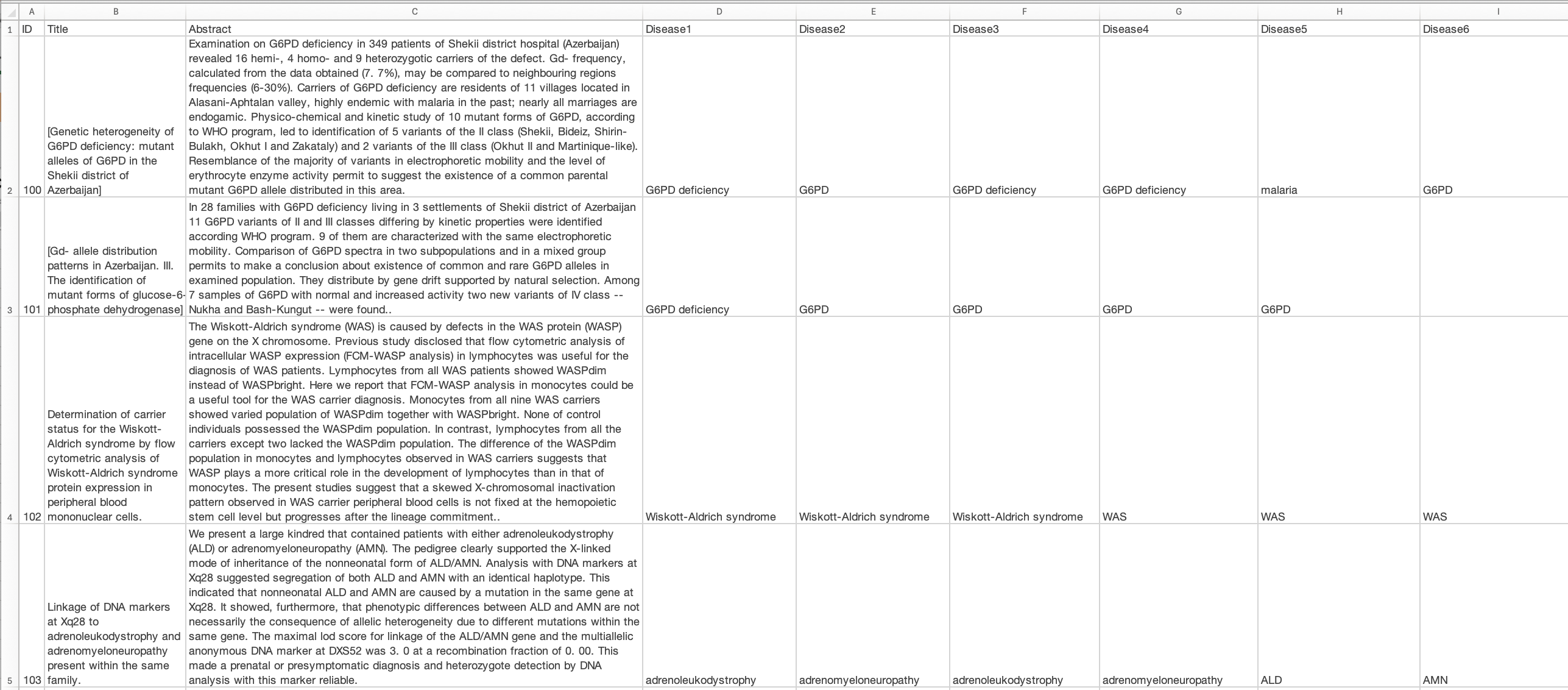

The dataset used in this task is the NCBI Disease Corpus, a manually annotated resource developed by the National Center for Biotechnology Information (NCBI). It contains PubMed titles and abstracts with expert-labelled disease mentions and is widely used for biomedical named entity recognition (NER) benchmarks. For this case study, we relied on a structured version of the corpus previously introduced in our earlier post, where each row contains a unique ID, the title, the abstract, and—where applicable—a series of columns listing the annotated disease names in order of appearance.

We created two subsets for evaluation:

- A training set of 70 rows, including all manually annotated disease mentions, used as in-context examples within the prompt.

- A test set of 30 rows, where the disease annotations were removed. The model’s task was to reconstruct these based on the prompt examples.

Both subsets followed the same structure, enabling direct comparison between human and model-generated outputs.

Prompt

You have access to two Excel files:

NCBI_train.xlsx: a training set with 70 rows. Each row includes an ID, a title, an abstract, and the manually extracted disease mentions from that title and abstract, listed in separate columns (e.g., Disease1, Disease2, etc.).NCBI_test.xlsx: a test set with 30 rows, where each row includes an ID, a title, and an abstract, but no extracted diseases.

Your task is to analyse the training set (NCBI_train.xlsx), learn how disease mentions are identified and formatted, and then apply this knowledge to complete the test set (NCBI_test.xlsx).

For each row in the test set, extract all disease mentions in the order they appear, first from the title, and then from the abstract. These should include both general terms (e.g., “cancer”) and specific expressions (e.g., “familial adenomatous polyposis”, “Duchenne muscular dystrophy”).

Instructions:

- Carefully read the title and extract every disease mention you can identify, in the order they appear.

- Then read the abstract and continue extracting all disease mentions in the order they appear.

- If a disease appears more than once or in different forms, include each instance separately.

- Follow the same output structure and disease annotation logic as seen in the training set.

Return format (for the 30 test rows):

- Column A: ID

- Column B: Title

- Column C: Abstract

- Columns D and onwards: Disease1, Disease2, Disease3, ..., listing each disease mention in order of appearance (first from the title, then from the abstract)

Keep exactly one row per abstract. Your output should closely match the structure of the training file.

Output

DeepSeek-V3 performed exceptionally well on the disease mention extraction task. When prompted with 70 structured examples from the training set, the model was able to recover the vast majority of disease entities in the 30-row test set with high precision and recall. It correctly identified both general and specific disease names from titles and abstracts, maintaining the expected order and granularity in most cases.

Although the model was not fine-tuned and relied solely on prompt guidance, its ability to generalise across various biomedical contexts was striking. Particularly impressive was its handling of abstracts containing a high number of disease mentions—for instance, in entry #119, where it successfully extracted 22 distinct disease-related expressions, including rare conditions such as Wiskott-Aldrich syndrome and co-morbidities like Kaposi sarcoma and T-cell lymphoma.

Some minor deviations from the human annotations were observed. For example:

- In entry #103, the model recognised

ALD/AMNas a single disease expression due to the use of the slash, whereas human annotators split it into two entities: ALD (adrenoleukodystrophy) and AMN (adrenomyeloneuropathy). Despite this, the model did extract both terms individually elsewhere in the abstract. - In entry #108, the model extracted phrases like human cancers rather than the more general cancers chosen by annotators, suggesting a slightly broader context window in some cases.

- Similarly, in entry #128, the phrase progressive mental retardation was selected by the model instead of just mental retardation. While this doesn't affect the correctness of the extraction, it illustrates the model’s tendency to include adjacent qualifiers.

Limitations

While DeepSeek-V3 demonstrated highly accurate performance on the disease mention extraction task, several practical limitations were observed.

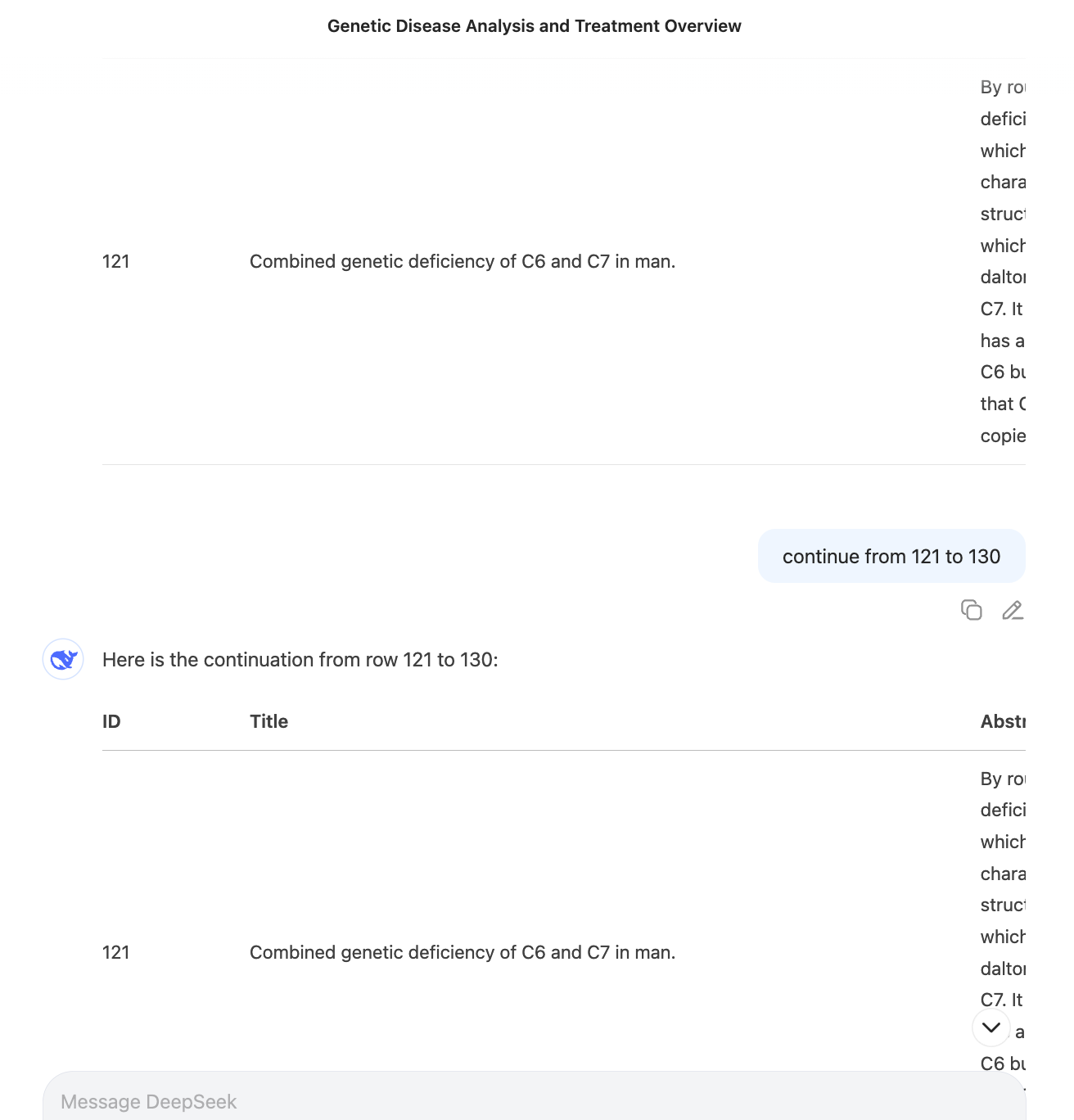

Firstly, the model was unable to return the full output for the 30-row test set in a single response, despite the input being well within context limits. In practice, it returned only the first portion (up to row 121) and required a separate follow-up prompt to complete the rest. This suggests internal output length constraints that can limit usability when working with even moderately sized datasets.

Secondly, the model’s performance proved to be highly sensitive to the number of examples provided in the prompt. When only 3 to 5 sample abstracts were included as few-shot guidance, the model extracted very few disease mentions from the test set—missing many entities that were otherwise straightforward. It was only when we increased the prompt to include around 70 training examples that DeepSeek-V3 achieved the expected extraction quality. This highlights the model’s dependency on rich in-context supervision, especially for specialised biomedical tasks with complex terminologies and multi-entity structures.

Recommendations

To achieve reliable results in prompt-based biomedical NER tasks, we recommend using a substantial number of in-context examples—our experiments showed that 3–5 examples were insufficient, while 70 well-structured examples led to high accuracy. The prompt should closely mirror the desired output format to help the model generalise effectively. Users should also be aware that even with short inputs, models like DeepSeek-V3 may truncate long outputs and require manual continuation. Additionally, the model tends to extract longer surface forms (e.g. “progressive mental retardation”), which may differ from human annotation conventions. Careful prompt design and post-processing are essential for robust and scalable extraction.

The authors used DeepSeek-V3 [DeepSeek (2025) DeepSeek-V3 (accessed on 1 June 2025), Large language model (LLM), available at: https://www.deepseek.com] to generate the output.