Image-Based Handwriting Recognition: Comparing AI Model Accuracy Across Languages

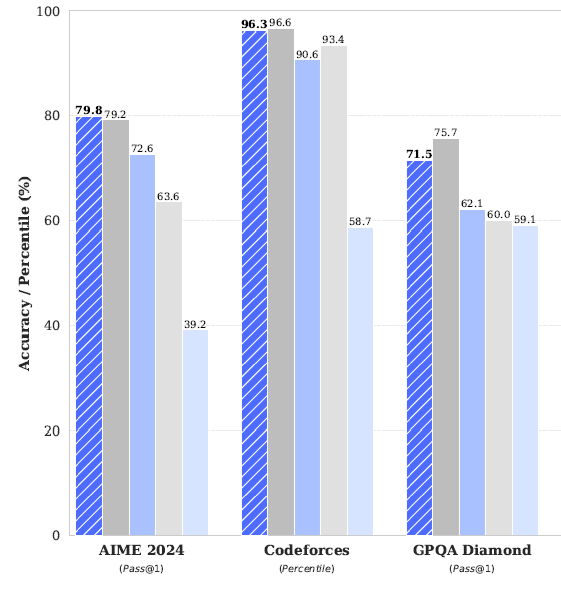

In this follow-up experiment, we tested how generative AI models handle handwritten text when it is provided as image input rather than as a PDF. Unlike in the earlier test, all models were able to process the images and return readable outputs.

Performance Comparison

🏆 Champion as of October 2: Copilot, Gemini 2.5 Pro,