Claude Projects recently introduced a Retrieval-Augmented Generation (RAG) feature that extends its capabilities beyond the standard context window. Unlike traditional approaches that require custom vector databases and API integration, Claude's implementation offers a streamlined, no-code solution for handling large knowledge bases directly within the Projects interface. In this post, we evaluate the feature's performance by testing it against our entire PromptRevolution article archive—113 posts spanning multiple AI models, techniques, and use cases.

Introduction

As discussed in our earlier technical implementation, building a functional RAG system typically requires substantial technical overhead: document preprocessing, vectorisation pipelines, embedding storage, and custom retrieval logic. Claude Projects' new RAG feature aims to eliminate these barriers by handling document indexing automatically when uploaded files exceed the model's context capacity.

We tested whether this built-in approach could deliver accurate, source-grounded answers without requiring any code, external tools, or manual configuration.

Tool Overview

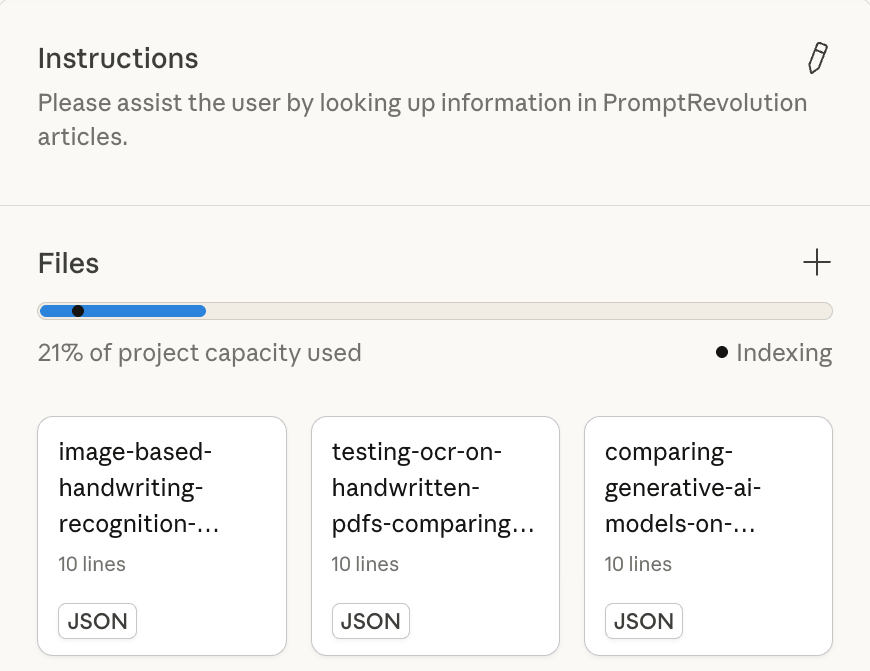

Claude Projects now includes automatic RAG activation when the uploaded knowledge base exceeds what can fit within the model's context window. The feature is indicated by an "Indexing" marker in the interface, signalling that Claude will use semantic retrieval rather than keeping all documents in active memory.

Key characteristics of the implementation:

- Automatic activation: No configuration required—RAG is triggered based on upload size

- Transparent processing: The interface clearly indicates when RAG is active

- Fast indexing: Document processing completes within seconds to minutes

- Memory efficient: Our 113-article archive used only 21% of available project storage

- Model and tool access: Currently available with all Claude models and tools available in the chat interface. We used Opus 4.5 for this task.

Unlike custom RAG implementations that require managing vector databases and embedding models separately, Claude handles the entire retrieval pipeline internally.

Testing Approach

We created a Claude Project named "PR Article Lookup" and uploaded all past articles from PromptRevolution in JSON format. Each file was named using the article's URL slug.

The project was configured with a single instruction:

Please assist the user by looking up information in PromptRevolution articles.

This minimal setup allowed us to assess Claude's retrieval accuracy without additional prompt engineering or search refinement.

Testing Scenarios

Test 1: Specific Model Identification

We first tested whether Claude could accurately identify technical details from a specific article:

Which model did we use in the Building a Retrieval-Augmented Generation (RAG) System for Domain-Specific Document Querying article?

Claude's response:

"Based on the PromptRevolution article, the RAG system was built using OpenAI's gpt-4-1106-preview model, accessed via the Chat API. The article explains that this model version was chosen for its: - Strong performance in factual reasoning - Consistent output style - Extended context window capacity (essential when working with multi-paragraph content from policy documents). The temperature was set to zero to ensure deterministic, reproducible results aligned with the source materials."

This response was entirely accurate and included contextual details about why that specific model was chosen—information that required understanding the article's content beyond simple keyword matching.

Test 2: Concept-Based Article Discovery

Our second test assessed Claude's ability to identify relevant articles based on a technical concept, even when that concept wasn't explicitly present in file names:

Please list all articles where we used API.

Claude successfully identified all ten articles involving API usage:

- Unlocking Large Language Models via API: Capabilities, Access, and Practical Considerations

- Building a Retrieval-Augmented Generation (RAG) System for Domain-Specific Document Querying

- Zero-Shot Stance Classification with DeepSeek: An API-Based Experiment

- Automating Plant Disease Detection at Scale: From Prompt Limitations to a High-Accuracy API Workflow with GPT-4o

- Automated Text Extraction from Scanned PDFs with the Mistral OCR API

- Integrating OpenAI's GPT API into RStudio with Shiny: Real-Time Code Generation from Natural Language

- Step-by-Step Guide to Integrating GPT and Claude into Google Docs for Custom AI-Automated Editing

- Benchmarking Claude 4 Sonnet and GPT-4o for Brain MRI Image Labelling: Comparing Chat Interface and API Results

- Prompt-Driven Video Analysis of Animal Behaviour Using Gemini 2.5 Pro in Google AI Studio and via API

- Building Intelligent Tool Use with the OpenAI API: A Practical Implementation Guide

Importantly, two of these articles' filenames (derived from their URL slugs) do not explicitly contain "API"—meaning Claude successfully performed semantic search rather than simple pattern matching. This demonstrates genuine content-based retrieval rather than superficial filename scanning.

Recommendations

Claude Projects' RAG feature represents a significant step forward in making retrieval-augmented generation accessible to non-technical users. The combination of automatic activation, fast processing, and accurate semantic search makes it particularly well-suited for:

- Rapid knowledge base deployment where speed matters more than customisation

- Internal documentation systems where users need quick, accurate answers without citations

- Research exploration when working with large document collections

- Prototype development before investing in custom RAG infrastructure

One observed limitation of the feature is that the output currently does not provide claim-specific source citations. For use cases requiring explicit citations – as well as more precise control over retrieval logic, or integration with existing systems – custom RAG implementations (like our Horizon Navigator) could remain the better choice.

The feature's performance on our 113-article test confirms that Claude's internal RAG implementation can handle substantial document collections with minimal setup—delivering accurate, context-aware responses that go well beyond simple keyword matching.

The authors used Claude Opus 4.5 [Anthropic (2025) Claude Opus 4.5, Large language model (LLM), available at: https://claude.ai] to generate the output.