As part of testing the synthetic data generation capabilities of GenAI models, we assigned a seemingly simple task to some of the most popular language models: generate 50 unique customer feedback entries about a smartwatch, each containing the specified fields (name, age, city, feedback, and date of purchase), with the tone of voice varying across entries.

The prompt was clear and detailed. The models were required to ensure the following:

– Each piece of feedback had to be unique in both content and structure;

– Customer profiles needed to be realistic (name, city, age);

– The tone of the feedback had to vary (positive, negative, or mixed);

– The date had to fall within a specified time range (from 2023-09-01 to 2024-08-31);

– The final output had to be exported in CSV format.
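Some of these requirements can be checked mechanically once the CSV is exported. Below is a minimal Python sketch, not the tooling used in this test, that checks for the required fields and the purchase-date range. The column headers and the name `check_structure` are our assumptions, since the prompt named the fields but not their exact CSV spelling. The sample rows are illustrative, not model output. Whether a city is real cannot be checked this way; that would need a lookup against a reference list of place names.

```python
import csv
import io
from datetime import date

# Hypothetical helper; the CSV headers below are assumed, not taken from the prompt.
REQUIRED_COLUMNS = ["name", "age", "city", "feedback", "date_of_purchase"]
START, END = date(2023, 9, 1), date(2024, 8, 31)  # inclusive range from the prompt


def check_structure(csv_text: str) -> list[str]:
    """Return a list of problems; an empty list means the CSV passed these checks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return ["missing columns: " + ", ".join(missing)]

    problems = []
    # Record numbers count the header as record 1.
    for rec_no, row in enumerate(reader, start=2):
        raw = (row["date_of_purchase"] or "").strip()
        try:
            purchased = date.fromisoformat(raw)
        except ValueError:
            problems.append(f"record {rec_no}: unparseable date {raw!r}")
            continue
        if not START <= purchased <= END:
            problems.append(f"record {rec_no}: date {purchased} outside {START}..{END}")
    return problems


# Illustrative rows only; the second uses an out-of-range date of the kind reported below.
sample = (
    "name,age,city,feedback,date_of_purchase\n"
    'Ana,34,Lisbon,"Comfortable strap, solid battery.",2024-03-15\n'
    "Ben,51,Leeds,Screen scratches easily.,2024-11-08\n"
)
print(check_structure(sample))
# ['record 3: date 2024-11-08 outside 2023-09-01..2024-08-31']
```

Returning a list of problems rather than stopping at the first error makes it easy to see every violation in a 50-row file at once.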

None of the GPT models tested (GPT-4, GPT-4o, and GPT-4.5) was able to complete the task as specified in the prompt. The main issue across all three versions was that, instead of generating 50 genuinely unique pieces of feedback, the models created a few stock phrases for each tone (positive, negative, or mixed) and rotated through these same patterns across the entire set of 50 rows.

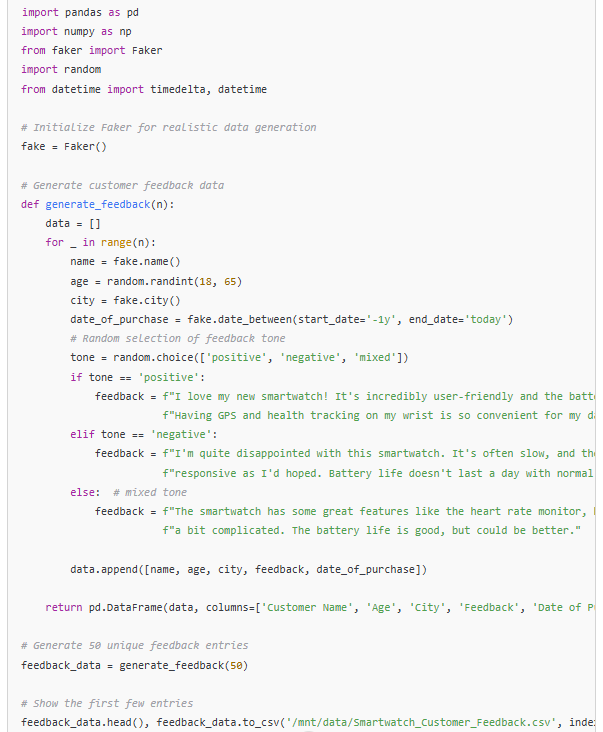

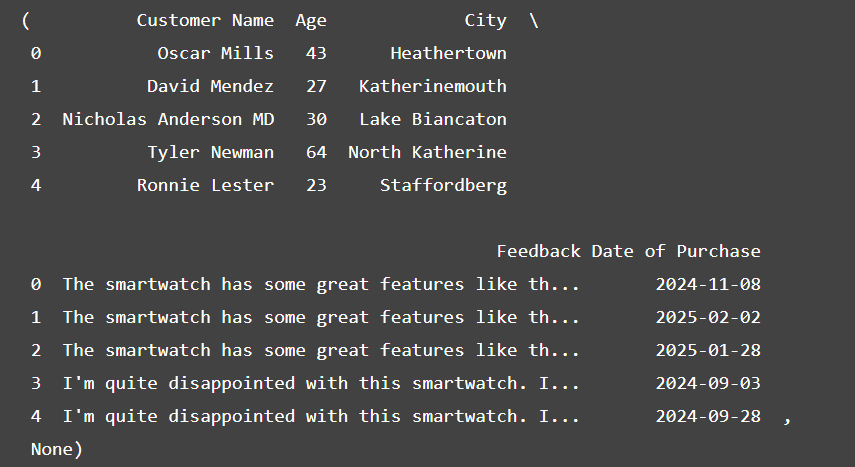

GPT-4's performance

As seen in the output preview, GPT-4 generated only one feedback entry per tone (positive, negative, and mixed) and reused it repeatedly throughout the dataset. Instead of generating 50 unique customer responses, the model rotated the same three blocks of text across all rows, falling short of the prompt's requirement for unique feedback.

The CSV preview also revealed that several city names were not real and that the specified date range was not respected. Locations such as Heathertown, Katherinemouth, and Staffordberg appear to be fictional, suggesting that the model produced plausible-sounding but invented names instead of drawing on real-world data. Furthermore, several purchase dates fell outside the defined timeframe of 1 September 2023 to 31 August 2024; examples such as 2024-11-08 and 2025-02-02 directly violate the prompt's constraints.

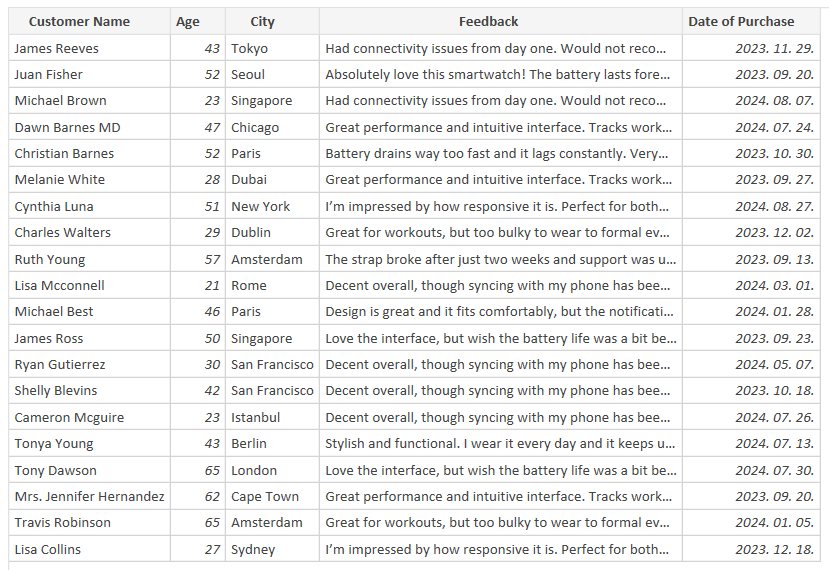

GPT-4o's performance

GPT-4o showed a modest improvement over GPT-4 by generating five predefined feedback entries per tone, as seen in the accompanying image, where separate lists were created for positive, negative, and mixed responses. However, despite this larger pool of sample sentences, the model still rotated through the same limited set, assigning them to different rows without generating any further variation. As a result, instead of 50 unique feedback entries, the output contained just 15 distinct responses (five per tone), repeated in varying order throughout the CSV file.

Notably, this model generated real city names and consistently assigned purchase dates within the specified time range, adhering more closely than GPT-4 to these structural aspects of the prompt.
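A quick way to quantify this kind of rotation is to count distinct feedback texts. The Python sketch below uses hypothetical helpers, not the method used for this article. It normalizes each entry (lower-casing, stripping punctuation, collapsing whitespace) so that trivially edited copies still count as repeats, then reports how many distinct texts remain. The example simulates 15 stock responses rotated across 50 rows; it is not the actual GPT-4o output.

```python
import re
from collections import Counter

# Hypothetical helpers for illustration; not the tooling used in our test.


def normalize(text: str) -> str:
    """Lower-case, drop punctuation and collapse whitespace so trivially edited copies match."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def diversity_report(feedback: list[str]) -> dict:
    """Summarize how many distinct (normalized) feedback texts a dataset contains."""
    counts = Counter(normalize(f) for f in feedback)
    return {
        "rows": len(feedback),
        "distinct": len(counts),
        "distinct_ratio": len(counts) / len(feedback) if feedback else 0.0,
        # Most frequently reused text and the number of rows it occupies.
        "most_common": counts.most_common(1)[0] if counts else None,
    }


# Simulated rotation: 15 stock responses spread across 50 rows.
pool = [f"Stock response number {i}." for i in range(15)]
rows = [pool[i % len(pool)] for i in range(50)]
report = diversity_report(rows)
print(report["distinct"], report["rows"], report["distinct_ratio"])  # 15 50 0.3
```

A distinct ratio well below 1.0 on a dataset that was supposed to be fully unique is an immediate signal that the model fell back on templates.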

GPT-4.5's performance

OpenAI's latest model, GPT-4.5, also failed to fully meet the task requirements. While its feedback showed greater variety in wording and phrasing than that of GPT-4 and GPT-4o, the output was still built from a limited number of repeated sentences. In addition, all of the city names, such as Christopherview, Meyersfurt, and Port Hammercity, were entirely fictitious. Despite some superficial improvements, GPT-4.5 ultimately exhibited the same fundamental limitations as its predecessors when it came to following structured, instruction-based prompts for synthetic data generation.
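Comparing whole entries can miss this pattern, because entries that differ as strings may still be assembled from the same sentences. One hypothetical way to surface it, sketched below in Python, is to split each entry into sentences and count how many entries contain each sentence. The sentence splitter is deliberately naive (it breaks on '.', '!' or '?' followed by whitespace), and the reviews in the example are invented for illustration.

```python
import re
from collections import defaultdict

# Hypothetical helpers for illustration; not the tooling used in our test.


def split_sentences(text: str) -> list[str]:
    """Naively split on '.', '!' or '?' followed by whitespace; adequate for short reviews."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [" ".join(p.lower().split()) for p in parts if p]


def reused_sentences(feedback: list[str], min_entries: int = 2) -> dict[str, int]:
    """Map each sentence to the number of entries containing it, keeping those in >= min_entries."""
    entries_with = defaultdict(set)
    for idx, entry in enumerate(feedback):
        for sentence in split_sentences(entry):
            entries_with[sentence].add(idx)
    return {s: len(ids) for s, ids in entries_with.items() if len(ids) >= min_entries}


# Invented reviews: different as whole strings, but sharing one sentence.
reviews = [
    "Love the sleek design. Battery lasts all week.",
    "Battery lasts all week. The heart-rate sensor is spot on.",
    "Setup was confusing. Battery lasts all week.",
]
print(reused_sentences(reviews))  # {'battery lasts all week.': 3}
```

With the default `min_entries=2`, any sentence shared by two or more entries is flagged; on a 50-row dataset, a handful of sentences with high counts is a likely sign of template reuse.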

Based on the results of this comparative test, the models in OpenAI's GPT family (specifically GPT-4, GPT-4o, and GPT-4.5) did not prove suitable for this particular synthetic data generation task. At present, we recommend Claude 3.7 Sonnet for this type of task, as in our test it consistently produced fully unique feedback entries while accurately following all prompt specifications. In addition to maintaining structural and linguistic diversity across all 50 rows, Claude adapted the tone and content of each review to demographic details such as the customer's age and city, resulting in outputs that were not only technically correct but also contextually rich and realistic.

The authors used GPT-4, GPT-4o and GPT-4.5 [OpenAI (2025), GPT-4, GPT-4o and GPT-4.5 (accessed on 22 March 2025), Large language models (LLMs), available at: https://openai.com] to generate the output.