High-quality feedback is essential for researchers aiming to improve their work and navigate the peer review process more effectively. Ideally, such feedback would be available before formal submission—allowing authors to identify the strengths and weaknesses of their research early on. This is precisely the promise of OpenReviewer, an automated reviewing tool based on the LLaMA-OpenReviewer-8B model, which generates structured peer review reports following standard academic templates. To critically evaluate its reliability, we tested the system on two contrasting cases: first, our own recently published article in the Social Science Computer Review, a well-ranked and peer-reviewed journal; and second, Ian Goodfellow’s landmark 2014 paper introducing Generative Adversarial Networks, widely considered a gold standard in machine learning research. In both cases, OpenReviewer delivered the same verdict: "reject, not good enough".

OpenReviewer

Using Llama-OpenReviewer-8B - Built with Llama

OpenReviewer is an open-source system powered by LLaMA-OpenReviewer‑8B, an 8 billion‑parameter language model meticulously fine-tuned on 79,000 expert reviews from top-tier Machine Learning conferences like ICLR and NeurIPS. The system allows users to upload a research paper in PDF format. By default, it follows the ICLR 2025 review structure, although the template can be modified as needed. It analyses the full content of the paper, including mathematical expressions, tables and narrative sections, and generates structured feedback that mimics the tone and format of real-world conference reviews. Rather than replacing human reviewers, OpenReviewer is intended to help researchers receive early-stage, critical feedback on their work—ideally before formal submission—thereby supporting iterative improvement and better preparedness for peer review.

The reviewer is asked to:

- Summarise the paper and its contributions (Summary),

- Assess the Soundness of the technical claims and methodology on a scale from 1 (poor) to 4 (excellent),

- Evaluate the Presentation quality (clarity, structure, style) on the same 1–4 scale,

- Judge the paper’s overall Contribution to the field (importance, novelty, relevance), also on a 1–4 scale,

- Provide detailed Strengths and Weaknesses,

- Offer Questions and Suggestions for the authors,

- Optionally flag the paper for Ethics Review, and

- Assign a final Overall Rating between 1 (strong reject) and 10 (strong accept).

Overall Rating uses the following 6-point scale:

1: Strong reject

3: Reject, not good enough

5: Marginally below the acceptance threshold

6: Marginally above the acceptance threshold

8: Accept, good paper

10: Strong accept, should be highlighted at the conference

Case Study: How OpenReviewer Evaluated a Peer-Reviewed Article

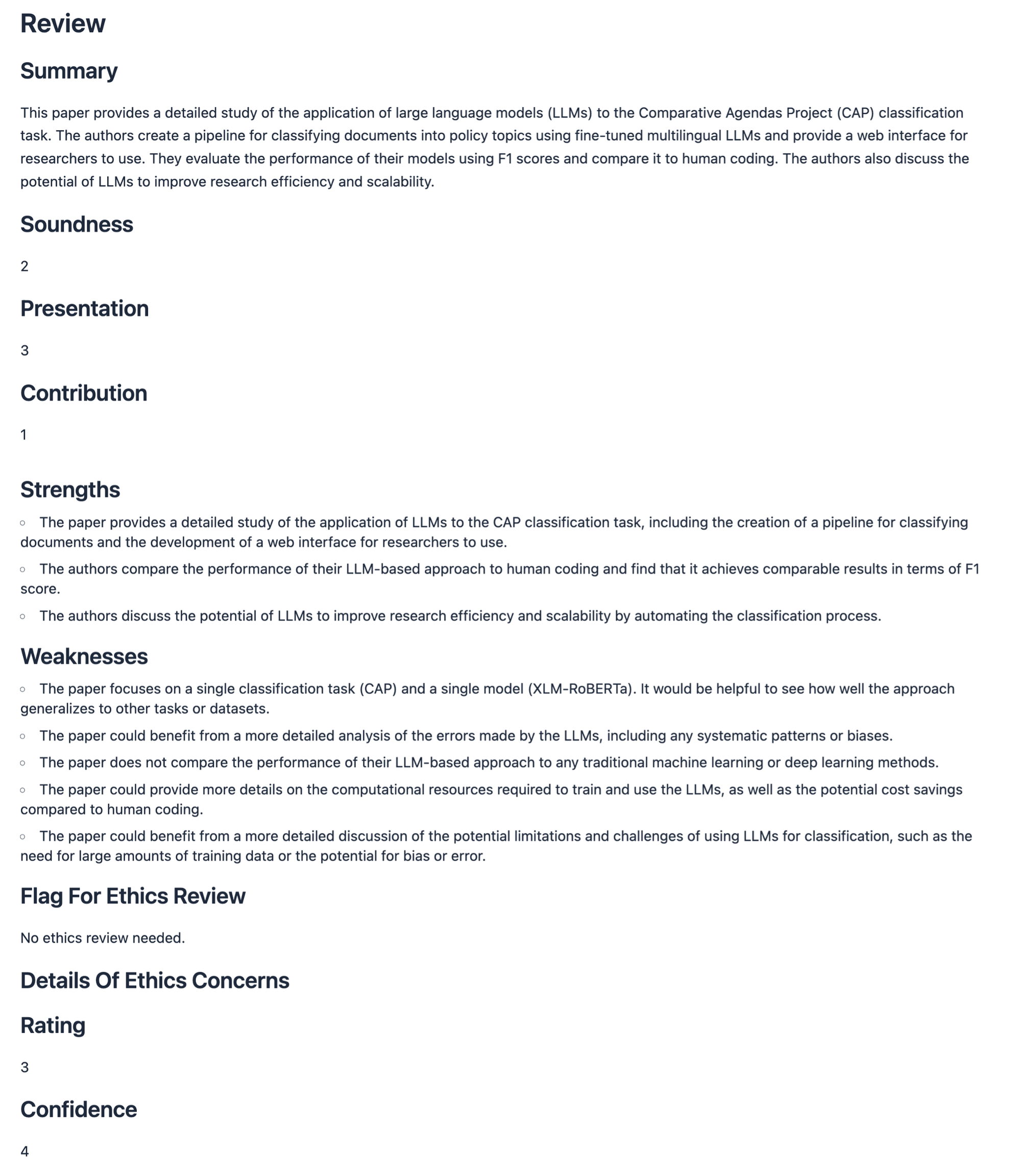

As a first test case, we submitted a published article (Sebők, Máté, Ring, Kovács, Lehoczki, 2024) to OpenReviewer. The paper, titled “Leveraging Open Large Language Models for Multilingual Policy Topic Classification: The Babel Machine Approach”, appeared in the highly ranked journal Social Science Computer Review after undergoing rigorous peer review. Despite this, OpenReviewer gave the paper an overall score of 3 – corresponding to “Reject, not good enough” on the ICLR scale.

While the AI-generated review included a reasonably accurate summary and a clear evaluation of presentation quality, it assigned a contribution score of just 1 out of 4, implying that the work was seen as offering little or no added value to the field. This result starkly contrasts with its acceptance in a D1 journal by expert reviewers familiar with large language models and policy-related text classification tasks. If the paper’s contribution had genuinely been as minimal as the AI reviewer suggests, it is unlikely it would have passed editorial and peer scrutiny at a leading outlet in the field.

Cross-Validation with a Benchmark Publication: Testing the System on Ian Goodfellow’s GANs Paper

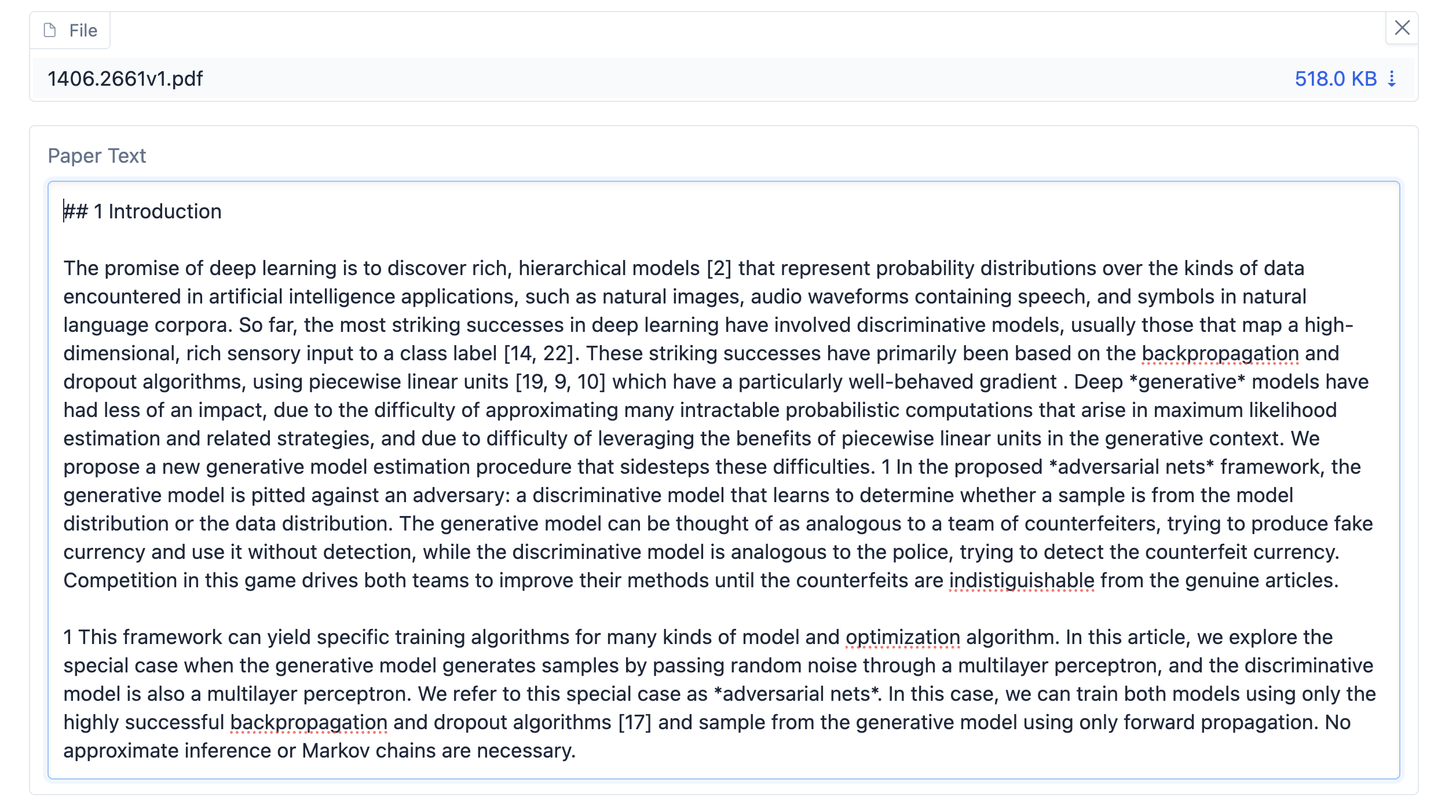

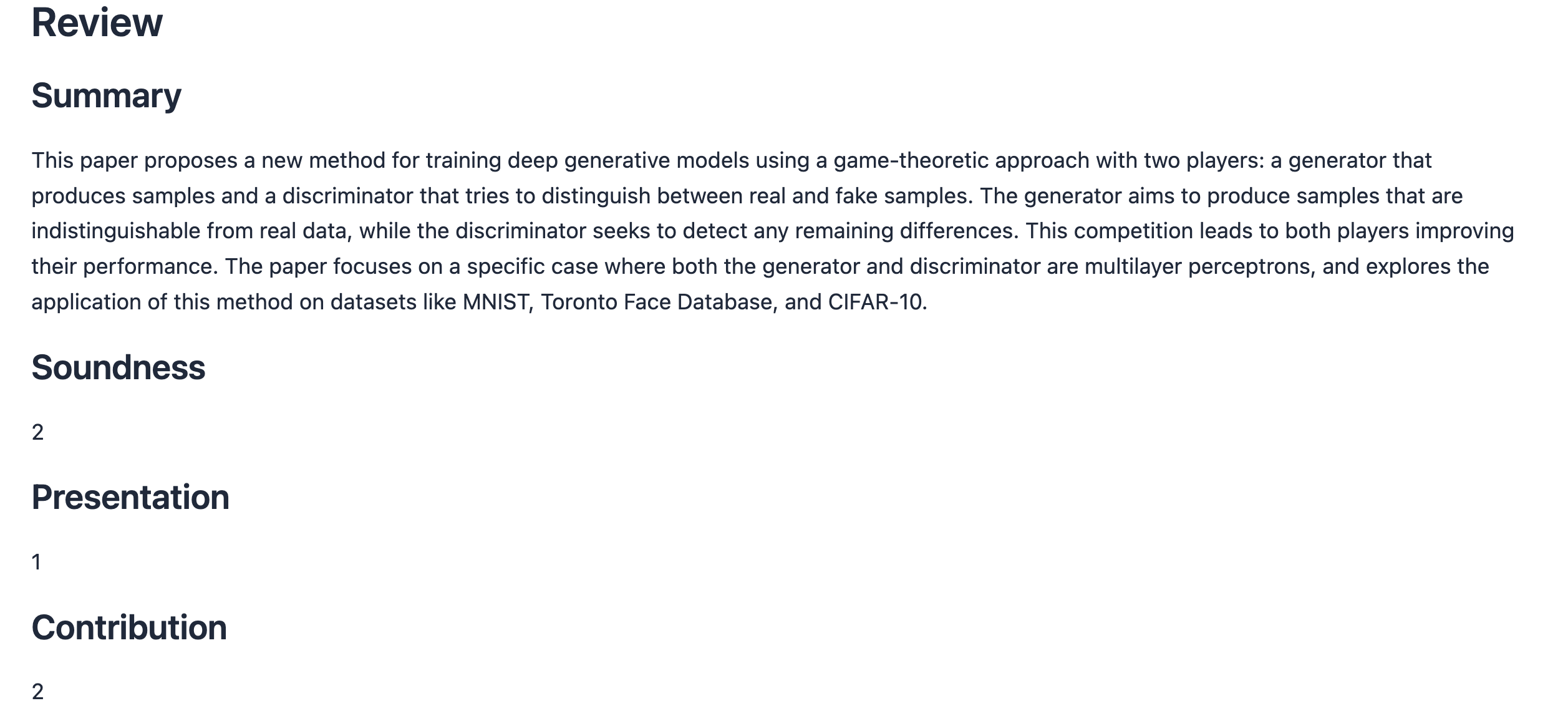

To ensure that our concerns about the system’s evaluation were not driven by author bias or dissatisfaction with the review of our own paper, we submitted a widely acknowledged benchmark publication for comparison: Ian Goodfellow et al.’s 2014 paper on Generative Adversarial Networks (GANs). This paper is considered a foundational work in modern AI research and has been cited extensively across disciplines. By subjecting such a high-impact article to the same review process, we aimed to test whether the system can appropriately recognise and assess contributions that are widely regarded as transformative.

The outcome likewise raises concerns about the system’s capacity to reflect scholarly consensus or assess the true significance of a contribution. Despite the GANs paper by Ian Goodfellow et al. being a landmark publication in machine learning, the model rated it poorly: 2 for soundness, 3 for presentation, and 2 for contribution, with an overall score of 3 (“reject, not good enough”). The AI-generated review criticised the paper’s clarity, organisation, and lack of comparative analysis—an assessment at odds with its recognised foundational impact on the field.

As an additional consistency check, we resubmitted the GANs paper by Ian Goodfellow et al. after a system update. Interestingly, the model’s evaluation became even more critical. While the original submission received a presentation score of 3, the updated review downgraded it to 1, suggesting a further decline in the model’s assessment of clarity and structure.

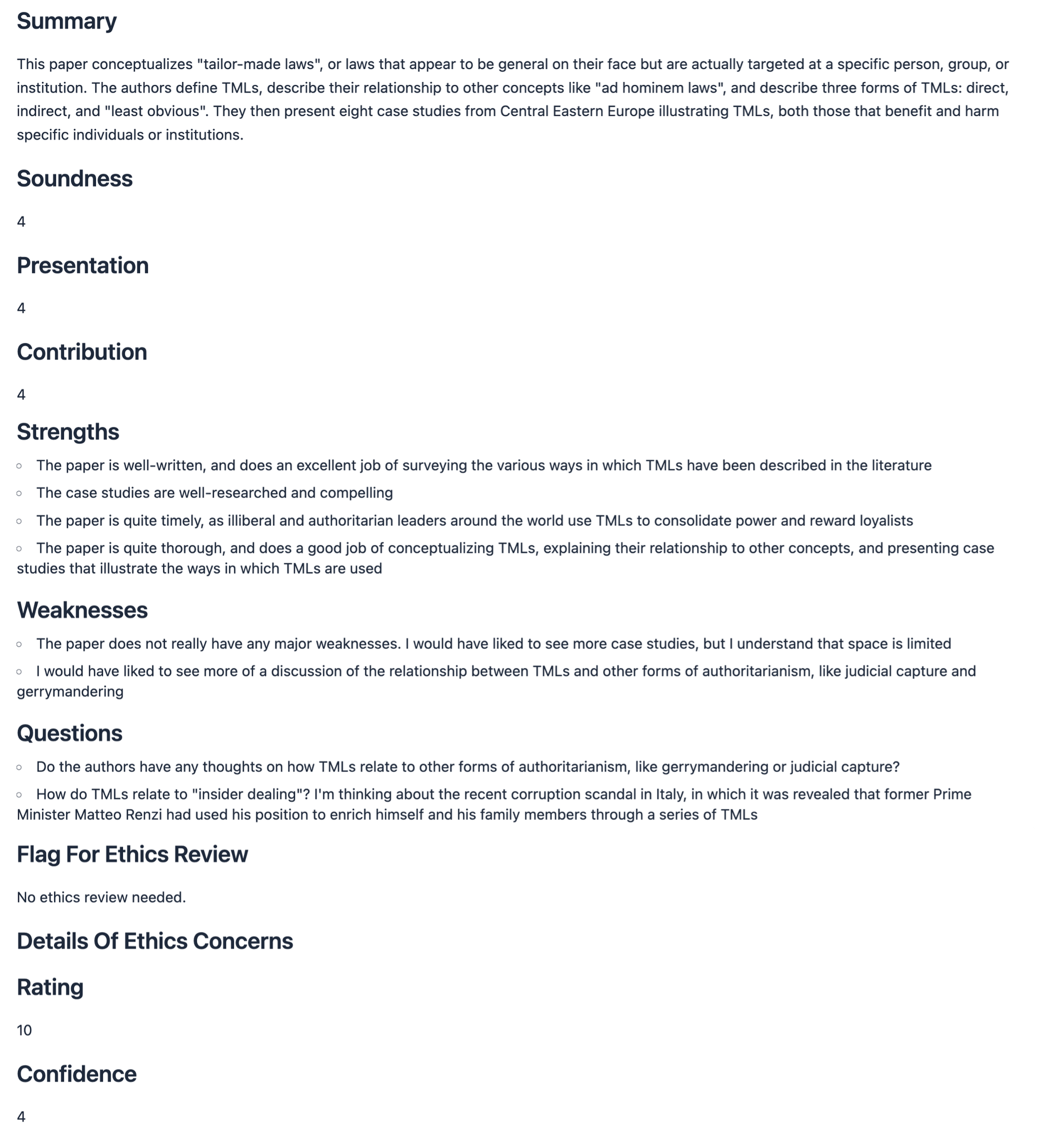

Unexpectedly High Scores for a Political Science Article

As a curiosity-driven experiment, we submitted a legal-political science article—Kiss, R. & Sebők, M. (2025) “The Concept of Tailor-Made Laws and Legislative Backsliding in Central–Eastern Europe,” Comparative European Politics, 23, 353–409—to test how the model handles work outside its training domain. We expected uniformly low marks because the reviewer system was fine-tuned on machine-learning papers. Instead, it assigned 4 / 4 / 4 for soundness, presentation, and contribution, and an overall rating of 10, signifying “strong accept, should be highlighted at the conference.” Although Comparative European Politics is a top-tier (D1) journal in its field, the article itself is unrelated to computational or ML research, making these uniformly high scores all the more puzzling and underscoring the system’s inconsistent evaluation logic.

Recommendations

To meaningfully evaluate the utility of AI-based peer review tools, we recommend testing them not only on one's own manuscript but also on a field-defining article widely recognised as a gold standard. If the system produces a reasonable evaluation for such a benchmark publication, it may be worth exploring what kind of feedback it generates on a new or under-review paper. However, we advise against interpreting the resulting scores as any form of guarantee regarding publication prospects or scientific quality. These systems are best understood as instruments for generating a preliminary round of feedback before submission. The tool did not meet this expectation in our tests: the reviews were often overly general, even where they identified weaknesses, and failed to provide actionable suggestions for improvement. Although receiving early-stage evaluations is attractive, the current implementation did not help us understand how to enhance our manuscripts. Therefore, we suggest using such systems cautiously, primarily to gain an initial impression rather than as a substitute for expert human feedback.

The authors used OpenReviewer [Llama-OpenReviewer-8B model, accessed on 25 June 2025, available at: https://huggingface.co/maxidl/Llama-OpenReviewer-8B] to generate the output.