Accessing large language models (LLMs) through APIs opens up research and development opportunities that go well beyond the limits of traditional chat interfaces. For researchers, API access enables language models to be built directly into bespoke workflows, analytical tools, or automated processes, supporting tasks such as large-scale text analysis, data extraction, and integration with existing research infrastructure. Online playgrounds (like those on Hugging Face or Together.ai) allow for hands-on testing and comparison of models, including some models that do not offer public API access, but true integration into your own systems depends on the availability and effective use of an API. This article outlines the practical benefits of accessing large language models via API, introduces the main options for researchers, and highlights the key considerations to bear in mind when moving beyond the chat interface.

What Is an LLM API—and Why Does It Matter?

A Large Language Model (LLM) API is a technical interface that allows users to send queries and receive outputs from an advanced language model through code or dedicated software, rather than via manual interaction in a web browser. Unlike chat interfaces designed for direct user input, an API enables automated and repeatable access to LLMs from scripts, research tools, or custom applications. This approach is particularly valuable for researchers and developers. API access supports large-scale text analysis, high-throughput data processing, and systematic evaluation of model outputs. Such capabilities are essential for reproducible experiments, efficient workflows, and integrating LLM-based solutions into existing research environments.

A further major benefit of API access is the ability to set generation parameters, such as temperature, seed, top-k, and top-p, on each request. Adjusting these parameters programmatically gives control over the creativity, determinism, and variability of model outputs. For researchers, this allows systematic benchmarking, rigorous evaluation, and reproducible experimentation at scale. For a detailed explanation of these settings and their research significance, see our earlier guide.
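As an illustration of setting these parameters programmatically, the sketch below builds one request payload per combination of temperature and seed. The field names follow the OpenAI-style chat completions format and the model name is only a placeholder; both are assumptions, and other providers may use different names or may not accept every parameter (top-k, for instance, is left out because not all providers support it).

```python
import itertools


def build_payloads(prompt, temperatures, seeds, model="gpt-4", top_p=1.0):
    """Return one request payload per (temperature, seed) combination."""
    payloads = []
    for temperature, seed in itertools.product(temperatures, seeds):
        payloads.append({
            "model": model,  # placeholder model name, for illustration only
            "messages": [{"role": "user", "content": prompt}],
            "temperature": temperature,  # higher values give more varied output
            "top_p": top_p,              # nucleus sampling cut-off
            "seed": seed,                # best-effort reproducibility where supported
        })
    return payloads


payloads = build_payloads(
    "Summarise the abstract in one sentence.",
    temperatures=[0.0, 0.7, 1.0],
    seeds=[1, 2],
)
print(len(payloads))  # 6 configurations (3 temperatures x 2 seeds)
```

Each payload can then be sent to the API in turn, which makes it easy to compare outputs across settings in a systematic way.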

Leading LLM providers—including OpenAI, Google, and Anthropic—offer secure APIs for their most advanced models. Through these interfaces, users can automate content generation, information extraction, and text analysis, as well as perform advanced tasks that would be impractical to carry out manually. As a result, LLM APIs have become fundamental tools for contemporary research and development, enabling language models to be used in a much wider range of applications than chat-based prompting alone.

Leading Providers and Model Access Options

A growing number of providers offer access to state-of-the-art large language models via secure APIs, enabling integration into research workflows, applications, and data pipelines. Below is a brief overview of the most prominent providers and where their models can be accessed:

- Anthropic - Anthropic API

- Cohere - Cohere API

- DeepSeek - DeepSeek API

- Google (Gemini) - Gemini API

- xAI (Grok) - Grok API

- Meta - Meta Llama API

- Mistral AI - Mistral AI API

- OpenAI - OpenAI API

- Alibaba - Qwen API

Testing and Prototyping LLMs: The Function of Playground Interfaces

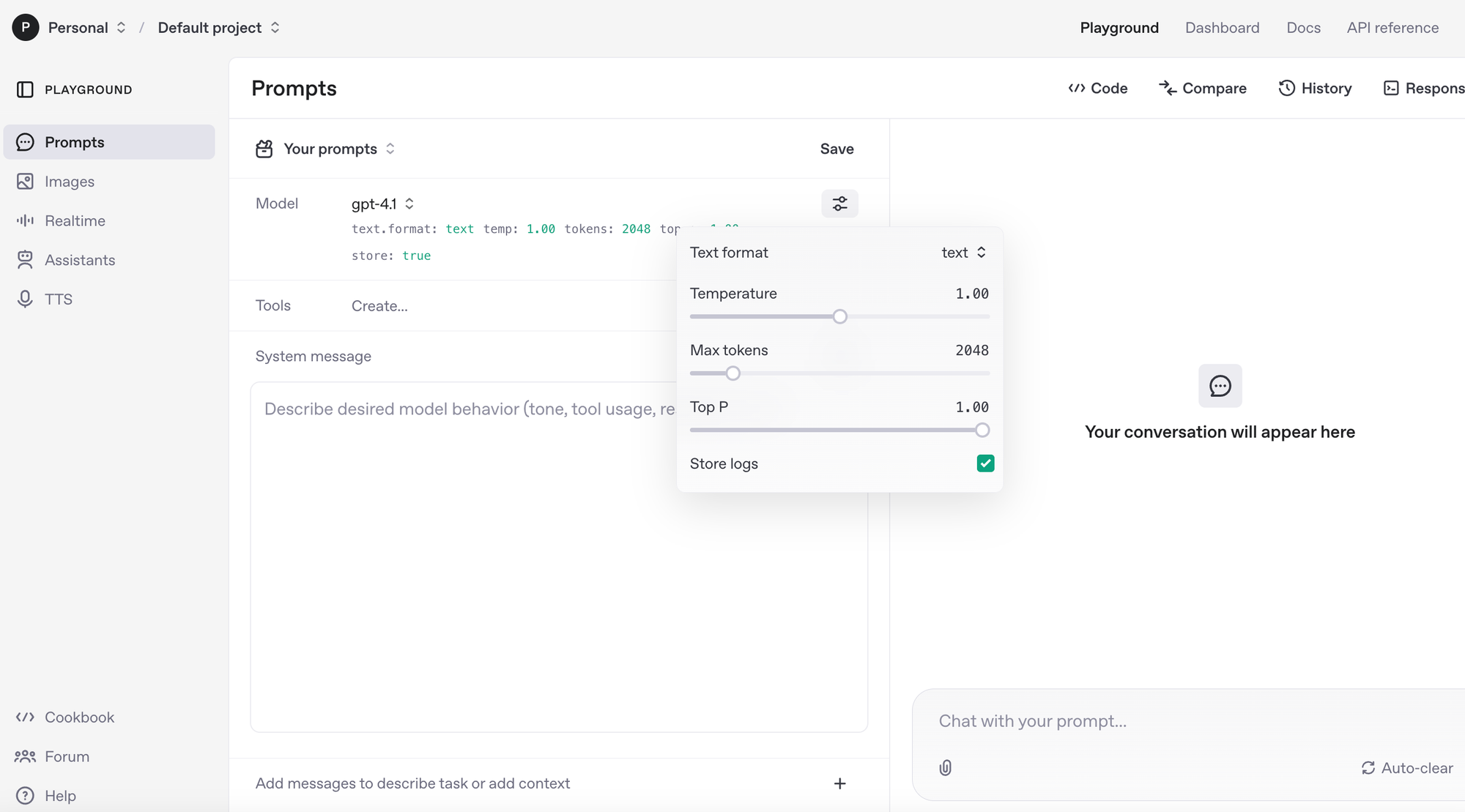

Online playgrounds—such as OpenAI’s Playground, Anthropic’s Claude Console, or Google’s AI Studio—are browser-based interfaces that sit atop the same APIs used for programmatic access to large language models. These tools enable researchers and developers to experiment interactively with prompts, parameters, and model behaviour without writing code or managing API keys. In many cases, playgrounds reveal the corresponding API calls for each interaction, allowing users to transition smoothly from manual exploration to automated workflows. While playgrounds are invaluable for rapid prototyping and testing, scalable and reproducible research applications require direct API integration, which allows for automation, batch processing, and integration within bespoke software tools.

Playgrounds not only enable hands-on interaction with leading language models, but also provide valuable controls for both prompt engineering and model configuration. Most playgrounds allow researchers to set a dedicated “system prompt”, defining the overall behaviour, tone, or task-specific constraints for the model throughout the session. This is particularly useful for guiding outputs to meet specific research needs or to simulate consistent roles. In addition, playgrounds typically offer direct adjustment of model parameters, such as temperature, top-k, and top-p, allowing users to experiment with different settings interactively. This flexibility enables researchers to tune model behaviour and settle on a suitable configuration in an accessible, code-free environment before moving on to more complex, API-driven workflows.

API Integration: How Does It Work?

Integrating large language models into your own research workflows via API is a straightforward process, but it requires careful attention to authentication, data handling, and provider-specific usage limits.

Obtaining and Using an API Key

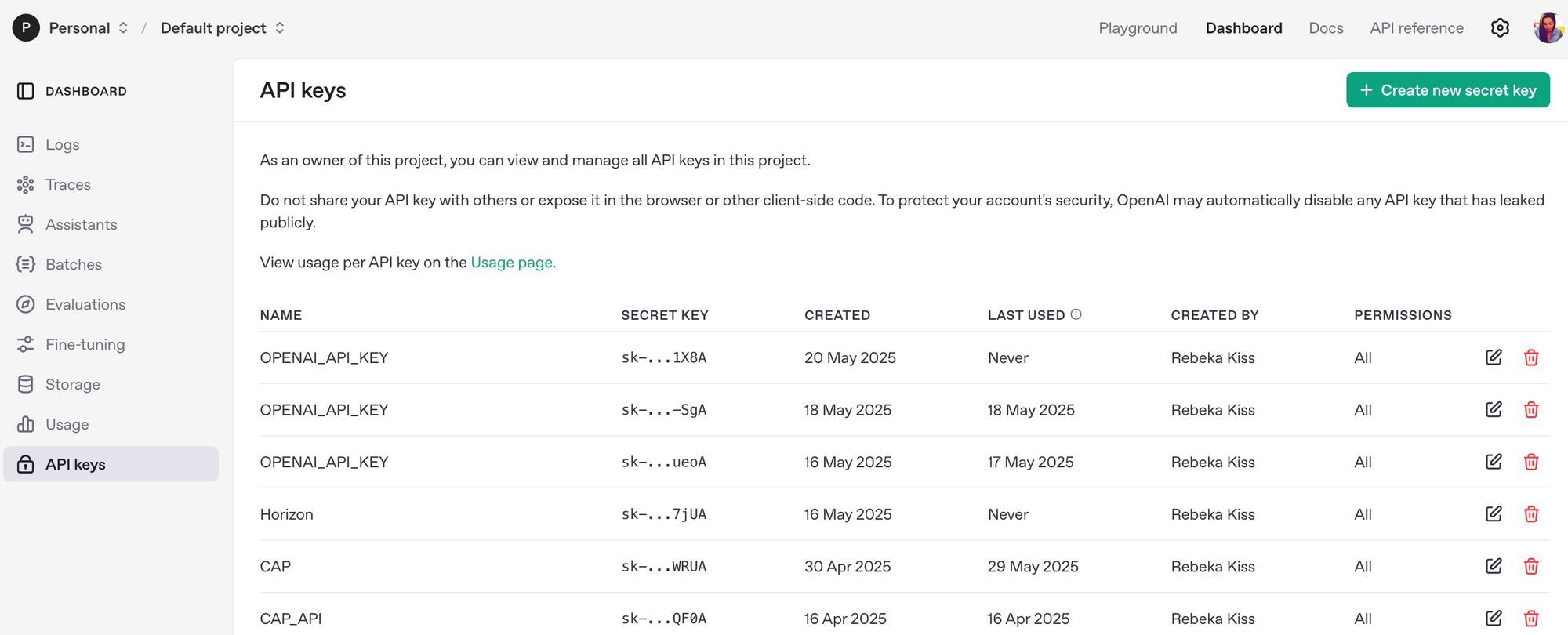

The first step is to sign up with the chosen LLM provider (such as OpenAI, Anthropic, Cohere, or Mistral) and generate an API key via their developer dashboard. This key is a unique alphanumeric string that identifies and authenticates your requests.

When generating a new API key via your provider’s dashboard, always remember to copy the key and store it securely. For security reasons, most platforms will only display the full API key once at the moment of creation—afterwards, you cannot retrieve the same key again. If the key is lost, you will need to generate a new one and update all scripts or applications that rely on it. Never share your API key in public code, notebooks, or repositories.

Securing Your API Key in Google Colab

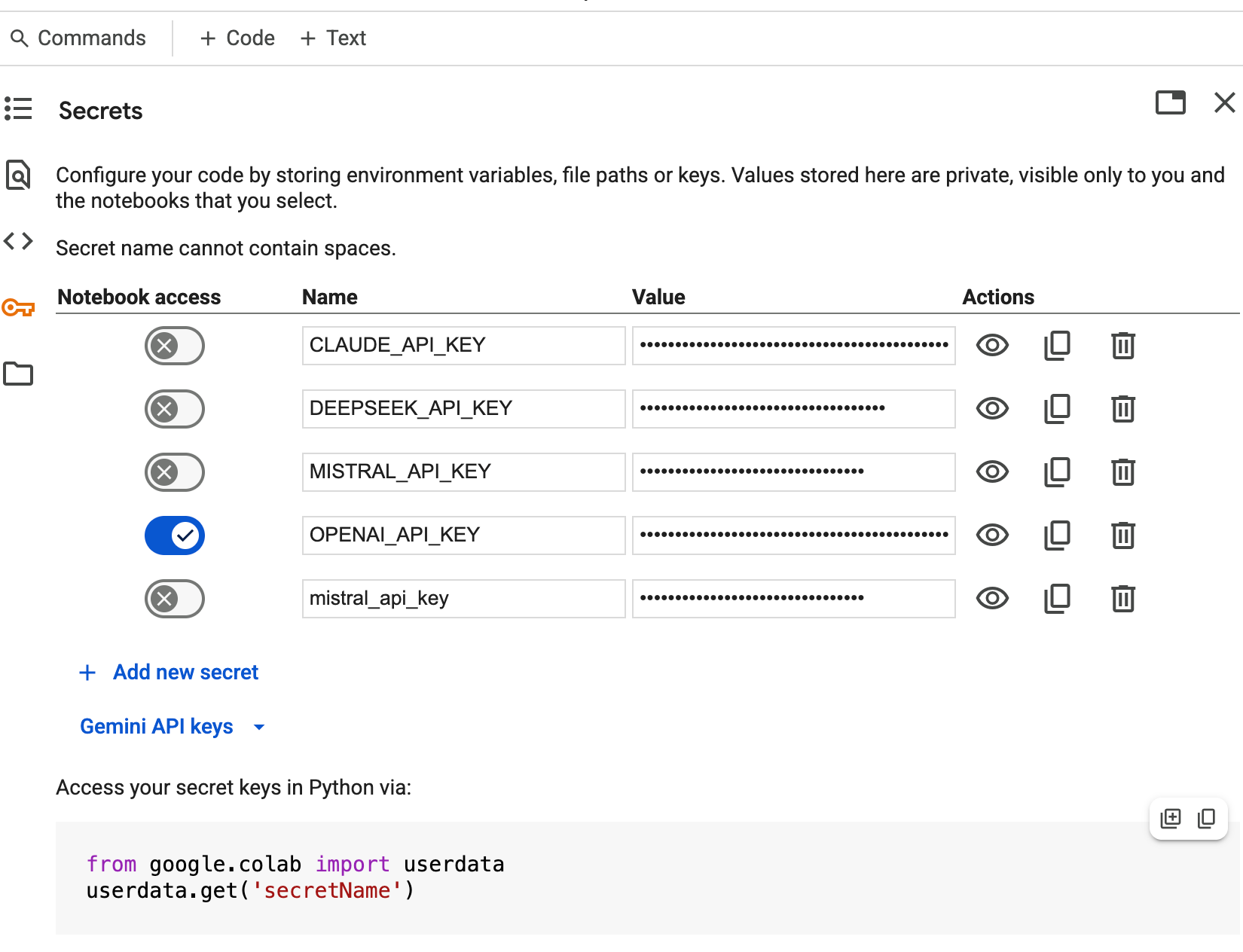

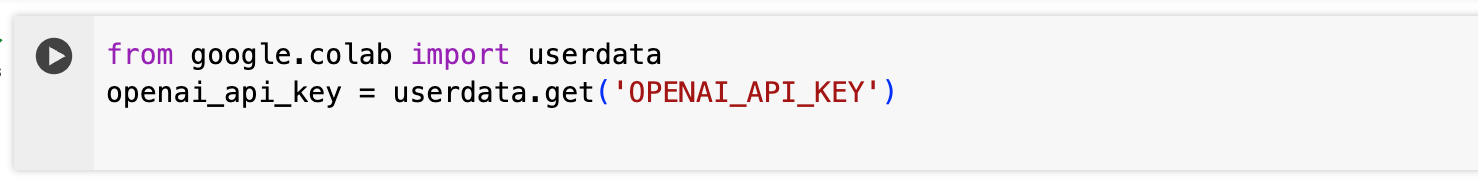

When using an API key in a collaborative environment such as Google Colab, never hard-code the key directly in your scripts or notebooks. Instead, store it in a secure environment variable or the platform’s built-in secrets manager.

In Google Colab, you can safely store your API keys under the “Secrets” tab, as shown above. This feature keeps sensitive information private: secrets are visible only to you and are not exposed in shared or published notebooks. Once a secret is saved and notebook access is enabled for it, you can read it from your code by its name.
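A minimal sketch of reading a stored secret is shown here. It uses Colab's `google.colab.userdata` module and, as an added assumption for running the same code outside Colab, falls back to an environment variable of the same name. The helper name `get_api_key` is hypothetical.

```python
import os


def get_api_key(name):
    """Read a key from Colab Secrets, falling back to an environment variable."""
    try:
        # This import only succeeds inside Google Colab.
        from google.colab import userdata
        return userdata.get(name)
    except ImportError:
        # Outside Colab: expect the key in an environment variable of the same name.
        key = os.environ.get(name)
        if key is None:
            raise RuntimeError(f"No secret or environment variable named {name}")
        return key
```

For example, `api_key = get_api_key("CLAUDE_API_KEY")` would return the value saved under that name, without the key itself ever appearing in the notebook.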

By storing your API keys in this way, you reduce the risk of accidental disclosure and maintain a secure research workflow. Assign each key a clear and unique name (e.g. CLAUDE_API_KEY, MISTRAL_API_KEY), and always avoid sharing notebooks that might reveal sensitive information. If you need to collaborate with others, make sure all secrets are removed or replaced with dummy values before sharing the notebook.

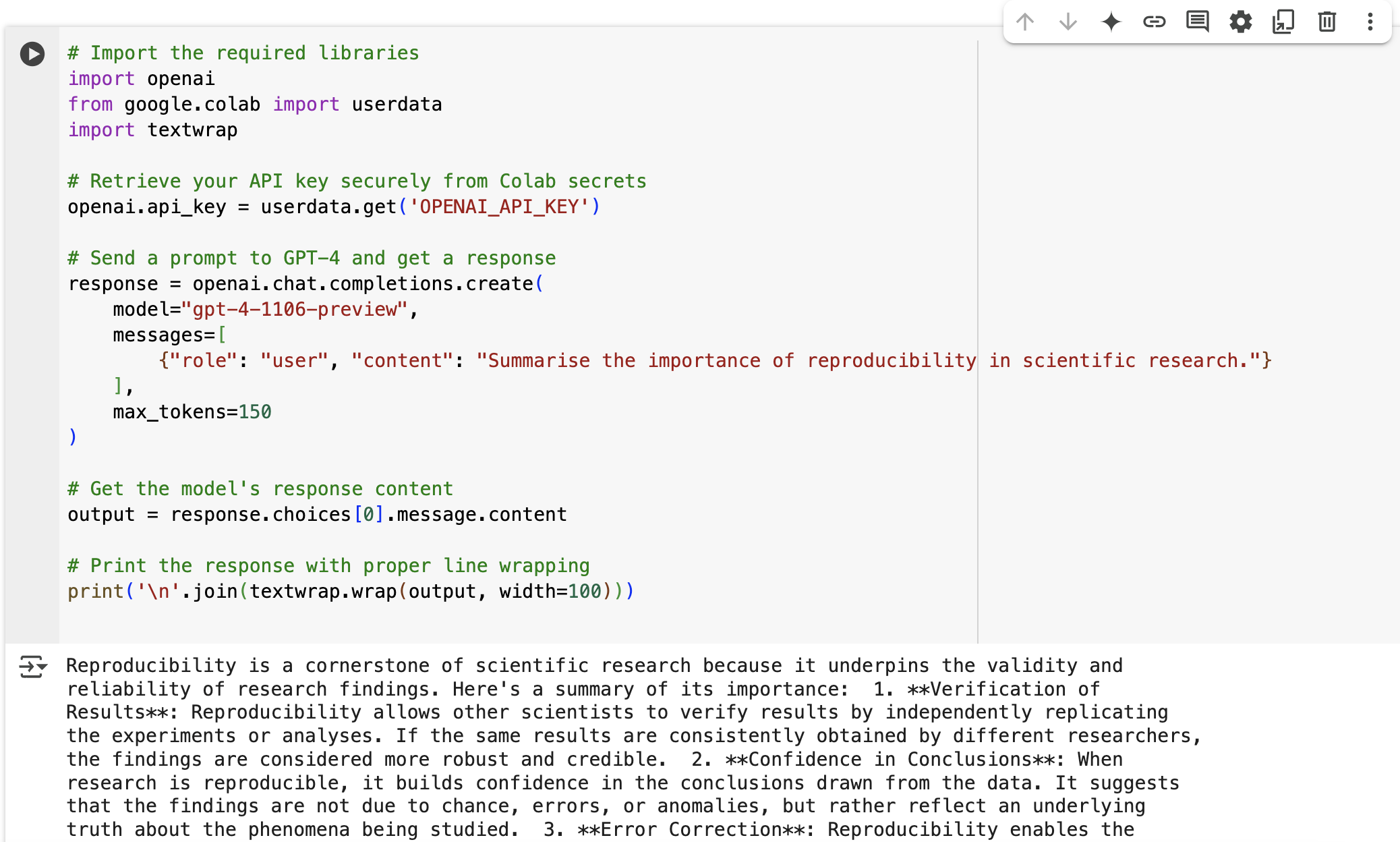

Example: Accessing GPT-4 via the OpenAI API in Python

Below is a minimal example demonstrating how to send a prompt to OpenAI’s GPT-4 model using the official API. The code assumes you have securely stored your API key (see previous section).
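The sketch below shows one way such a request might look. To stay self-contained it calls the chat completions HTTP endpoint with Python's standard library rather than the `openai` package. The function names `build_request` and `ask` are hypothetical, the model name `gpt-4` is taken from the heading above, and the `api_key` variable is assumed to hold the key loaded from your secrets store.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-4", temperature=0.0):
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the key authenticates the call
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, api_key, **kwargs):
    """Send the prompt and return the text of the first completion."""
    request = build_request(prompt, api_key, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example call (needs a valid key and network access, and is billed):
# print(ask("Explain what an API is in one sentence.", api_key))
```

Keeping request construction separate from sending makes it possible to inspect or log the exact payload before any tokens are billed.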

API Usage Costs: A Brief Overview

API access to large language models is typically not free. Most providers charge by usage, commonly by the number of tokens processed, counting both input (prompt) and output (generated) text. The table below summarises standard pricing for popular models as of May 2025. Pricing may vary by provider, model version, and usage volume, so always check the latest details on each provider’s website.

| Provider | Model | Input Price / 1M tokens | Output Price / 1M tokens | Pricing Link |
|---|---|---|---|---|
| OpenAI | GPT-4.1 | $2.00 | $8.00 | OpenAI |
| OpenAI | GPT-4.1 mini | $0.40 | $1.60 | OpenAI |
| OpenAI | GPT-4.1 nano | $0.10 | $0.40 | OpenAI |
| Anthropic | Claude Opus 4 | $15.00 | $75.00 | Anthropic |
| Anthropic | Claude Sonnet 4 | $3.00 | $15.00 | Anthropic |
| Anthropic | Claude Haiku 3.5 | $0.80 | $4.00 | Anthropic |
| Mistral | Medium 3 | $0.40 | $2.00 | Mistral |
| Mistral | Small 3.1 | $0.10 | $0.30 | Mistral |
| DeepSeek | Chat | $0.27 (cache miss), $0.07 (cache hit) | $1.10 | DeepSeek |
| DeepSeek | Reasoner | $0.55 (cache miss), $0.14 (cache hit) | $2.19 | DeepSeek |
| Google | Gemini 2.0 Flash | $0.10 (text/image/video), $0.70 (audio) | $0.40 (text/image/video), $8.50 (audio) | Google Gemini |

Notes:

- Input refers to tokens submitted as part of the prompt.

- Output refers to tokens generated by the model.

- Prices may change frequently—always consult the official pricing pages for the most up-to-date information.
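To make per-token pricing concrete, the following sketch estimates the cost of a batch job from the input and output prices per million tokens. The workload figures (10,000 documents, about 500 prompt tokens and 200 generated tokens each) are hypothetical; the prices are the GPT-4.1 figures from the table above.

```python
def estimate_cost(n_requests, input_tokens, output_tokens,
                  input_price_per_m, output_price_per_m):
    """Estimate total cost in USD for n_requests calls of the same size."""
    total_input = n_requests * input_tokens
    total_output = n_requests * output_tokens
    return (total_input / 1_000_000 * input_price_per_m
            + total_output / 1_000_000 * output_price_per_m)


# Hypothetical workload at $2.00 (input) and $8.00 (output) per 1M tokens:
# 5M input tokens cost $10.00 and 2M output tokens cost $16.00.
cost = estimate_cost(10_000, 500, 200, 2.00, 8.00)
print(f"${cost:.2f}")  # $26.00
```

Because output tokens are usually priced several times higher than input tokens, limiting the length of generated text is often the most effective way to keep costs down.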

Recommendations

For researchers seeking to move beyond the constraints of chat-based interfaces, accessing large language models through APIs offers a powerful and flexible route to automate, scale, and rigorously control their workflows. While online playgrounds remain invaluable for hands-on experimentation and prompt development, API access is essential for systematic research, integration with existing analytical tools, and reproducibility at scale. Before committing to a particular provider or model, carefully review current pricing, available features, and your project’s specific requirements. Ultimately, the effective use of LLM APIs can unlock new research avenues and provide a robust foundation for building advanced, data-driven applications.

The authors used GPT-4o [OpenAI (2025), GPT-4o (accessed on 31 May 2025), Large language model (LLM), available at: https://openai.com] to generate the output.