Understanding Model Confidence Through Log Probabilities: A Practical OpenAI API Implementation

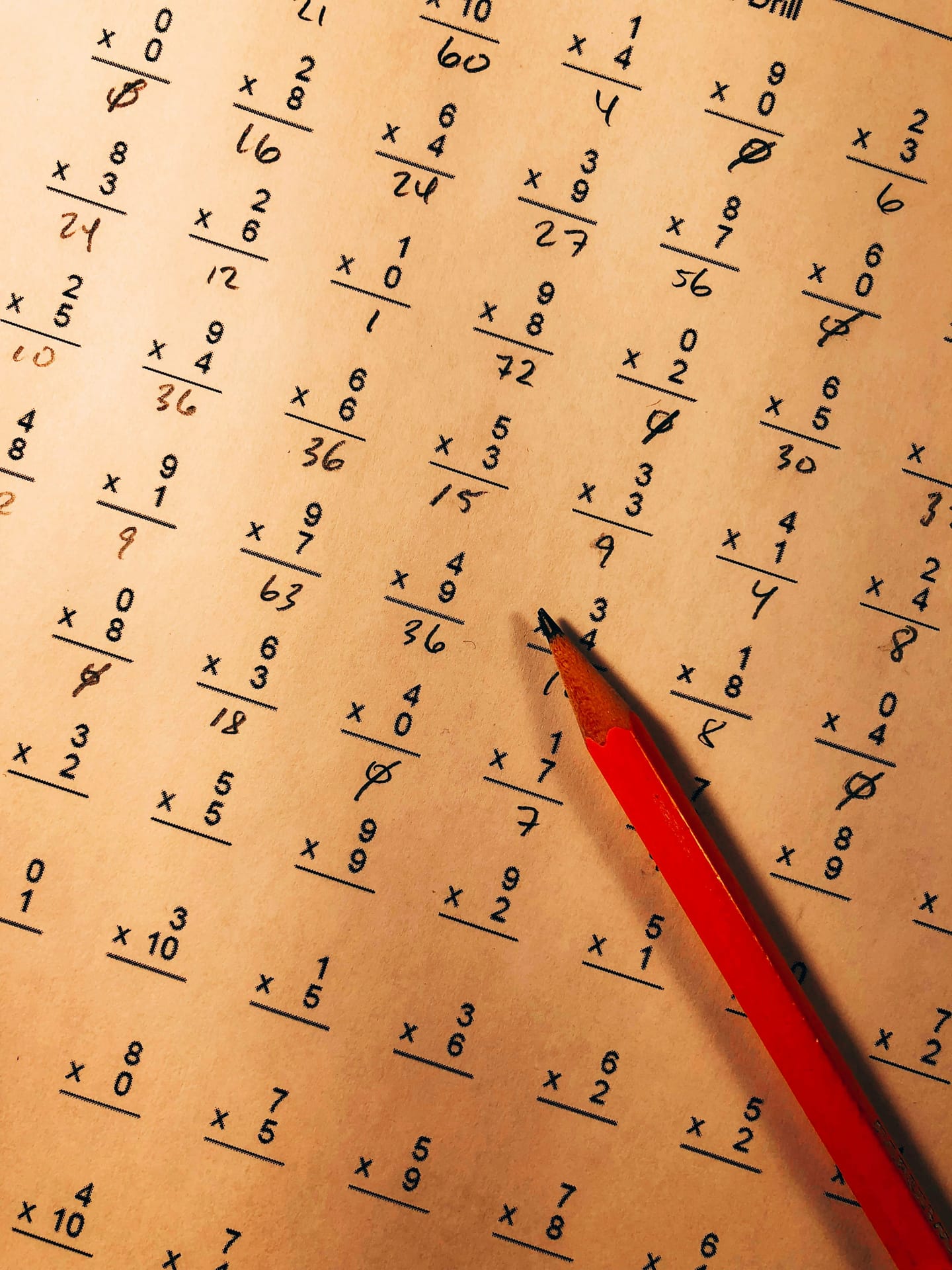

Log probabilities (logprobs) provide a window into how confident a language model is about its predictions. In this walkthrough, we demonstrate how to access and interpret logprobs via the OpenAI API, using a series of increasingly difficult multiplication tasks. Our experiment reveals that declining confidence scores can effectively signal