In this post we put NotebookLM’s new literature review feature to the test. The model was given six pre-selected sources on a single topic and asked to produce a structured review. The results were encouraging: the output drew appropriately on the provided references and offered a coherent overview. Still, there were flaws: occasional mismatched or incomplete page numbers, and minor citation errors. The text therefore cannot be used as a ready-made product without careful checking. Yet for a reader who is not fully at home in a field and needs a first overview grounded solely in selected sources, the function shows real promise. It can sketch out the main arguments and connections in a useful way, as long as one remembers that citations always need validation.

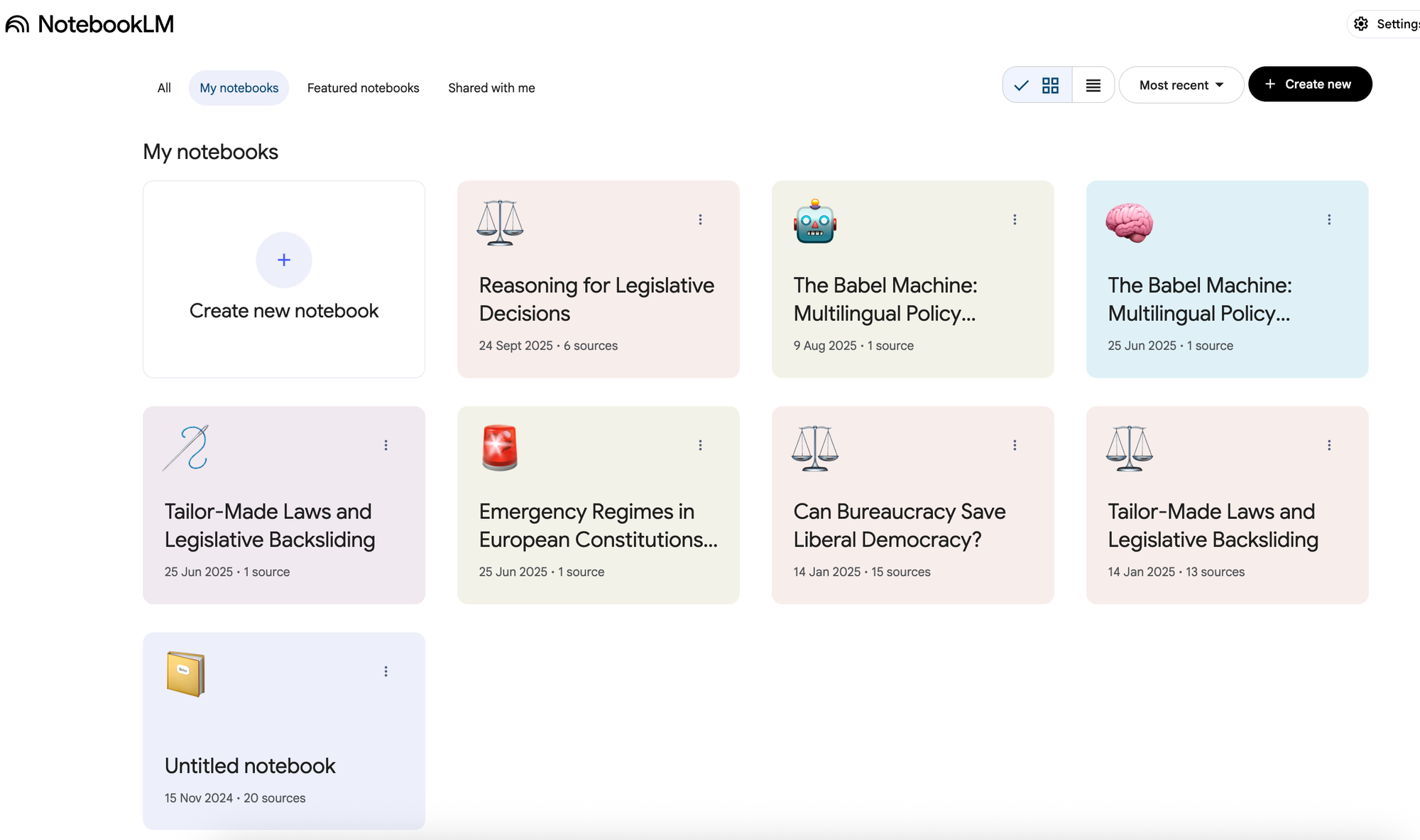

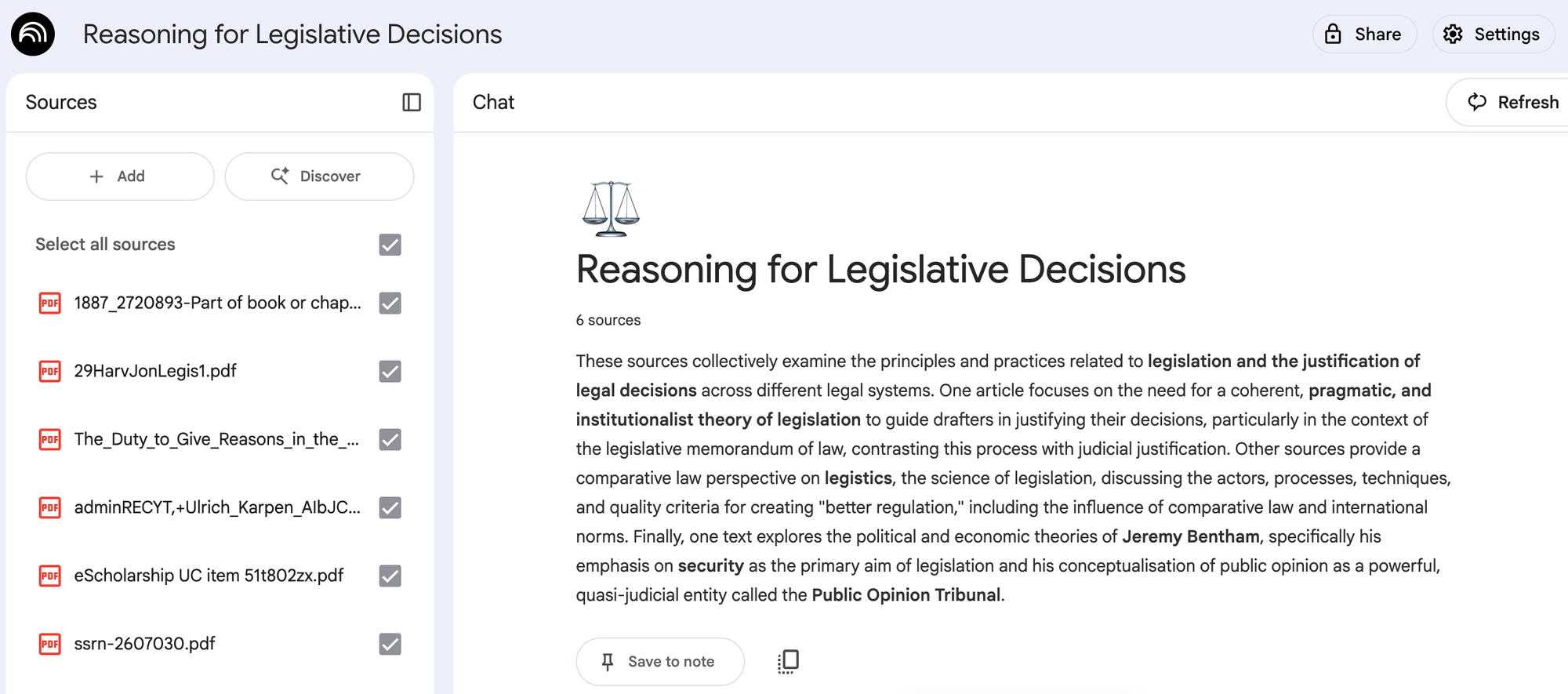

NotebookLM is Google’s experimental platform designed to support researchers, students, and writers in engaging more systematically with their sources. The tool is available at https://notebooklm.google/, where users can create notebooks that function as dedicated project spaces. Within each notebook, one can upload a set of documents, organise them thematically, and then prompt the AI to generate analyses, summaries, or literature reviews that are explicitly grounded in the selected materials.

For this test we created a dedicated notebook entitled “Reasoning for Legislative Decisions” and uploaded six scholarly sources. These included classic works on the duty to give reasons in law, comparative analyses of legislative practice, and theoretical contributions such as Ulrich Karpen’s writings on legistics and Robert Seidman’s institutionalist theory of legislation. All selected materials focused on the same substantive issue: why legislators are expected to provide explicit reasoning for their decisions, for instance through explanatory memoranda attached to statutes.

Prompt

Once the sources were in place, the model was prompted with instructions to produce a literature review that would rely exclusively on the uploaded documents. The request specified that the review should be written in a coherent academic style, synthesising the arguments of the texts, and that all claims should be supported with Harvard in-text citations including author, year, and precise page numbers. Since the model received a narrowly curated set of PDFs on this specific theme, no additional requirements were set regarding length or structure. The purpose was to obtain a comprehensive review processing several hundred pages of material, thereby assessing the model’s capacity to provide an initial overview in a specialised field based solely on the selected sources.

Output

The resulting literature review demonstrated a solid capacity to synthesise the six selected sources into a coherent narrative. Substantively, the model drew effectively on the uploaded texts and covered the main arguments advanced in the scholarship.

Nonetheless, a number of inaccuracies emerged in the citations. For example, the passage stating that “The core principle, as articulated in a Council of Europe resolution, is that when an act adversely affects a person’s rights, liberties, or interests, they should be informed of the reasons on which it is based” (Waaldijk, 1987, p. 111) misattributes the reference, since Waaldijk does not cite a Council of Europe resolution at this point in the text.

Such misattributions and minor page errors were noticeable, though they can be caught through subject-matter expertise and careful checking against the sources. Despite these flaws, the overall review offered a well-structured and informative synthesis of several hundred pages of material, showing the potential of the tool to provide a meaningful first overview when constrained to a defined corpus of sources.
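For readers who want to organise the checking step, here is a minimal Python sketch that pulls Harvard in-text citations out of a generated review and groups them by source, so that each page reference can be looked up by hand. It is an illustration only: the regular expression assumes citations of the form (Author, year, p. N) or (Author, year, pp. N-M), as requested in our prompt, and the function names are hypothetical. It does not verify anything by itself and is not part of NotebookLM.

```python
import re
from collections import defaultdict

# Assumed citation shape: "(Waaldijk, 1987, p. 111)" or "(Author, 2001, pp. 3-5)".
# Citations written differently (e.g. two authors with "&", missing pages)
# will be skipped by this pattern and still need to be checked by eye.
CITATION_RE = re.compile(
    r"\((?P<author>[A-Z][^,()]+),\s*(?P<year>\d{4}),\s*"
    r"pp?\.\s*(?P<start>\d+)(?:\s*[-–]\s*(?P<end>\d+))?\)"
)


def extract_citations(text):
    """Return (author, year, first_page, last_page) tuples found in text."""
    citations = []
    for match in CITATION_RE.finditer(text):
        start = int(match.group("start"))
        # A single-page citation has no end page; reuse the start page.
        end = int(match.group("end")) if match.group("end") else start
        citations.append(
            (match.group("author").strip(), int(match.group("year")), start, end)
        )
    return citations


def group_by_source(citations):
    """Group page ranges by (author, year) to build a manual checklist."""
    grouped = defaultdict(list)
    for author, year, start, end in citations:
        grouped[(author, year)].append((start, end))
    return dict(grouped)


if __name__ == "__main__":
    # The citation below is the one discussed in the Output section.
    review = (
        "They should be informed of the reasons on which it is based "
        "(Waaldijk, 1987, p. 111)."
    )
    for source, pages in group_by_source(extract_citations(review)).items():
        print(source, pages)
```

The resulting checklist only tells you where to look. Whether the cited page actually supports the claim, as in the Waaldijk example, still has to be judged against the source itself.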

Recommendations

NotebookLM’s literature review feature shows real promise as an exploratory tool for generating an initial synthesis of a specialised field from a curated set of sources. It can process large volumes of text and organise the main arguments into a coherent overview, making it especially useful when researchers or students seek a first mapping of a topic without prior expertise. However, the experiment also revealed weaknesses in citation accuracy, including misattributions and page mismatches, which means the output cannot be treated as a ready-made product. Its value lies in accelerating the early stages of a literature review and highlighting key debates, but critical validation and manual refinement remain essential to ensure academic rigour.

The authors used Google NotebookLM [Google (2025) NotebookLM (accessed on 24 September 2025), AI-powered research assistant, available at: https://notebooklm.google] to generate the output.