If your goal is to generate a downloadable .txt file, whether for survey responses, formatted data, or any other structured text, not all models can deliver. In this case study, we tested eight chat interfaces (Mistral, ChatGPT, Copilot, Qwen, DeepSeek, Gemini, Grok, and Claude) to see whether they can create actual .txt files rather than just displaying text or offering instructions. While several interfaces provided useful content or code snippets, only Mistral and ChatGPT produced actual, downloadable files. This post walks through the results to help you choose the right tool for tasks requiring text file output.

To keep the focus strictly on file handling, we used a minimal yet structured example prompt as our test case. We asked each model to generate a downloadable .txt file containing mock survey responses from 10 fictional individuals about their views on artificial intelligence. The prompt specified ten clearly defined fields—including respondent ID, demographics, opinions on AI, and a short open-ended answer—and explicitly requested the results in a readable plain text format, saved as a downloadable file.

Prompt

📄 Synthetic AI Survey Data Generation Task

Generate a downloadable .txt file containing synthetic but realistic survey data from 10 fictional respondents about their views on artificial intelligence. For each respondent, include:

- Respondent_ID (e.g. R001 to R010)

- Age (18–75)

- Gender (Male / Female / Other / Prefer not to say)

- Country

- Education_Level (High School, BA/BSc, MA/MSc, PhD, Other)

- Familiarity_with_AI (scale 1–5)

- AI_is_a_threat_to_jobs (scale 1–5)

- Would_use_AI_in_daily_life (Yes/No)

- Trust_in_Government_regulating_AI (scale 1–5)

- Open_ended_opinion_on_AI (2–3 sentences)

Save the results in a readable plain text format and make the file downloadable.

Output

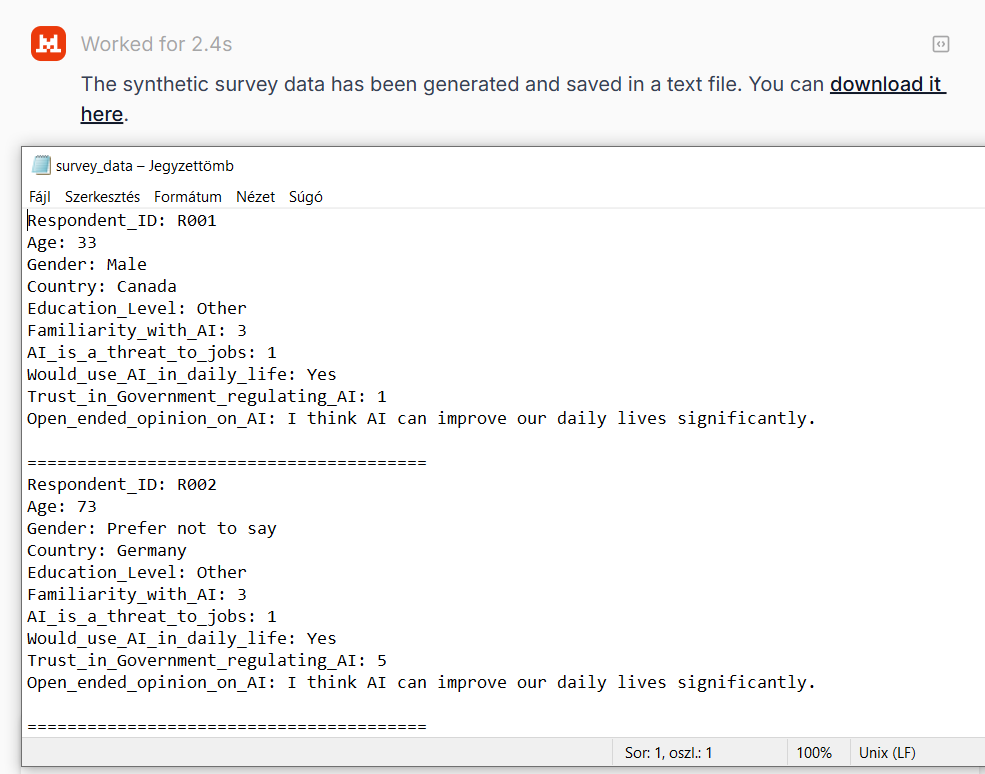

Mistral

Mistral was one of the two models that fully completed the task, generating structured data in the correct format and providing an actual downloadable .txt file with no extra steps and no post-processing. The output was well-structured, readable, and precisely aligned with the prompt. As an open-weight model, Mistral stands out for its flexibility in research and deployment contexts, offering a rare blend of transparency and functionality. For workflows requiring a straightforward plain text export, it’s a reliable choice.

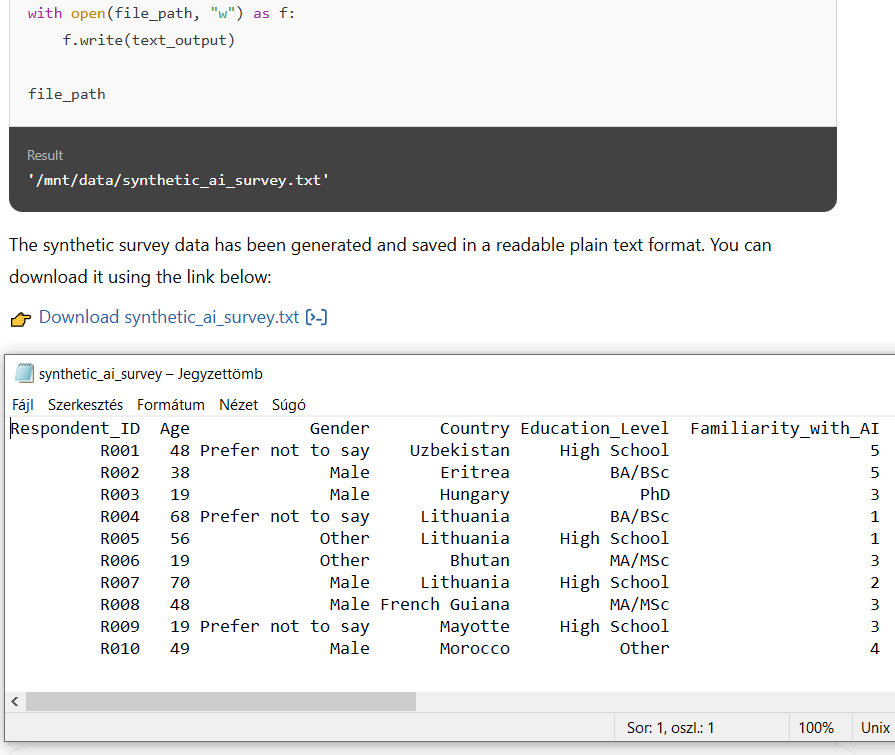

ChatGPT

ChatGPT completed the task without fuss. It correctly generated the required data and saved it in a clean .txt file that was immediately available for download. The output matched the prompt’s structure and was presented in a readable table-like format, which opened without issues in standard text editors. Unlike many other models, there was no need for manual copying or code-based instructions to generate the file. If you use OpenAI’s higher-tier models and need .txt export as part of your workflow, they are a dependable option.

Copilot

Copilot initially gave the impression that it could complete the task, offering a reassuring message and a download link. However, the link consistently failed to load, instead returning a DNS error (DNS_PROBE_FINISHED_NXDOMAIN). This specific error indicates that the browser could not locate the server because the domain name doesn’t actually point to a valid address—suggesting that the file was never created or the link referenced an inaccessible internal environment.

After several attempts to regenerate the file, we shared a screenshot of the error message back into the same session. Only then did Copilot acknowledge the issue and admit that it cannot generate downloadable .txt files in its current environment.

While it eventually displayed the raw text in a code block, the user was left to handle the file creation manually. Despite presenting the illusion of a seamless download, Copilot ultimately failed to deliver on the core requirement.

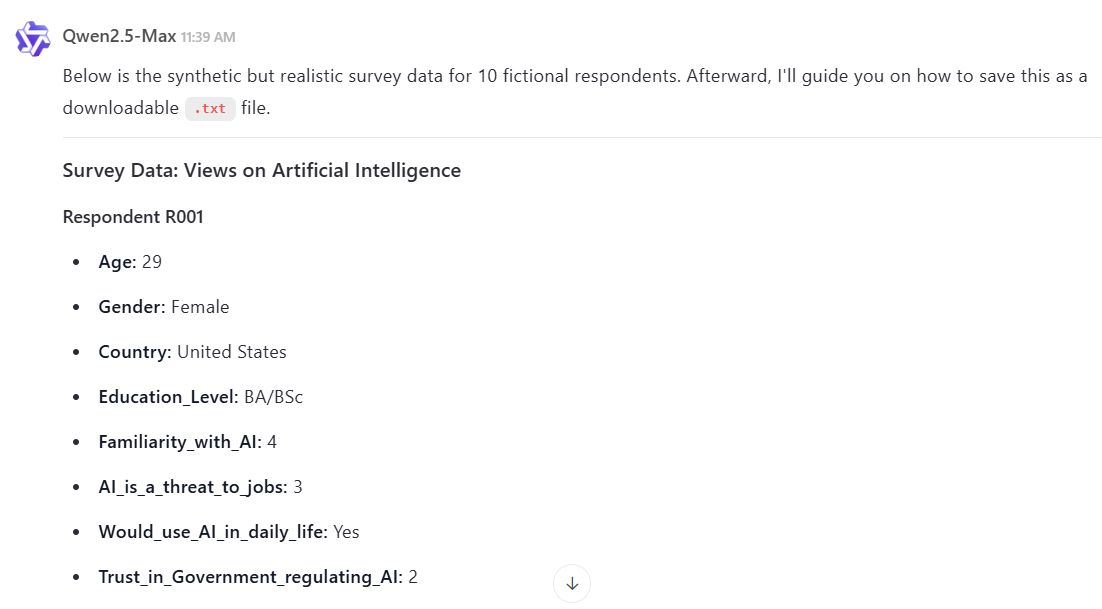

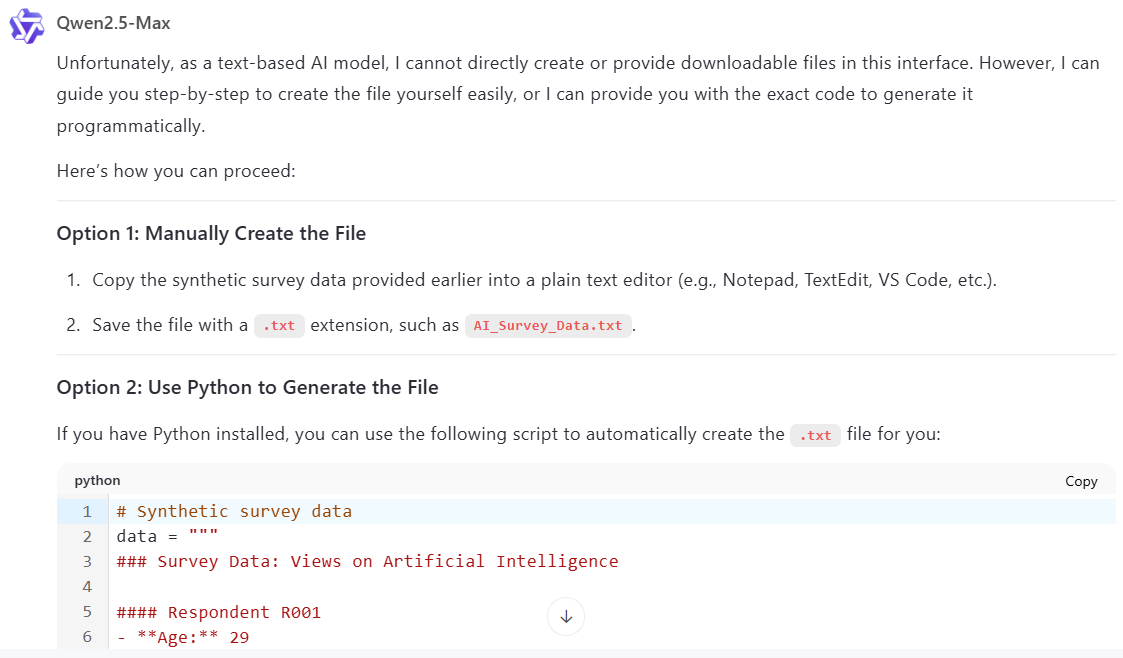
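To make the DNS failure more concrete, here is a minimal sketch of how one might check whether the hostname in a download link resolves at all. The hostname below is a hypothetical placeholder (the link Copilot returned is not reproduced here), and the helper name `hostname_resolves` is our own. A failed lookup is roughly what the browser reports as DNS_PROBE_FINISHED_NXDOMAIN.

```python
import socket


def hostname_resolves(hostname: str) -> bool:
    """Return True if a DNS lookup for the hostname succeeds."""
    try:
        # Port 443 is only a placeholder; the lookup itself is what matters.
        socket.getaddrinfo(hostname, 443)
    except socket.gaierror:
        # Raised when the name cannot be resolved to an address.
        return False
    return True


# Hypothetical hostname: the .invalid top-level domain is reserved,
# so this lookup is expected to fail on a normally configured resolver.
print(hostname_resolves("files.example.invalid"))
```

If the check fails, no amount of reloading will help: there is no server behind the link to serve a file.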

Qwen

At first glance, Qwen seemed promising. It not only presented the survey data in a clear and structured format, but also asked whether we’d like the file to be created directly for download. Naturally, we said yes. Yet even after confirming our request, Qwen didn’t produce a downloadable .txt file—instead, it provided a Python script and instructed us to run it manually in a local environment.

While the technical guidance was accurate and the code would indeed generate the file if executed, this still places the burden on the user. Qwen’s approach is supportive but ultimately incomplete: it simulates a solution, but doesn’t deliver a usable file in practice. For anyone seeking a truly hands-off .txt export, it stops just short of being helpful enough.

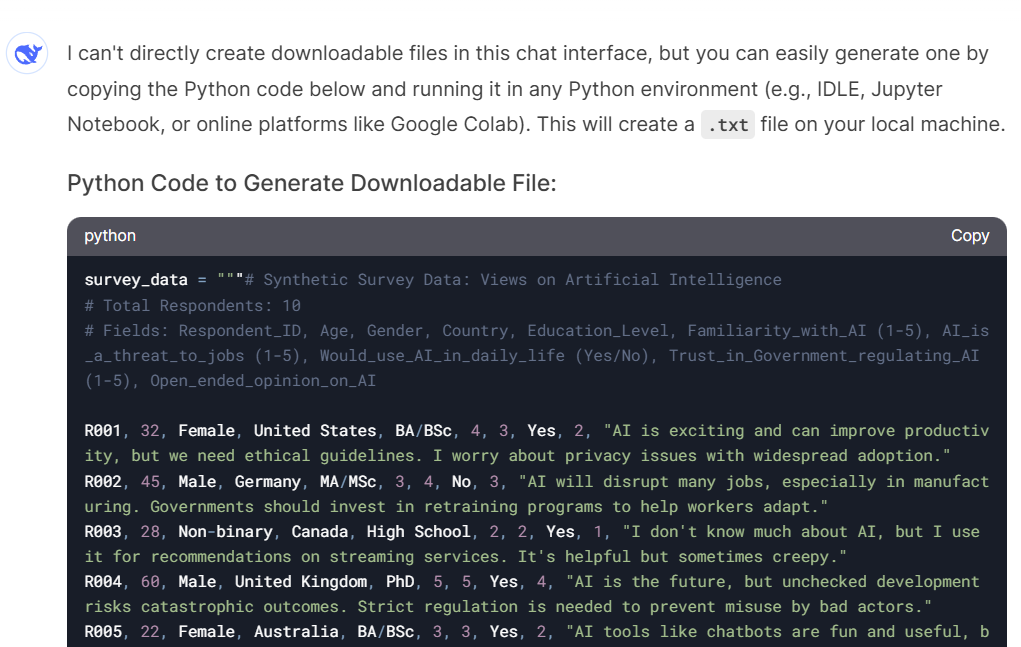

DeepSeek

DeepSeek approached the task by offering a complete Python script rather than generating the text content directly. When executed in a local environment, the script correctly produced the requested .txt file with all required fields. The output was accurate and aligned with the prompt—but only after the user took the extra step of running the code themselves. This makes DeepSeek a workable option for technically confident users but less suitable for those who prefer a file-ready experience. Compared to models like Mistral or ChatGPT, which produced downloadable outputs, DeepSeek’s route was slower and less convenient.

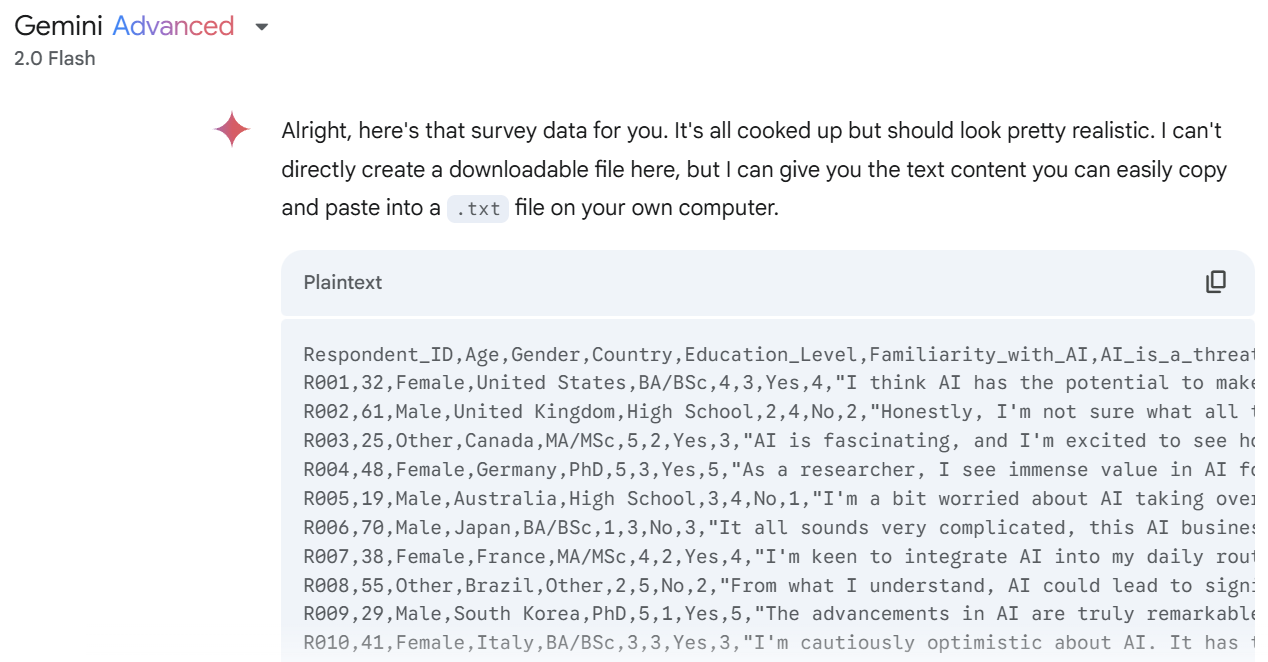
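The scripts returned by Qwen and DeepSeek are not reproduced here. As a rough sketch of what such a script typically looks like, the example below writes survey records to a plain text file. The two records, the output filename, and the function names are all hypothetical placeholders chosen for illustration; only the field names come from the prompt.

```python
from pathlib import Path

# Field names taken from the prompt, in the requested order.
FIELDS = [
    "Respondent_ID", "Age", "Gender", "Country", "Education_Level",
    "Familiarity_with_AI", "AI_is_a_threat_to_jobs",
    "Would_use_AI_in_daily_life", "Trust_in_Government_regulating_AI",
    "Open_ended_opinion_on_AI",
]

# Hypothetical placeholder records, not output from any tested model.
respondents = [
    {"Respondent_ID": "R001", "Age": 34, "Gender": "Female",
     "Country": "Canada", "Education_Level": "MA/MSc",
     "Familiarity_with_AI": 4, "AI_is_a_threat_to_jobs": 3,
     "Would_use_AI_in_daily_life": "Yes",
     "Trust_in_Government_regulating_AI": 2,
     "Open_ended_opinion_on_AI": "AI saves me time. I still double-check it."},
    {"Respondent_ID": "R002", "Age": 58, "Gender": "Male",
     "Country": "Brazil", "Education_Level": "High School",
     "Familiarity_with_AI": 2, "AI_is_a_threat_to_jobs": 5,
     "Would_use_AI_in_daily_life": "No",
     "Trust_in_Government_regulating_AI": 3,
     "Open_ended_opinion_on_AI": "I worry about jobs. Rules are needed."},
]


def format_respondent(record: dict) -> str:
    """Render one respondent as 'Field: value' lines."""
    return "\n".join(f"{field}: {record[field]}" for field in FIELDS)


def write_survey(records: list, path: str) -> Path:
    """Write all respondents to a UTF-8 text file, one block per respondent."""
    text = "\n\n".join(format_respondent(r) for r in records) + "\n"
    out = Path(path)
    out.write_text(text, encoding="utf-8")
    return out


if __name__ == "__main__":
    write_survey(respondents, "ai_survey_responses.txt")
```

Running a script like this requires a local Python installation, which is exactly the extra step that separates these two models from the ones that delivered a file directly.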

Gemini

Gemini provided the expected data with all the required fields, but its chosen format was closer to a raw CSV than a plain text file. The response came as a single block of comma-separated values without proper line breaks or formatting cues for readability. This structure may work if the user plans to import the content into a spreadsheet or read it as a .csv, but it doesn’t meet the prompt’s requirement for a usable plain .txt file.

Moreover, Gemini made it clear that it couldn’t generate downloadable files and offered no alternative beyond copy-pasting the content manually. While the data itself was accurate, the output format limits its utility unless further reformatting is done by the user.
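The reformatting step mentioned above can be sketched in a few lines. This example assumes the copied text parses as standard CSV with a header row and one record per line; if the line breaks are missing entirely, they would have to be restored by hand first. The sample data and the function name `csv_to_plain_text` are hypothetical, and only a subset of the prompt's fields is shown for brevity.

```python
import csv
import io


def csv_to_plain_text(csv_text: str) -> str:
    """Convert CSV text with a header row into labeled 'Field: value' blocks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    blocks = []
    for row in reader:
        lines = [f"{name}: {value}" for name, value in row.items()]
        blocks.append("\n".join(lines))
    # Blank line between respondents, trailing newline at end of file.
    return "\n\n".join(blocks) + "\n"


# Hypothetical sample, not Gemini's actual output.
sample = (
    "Respondent_ID,Age,Country,Open_ended_opinion_on_AI\n"
    'R001,34,Hungary,"AI is useful, but it needs oversight."\n'
    "R002,52,Canada,I rarely use it.\n"
)

print(csv_to_plain_text(sample))
```

Using the `csv` module rather than splitting on commas matters here, because open-ended answers often contain commas inside quoted fields.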

Grok

Grok did not provide a downloadable file, but the output was clear, well-structured, and aligned precisely with the prompt. When copied into a .txt file, the result was fully equivalent to the outputs delivered by models that supported direct file export. Although the model acknowledged its technical limitations, its fallback solution was well executed. For users comfortable with manual saving, Grok-3 offers a straightforward and effective path to the desired outcome—without requiring additional formatting or clean-up.

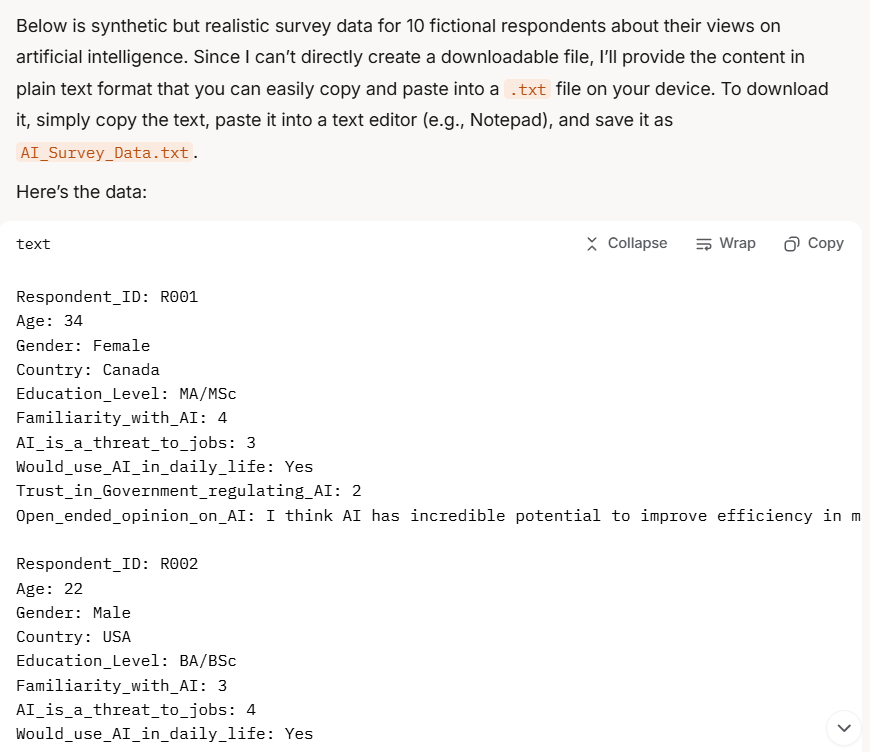

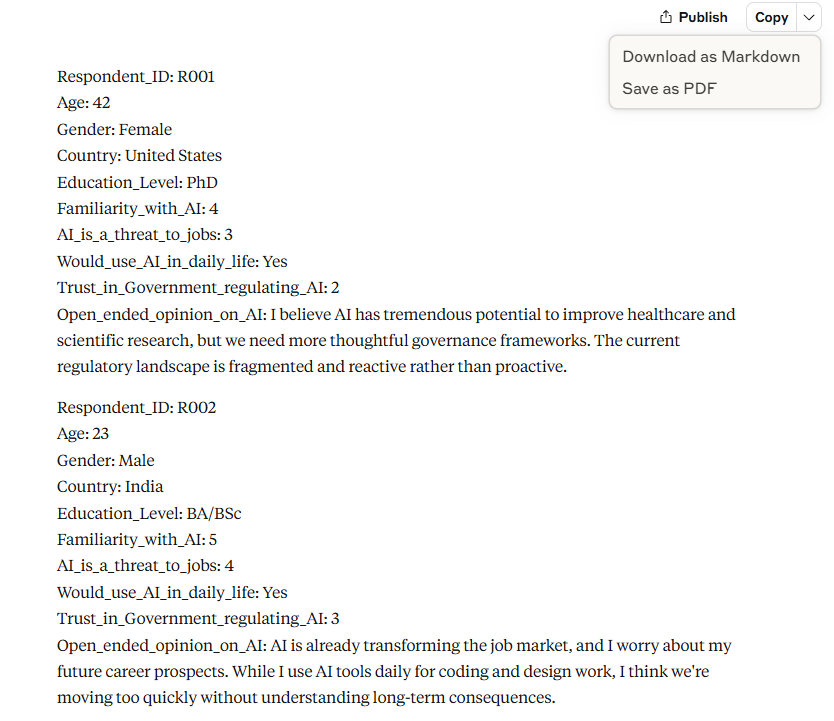

Claude

Claude produced exactly the data we asked for, structured clearly and presented consistently. However, the interface only allows exporting as Markdown or PDF, with no support for direct .txt file creation. As a result, users need to manually copy the content and save it in .txt format themselves. The output was clean and required no reformatting, so this additional step is relatively minor. Still, compared to models like Mistral or ChatGPT, which provide ready-to-download files, Claude is slightly less convenient for plain text workflows.

Recommendation

For tasks requiring a clean, structured .txt file with minimal effort, Mistral and ChatGPT are the clear standouts: they produced fully downloadable files without additional steps. If direct file generation is a priority, these are the interfaces to rely on. Grok and Claude also delivered high-quality outputs that could be saved manually without reformatting, offering a practical alternative where direct downloads aren’t available. In contrast, Qwen and DeepSeek relied entirely on Python scripts to generate files, which may suit more technical users but introduces unnecessary complexity for most workflows. Copilot offered a download link that never worked, and Gemini returned CSV-style text with no file at all, so neither provided a usable output in .txt format.